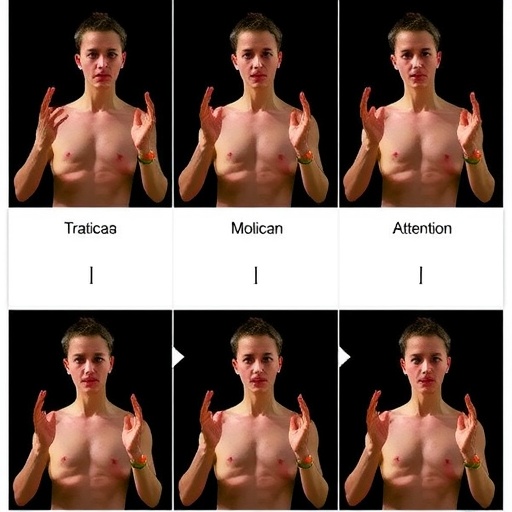

Gesture recognition, a core component of human-computer interaction, is advancing through new techniques in artificial intelligence and computer vision. A recent study by Q. Lu proposes a gesture recognition method that combines multimodal inter-frame motion analysis with shared attention weights, with the goal of improving the accuracy and adaptability of gesture recognition systems. The work aims to make communication between machines and users more intuitive, particularly in complex environments.

Lu’s research addresses the limitations of traditional gesture recognition methods, which rely primarily on a single data source, such as visual input from cameras. That reliance can degrade performance under varying conditions like poor lighting or obstructions. By integrating multiple modalities, Lu’s approach broadens the information analyzed (incorporating motion tracking and even auditory cues) to provide richer context for interpreting gestures.

One key aspect of Lu’s framework is its emphasis on inter-frame motion analysis. Traditional systems often analyze static frames in isolation, which may not capture dynamic gestures that evolve over time. Lu’s technique tracks motion across consecutive frames, which improves recognition of gestures performed in quick succession and of gestures that differ only slightly from one another.
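To make the idea of inter-frame motion concrete, here is a minimal sketch using plain frame differencing. It is not the method from Lu’s paper, whose details are not given here; the names `frame_difference` and `motion_energy` and the toy clip are assumptions chosen for illustration.

```python
from typing import List

# A grayscale frame as rows of pixel intensities (illustrative type, an assumption).
Frame = List[List[float]]


def frame_difference(prev: Frame, curr: Frame) -> Frame:
    """Absolute per-pixel difference between two consecutive frames."""
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def motion_energy(frames: List[Frame]) -> List[float]:
    """Mean absolute inter-frame difference for each consecutive frame pair."""
    energies = []
    for prev, curr in zip(frames, frames[1:]):
        diff = frame_difference(prev, curr)
        total = sum(sum(row) for row in diff)
        count = sum(len(row) for row in diff)
        energies.append(total / count)
    return energies


# Toy clip: a bright pixel moves right for two steps, then stops.
frames = [
    [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
    [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0]],
    [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0]],
    [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0]],
]
energies = motion_energy(frames)
print(energies)  # non-zero while the pixel moves, zero once it stops
```

A single static frame cannot tell the moving pixel from a stationary one; the sequence of differences can, which is the basic intuition behind analyzing motion across frames.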

The introduction of shared attention weights further refines this recognition system, allowing it to focus on the most relevant aspects of multimodal input during gesture analysis. By dynamically adjusting these weights based on contextual factors, the system can distinguish between similar gestures more effectively. This adaptability is vital for creating robust gesture recognition applications in fields such as virtual and augmented reality, as well as assistive technologies.
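One way to picture shared attention weights is a single set of per-time-step weights, computed from all modalities together and then applied to each of them. The sketch below follows that interpretation only; it is an assumption for illustration, not the architecture described in Lu’s paper, and `shared_attention_pool`, the `query` vector, and the toy features are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def shared_attention_pool(
    modalities: List[List[Vector]], query: Vector
) -> Tuple[List[float], List[Vector]]:
    """modalities[m][t] is the feature vector of modality m at time step t.

    One score per time step is computed from all modalities jointly, so the
    resulting attention weights are shared: every modality is pooled with
    the same weights. (Hypothetical design, assumed for illustration.)
    """
    steps = len(modalities[0])
    scores = [sum(dot(mod[t], query) for mod in modalities) for t in range(steps)]
    weights = softmax(scores)
    pooled = []
    for mod in modalities:
        dim = len(mod[0])
        pooled.append(
            [sum(weights[t] * mod[t][d] for t in range(steps)) for d in range(dim)]
        )
    return weights, pooled


# Toy features for two modalities over three time steps (made-up numbers).
appearance = [[0.1, 0.0], [0.9, 0.2], [0.1, 0.1]]
motion = [[0.0, 0.1], [1.0, 0.5], [0.2, 0.0]]
weights, pooled = shared_attention_pool([appearance, motion], query=[1.0, 1.0])
print(weights)  # the middle time step receives the largest weight
print(pooled)   # one pooled vector per modality
```

Because the weights are computed once and reused, the modalities agree on which moments of the gesture matter, rather than each attending to different time steps independently.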

Beyond improved accuracy, Lu’s gesture recognition framework offers deeper insights into user intent, making systems more proactive and responsive. In environments like smart homes and autonomous vehicles, enhanced gesture recognition can lead to a seamless integration of user commands, ultimately making technology more accessible and user-friendly for everyday tasks.

The implications of this research extend notably into accessibility. By refining gesture recognition capabilities, Lu’s method can empower assistive technologies for individuals with disabilities, enabling users with limited mobility to interact effectively with their devices. This advancement fosters independence and enhances the quality of life for many. Recognizing subtle or unconventional gestures opens new avenues for inclusivity in technology use.

In an era where remote communication is increasingly prevalent, gesture recognition technology plays a pivotal role in enhancing virtual interactions. Lu’s innovative approach could significantly improve clarity and engagement in digital communications, allowing users to convey emotions and reactions more naturally, thereby reducing misunderstandings often associated with online interactions.

As artificial intelligence continues to progress, Lu’s research contributes to a growing body of work aimed at refining human-computer interaction. Future work may build on these principles, leading to more personalized, intelligent, and responsive systems. The trajectory points toward gesture recognition that is emotionally aware and contextually responsive, providing a more engaging user experience.

In summary, Q. Lu’s gesture recognition method, which integrates multimodal inter-frame motion and shared attention weights, marks a notable advance in human-computer interaction. The improvements in accuracy and responsiveness have implications across several sectors, including commercial technologies, accessibility solutions, and immersive environments. As technology becomes further intertwined with daily life, intuitive gesture recognition will only grow in relevance.

The potential for commercial application in diverse fields—from gaming to robotics—positions gesture recognition as a key area for innovation. Companies investing in these technologies are likely to gain a competitive edge by developing products that seamlessly incorporate gesture control into user experiences. As research evolves, the future promises exciting developments, paving the way for increasingly sophisticated interactions between humans and machines.

Subject of Research: Gesture recognition methods

Article Title: Gesture recognition method integrating multimodal inter-frame motion and shared attention weights.

Article References:

Lu, Q. Gesture recognition method integrating multimodal inter-frame motion and shared attention weights. Discov Artif Intell 5, 405 (2025). https://doi.org/10.1007/s44163-025-00653-7

Image Credits: AI Generated

DOI: https://doi.org/10.1007/s44163-025-00653-7

Keywords: Gesture recognition, multimodal analysis, artificial intelligence, user interaction, assistive technology, motion tracking, shared attention weights.

