In enterprise technology, AI data analysts are becoming increasingly important. Such an assistant converts natural-language questions into precise, secure, and explainable SQL, executes it against data warehouses or lakes, and returns answers users can trust. Because it is grounded in a governed semantic layer, it interprets business terms according to their agreed definitions instead of guessing from raw data.

Security is paramount: the AI enforces existing row- and column-level policies, evaluating the user's permissions in real time so that sensitive data stays protected. Explainability is equally critical. Alongside the results, the AI returns the SQL it generated and the lineage and definitions of the metrics involved, so analysts can validate each answer and learn from it.
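A minimal sketch of a request-time column check, assuming the columns a generated query references have already been extracted (for example by a SQL parser). The `Policy` class, the `authorize` function, the role names, and the column sets are all hypothetical; a real deployment would pull grants from the organization's policy engine rather than from a hard-coded mapping.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Hypothetical column-level policy: the columns each role may read."""
    allowed_columns: dict[str, frozenset[str]] = field(default_factory=dict)

    def denied(self, role: str, referenced: set[str]) -> set[str]:
        """Return the referenced columns this role may NOT read."""
        # An unknown role gets an empty allowlist, so everything is denied.
        allowed = self.allowed_columns.get(role, frozenset())
        return set(referenced) - allowed


# Illustrative grants only.
POLICY = Policy(allowed_columns={
    "analyst": frozenset({"region", "order_date", "revenue"}),
    "support": frozenset({"region", "order_date"}),
})


def authorize(role: str, referenced: set[str], policy: Policy = POLICY) -> None:
    """Raise before execution if the query touches any column the role cannot read."""
    blocked = policy.denied(role, referenced)
    if blocked:
        raise PermissionError(f"role {role!r} may not read: {sorted(blocked)}")
```

Calling `authorize("support", {"region", "revenue"})` raises `PermissionError` naming `revenue`, while the same call for `"analyst"` returns silently. Checking before execution means a denied query never reaches the warehouse at all.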

The AI’s capabilities extend beyond translating single queries. It handles multi-turn analyses, keeping memory scoped to the active session and dataset so that context never leaks between users. It also redacts sensitive inputs and outputs automatically and captures detailed telemetry for reproducibility, including model prompts, query plans, execution statistics, and result summaries.
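The sketch below illustrates session scoping and redaction under simple assumptions: regex-based redaction (the two patterns are illustrative only; production systems usually rely on a dedicated PII classifier) and a hypothetical `SessionMemory` class that keys every stored turn by user, session, and dataset, so one user's context cannot be read under another user's key.

```python
import re
from collections import defaultdict

# Illustrative patterns only; not a complete PII detector.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each sensitive match with a typed placeholder such as [EMAIL]."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


class SessionMemory:
    """Conversation memory scoped to (user, session, dataset)."""

    def __init__(self) -> None:
        self._turns: dict[tuple[str, str, str], list[str]] = defaultdict(list)

    def add(self, user: str, session: str, dataset: str, turn: str) -> None:
        # Redact before storing so sensitive values never enter memory.
        self._turns[(user, session, dataset)].append(redact(turn))

    def history(self, user: str, session: str, dataset: str) -> list[str]:
        # .get() does not create a key, and the copy protects stored state.
        return list(self._turns.get((user, session, dataset), []))
```

Because the lookup key always includes the user, a different user asking about the same session and dataset simply gets an empty history.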

Implementing such a system requires careful planning. Experts recommend starting with a semantic contract rather than with the underlying AI model. Anchoring the assistant to a metrics layer or data catalog that clearly defines entities, measures, and join logic can significantly reduce hallucinations and keep business logic consistent across tools. Treating the semantic contract as code, with versioning, tests, and promotion gates, makes it more reliable still.
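One way to treat the semantic contract as code is to declare it as versioned data and validate it in CI before promotion. The `Metric` and `SemanticContract` types, the checks in `validate`, and the example entities and metrics below are hypothetical; real metrics layers define their own file formats and test tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    entity: str        # entity the measure is computed over
    expression: str    # SQL aggregate expression
    description: str   # business definition surfaced to users


@dataclass(frozen=True)
class SemanticContract:
    version: str                          # bumped on every change, like any code artifact
    entities: dict[str, str]              # entity name -> physical table
    joins: dict[tuple[str, str], str]     # (left, right) entity pair -> join condition
    metrics: tuple[Metric, ...]

    def validate(self) -> list[str]:
        """Return every problem found; an empty list means the contract may be promoted."""
        problems = []
        names = [m.name for m in self.metrics]
        for dup in sorted({n for n in names if names.count(n) > 1}):
            problems.append(f"duplicate metric: {dup}")
        for m in self.metrics:
            if m.entity not in self.entities:
                problems.append(f"{m.name}: unknown entity {m.entity!r}")
            if not m.description.strip():
                problems.append(f"{m.name}: missing description")
        for left, right in self.joins:
            for entity in (left, right):
                if entity not in self.entities:
                    problems.append(f"join {left}-{right}: unknown entity {entity!r}")
        return problems


# Hypothetical contract; entity, table, and metric names are illustrative.
CONTRACT = SemanticContract(
    version="1.4.0",
    entities={"orders": "analytics.fct_orders", "customers": "analytics.dim_customers"},
    joins={("orders", "customers"): "orders.customer_id = customers.customer_id"},
    metrics=(
        Metric("net_revenue", "orders", "SUM(amount - refund_amount)", "Order revenue net of refunds"),
        Metric("customer_count", "customers", "COUNT(DISTINCT customer_id)", "Distinct customers"),
    ),
)

if __name__ == "__main__":
    # A CI promotion gate: fail the build if validation reports anything.
    problems = CONTRACT.validate()
    if problems:
        raise SystemExit("\n".join(problems))
    print(f"semantic contract {CONTRACT.version} passed validation")
```

Keeping the checks in code means a change to a metric definition goes through the same review, versioning, and automated gate as any other change.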

Data security should be enforced at the data plane. Using the same identity provider and policy engine as the existing business intelligence (BI) stack keeps authorization consistent. Applying row and column filters directly within the warehouse minimizes unnecessary data movement, and logging every query together with the identity of the user who ran it maintains an effective audit trail.
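As an illustration, the sketch below uses Python's built-in `sqlite3` as a stand-in warehouse. It assumes a semantic layer supplies trusted identifiers (metric expression, table, grouping column) and that row-level grants are keyed on a `region` column; `REGION_GRANTS` and `governed_query` are hypothetical. The user's filter goes in the `WHERE` clause with bound parameters, so the database applies it before aggregation, and each query is appended to an audit log together with the requesting user.

```python
import json
import sqlite3
from datetime import datetime, timezone

# Hypothetical row-level grants; in practice these would be resolved from the
# same identity provider and policy engine the BI stack already uses.
REGION_GRANTS = {"alice": ("EMEA",), "bob": ("EMEA", "APAC")}


def governed_query(conn, user, metric_expr, table, group_by, audit_log):
    """Run an aggregate with the user's row filter pushed into the database.

    Identifiers are assumed to come from the trusted semantic contract, never
    from free text; user-derived values are bound as parameters.
    """
    regions = REGION_GRANTS.get(user)
    if not regions:
        raise PermissionError(f"{user!r} has no row-level grants")
    placeholders = ", ".join("?" for _ in regions)
    # The filter sits in WHERE, so only permitted rows contribute to the aggregate.
    sql = (
        f"SELECT {group_by}, {metric_expr} AS value FROM {table} "
        f"WHERE region IN ({placeholders}) "
        f"GROUP BY {group_by} ORDER BY {group_by}"
    )
    rows = conn.execute(sql, regions).fetchall()
    # One audit record per query, tied to the requesting user.
    audit_log.append(json.dumps({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "sql": sql,
        "params": list(regions),
        "rows": len(rows),
    }))
    return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (region TEXT, revenue REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [("EMEA", 100.0), ("EMEA", 50.0), ("APAC", 70.0), ("AMER", 90.0)])
    log = []
    print(governed_query(conn, "alice", "SUM(revenue)", "orders", "region", log))
    print(log[-1])
```

Filtering inside the engine, rather than fetching all rows and filtering in the application, is what keeps restricted rows from ever leaving the data plane.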

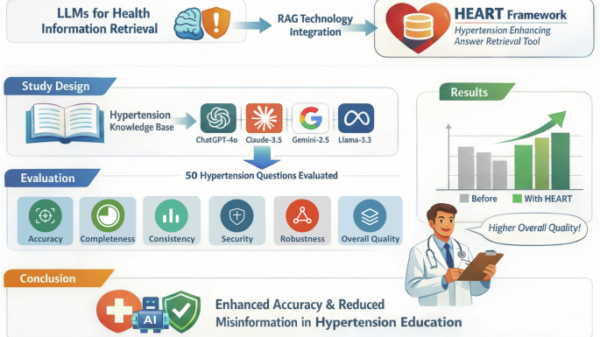

To improve answer quality, ground the model in the relevant schema, metrics, and contextual examples. This retrieval-augmented generation should be selective, drawing only on validated documentation and previous queries. Self-consistency strategies let the AI generate several candidate queries and select the best one after rigorous checks of schema validity, metric usage, and estimated cost.
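A simplified sketch of self-consistency selection, again using `sqlite3` as the engine. The candidate SQL strings are assumed to come from sampling the model several times. Schema validity is checked by asking SQLite to compile each statement with `EXPLAIN`, metric usage by a plain substring test for the approved expression, and cost by counting full scans in `EXPLAIN QUERY PLAN`; all three are crude stand-ins chosen for illustration, and the function names are hypothetical. Surviving candidates vote with their results, and the cheapest member of the majority wins.

```python
import sqlite3
from collections import Counter


def passes_checks(conn, sql, required_metric):
    """Metric usage (approved expression present) and schema validity (SQLite can compile it)."""
    if required_metric not in sql:
        return False
    try:
        conn.execute(f"EXPLAIN {sql}")  # compiles against the schema without running the query
    except sqlite3.Error:
        return False
    return True


def scan_count(conn, sql):
    """Crude cost proxy: number of full scans in SQLite's query plan."""
    plan = conn.execute(f"EXPLAIN QUERY PLAN {sql}").fetchall()
    return sum(1 for row in plan if row[3].startswith("SCAN"))


def select_by_self_consistency(conn, candidates, required_metric):
    """Keep candidates that pass the checks, then majority-vote on their results."""
    valid = [sql for sql in candidates if passes_checks(conn, sql, required_metric)]
    if not valid:
        return None
    results = {sql: tuple(conn.execute(sql).fetchall()) for sql in valid}
    winning_result, _ = Counter(results.values()).most_common(1)[0]
    agreeing = [sql for sql in valid if results[sql] == winning_result]
    # Among candidates that agree with the majority, prefer the cheapest plan.
    return min(agreeing, key=lambda sql: scan_count(conn, sql))
```

Voting on executed results rather than on SQL text lets syntactically different but equivalent queries reinforce each other.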

Query planning is another critical lever for controlling cost and performance. Before executing a query, the AI should estimate its cost from warehouse metadata, sample data when appropriate, and cache stable aggregates. Frequently requested metrics can be materialized in governed data marts, and service level objectives (SLOs) for latency and freshness should be established. When a query would touch very large datasets, the AI should fail gracefully, returning partial results where necessary.
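The planner below is a hypothetical sketch of these rules: it estimates scan size from assumed table metadata (`TABLE_BYTES`), serves fresh cached aggregates, refuses queries over a byte budget, and falls back to a caller-supplied sampling function to return a partial result. The class, its parameters, and the size figures are illustrative, not any warehouse's API.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# Hypothetical warehouse metadata: approximate table sizes in bytes.
TABLE_BYTES = {"events": 5_000_000_000_000, "orders": 40_000_000_000}


@dataclass
class Planner:
    max_bytes: int                  # per-query scan budget
    ttl_seconds: float = 900.0      # freshness window for cached aggregates
    _cache: dict = field(default_factory=dict, init=False, repr=False)

    def estimate_bytes(self, tables: list[str]) -> float:
        """Pessimistic estimate: every referenced table is fully scanned.

        Tables missing from the metadata count as unbounded, so they can
        never slip under the budget.
        """
        return sum(TABLE_BYTES.get(t, math.inf) for t in tables)

    def run(self, key: str, tables: list[str], execute: Callable[[], Any],
            sample: Optional[Callable[[], Any]] = None) -> dict:
        now = time.monotonic()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self.ttl_seconds:
            return {"status": "cached", "result": hit[1]}
        if self.estimate_bytes(tables) > self.max_bytes:
            if sample is not None:
                # Degrade gracefully: answer from a sample and flag it as partial.
                return {"status": "partial", "result": sample()}
            return {"status": "over_budget", "result": None}
        result = execute()
        self._cache[key] = (now, result)
        return {"status": "ok", "result": result}
```

Partial results are deliberately not cached, so a later run with a larger budget can still produce and store the full answer.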

An evaluation harness is also vital for validating the AI’s accuracy. Using a collection of real business questions paired with correct SQL and expected results, organizations can measure the precision of generated SQL, execution success rates, adherence to data policies, and numerical accuracy. Tracking regressions whenever models, prompts, or the semantic layer change keeps the user experience consistent and the analysis correct.
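A minimal harness sketch, assuming each test case stores a question, its reference SQL, and the SQL the assistant generated, with `sqlite3` standing in for the warehouse. It reports execution success rate and result accuracy (ignoring row order, with a tolerance for numeric values) and lists regressions against a previous run. Policy-adherence checks are omitted for brevity; `Case`, `evaluate`, `same_result`, and `regressions` are hypothetical names.

```python
import math
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    question: str
    reference_sql: str   # vetted SQL for the question
    generated_sql: str   # what the assistant produced


def same_result(actual, expected, rel_tol=1e-9):
    """Compare result sets ignoring row order, with a tolerance for numbers."""
    if len(actual) != len(expected):
        return False
    for row_a, row_e in zip(sorted(actual, key=repr), sorted(expected, key=repr)):
        if len(row_a) != len(row_e):
            return False
        for a, e in zip(row_a, row_e):
            if isinstance(a, (int, float)) and isinstance(e, (int, float)):
                if not math.isclose(a, e, rel_tol=rel_tol):
                    return False
            elif a != e:
                return False
    return True


def evaluate(conn, cases):
    """Run every case and report execution rate, accuracy, and failing questions."""
    executed = correct = 0
    failures = []
    for case in cases:
        expected = conn.execute(case.reference_sql).fetchall()
        try:
            actual = conn.execute(case.generated_sql).fetchall()
        except sqlite3.Error:
            failures.append(case.question)  # did not execute at all
            continue
        executed += 1
        if same_result(actual, expected):
            correct += 1
        else:
            failures.append(case.question)  # executed but returned wrong numbers
    n = len(cases)
    return {"execution_rate": executed / n, "accuracy": correct / n, "failures": failures}


def regressions(baseline_failures, current_failures):
    """Questions that passed in the baseline run but fail after a change."""
    return sorted(set(current_failures) - set(baseline_failures))
```

Comparing results rather than SQL text avoids penalizing a generated query that is written differently from the reference but returns the same numbers.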

Establishing an operational model and risk controls is crucial for running the AI assistant effectively. It should be treated as a shared analytics service, with clear ownership of the semantic layer, prompt templates, and evaluation datasets. High-risk or new metrics should undergo human review before promotion, and there should be a well-defined approval process for adding datasets. Publishing model cards that document capabilities and limitations sets appropriate user expectations.

Logging should be seamlessly integrated into security operations, forwarding audit events to security information and event management (SIEM) systems. This includes tracking denied policy checks, atypical query volumes, and abnormal access to results. Aligning the AI’s control plane with established governance structures can alleviate the burden of change management and enhance user adoption.
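A hypothetical sketch of the event side: audit events serialized as JSON lines for forwarding, plus two simple detectors, one for denied policy checks and one for query-volume spikes against a per-user baseline. The `AuditEvent` fields, action names, and the 3x threshold are assumptions; how events actually reach a SIEM depends on the product in use.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditEvent:
    ts: str
    user: str
    action: str   # assumed values: "query", "policy_denied", "result_download"
    detail: str


def to_json_lines(events):
    """Serialize events as one JSON object per line for a log forwarder."""
    return [json.dumps(asdict(e), sort_keys=True) for e in events]


def alerts(events, baseline_per_user, factor=3.0):
    """Flag denied policy checks and users whose query volume exceeds factor x baseline."""
    found = [f"policy_denied:{e.user}" for e in events if e.action == "policy_denied"]
    volume = Counter(e.user for e in events if e.action == "query")
    for user, count in sorted(volume.items()):
        baseline = baseline_per_user.get(user, 1)  # assume 1 query if no history exists
        if count > factor * baseline:
            found.append(f"volume_spike:{user}:{count}")
    return found
```

In practice the SIEM would usually run detections like these itself; the point of the sketch is that the assistant must emit events rich enough (user, action, detail, timestamp) to support them.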

To measure return on investment (ROI) in concrete business terms, organizations can track metrics such as the cycle time from question to validated answer, the percentage of self-serve inquiries resolved without analyst intervention, and the reduction in duplicative or conflicting metrics in circulation. The cost per successful analytical session and the incremental margin impact of decisions informed by AI-generated insights are also critical. Savings from avoided data quality incidents and from avoided unauthorized access should be weighed alongside productivity gains.
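Several of these metrics reduce to simple arithmetic over session records. The sketch assumes a hypothetical `Session` record with cycle time, outcome, analyst involvement, and cost, and defines the self-serve rate as the share of all sessions resolved without an analyst, which is one reasonable reading among several.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Session:
    cycle_minutes: float      # question asked -> validated answer
    succeeded: bool           # ended with a validated answer
    analyst_involved: bool    # needed a human analyst to resolve
    cost_usd: float           # model + warehouse spend for the session


def roi_summary(sessions):
    """Compute cycle time, self-serve rate, and cost per successful session."""
    successes = [s for s in sessions if s.succeeded]
    if not successes:
        raise ValueError("no successful sessions to measure")
    self_served = [s for s in successes if not s.analyst_involved]
    total_cost = sum(s.cost_usd for s in sessions)
    return {
        "median_cycle_minutes": median(s.cycle_minutes for s in successes),
        "self_serve_rate": len(self_served) / len(sessions),
        # Failed sessions still cost money, so their spend is charged to the successes.
        "cost_per_successful_session": total_cost / len(successes),
    }
```

Dividing total spend by successful sessions, rather than by all sessions, keeps a rising failure rate visible as a rising unit cost.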

As organizations increasingly seek streamlined data analysis solutions, the potential for AI in this domain is substantial. By adhering to governance principles while implementing robust AI frameworks, companies can accelerate their journey towards effective decision-making, ensuring a faster and safer analytical process at scale.
