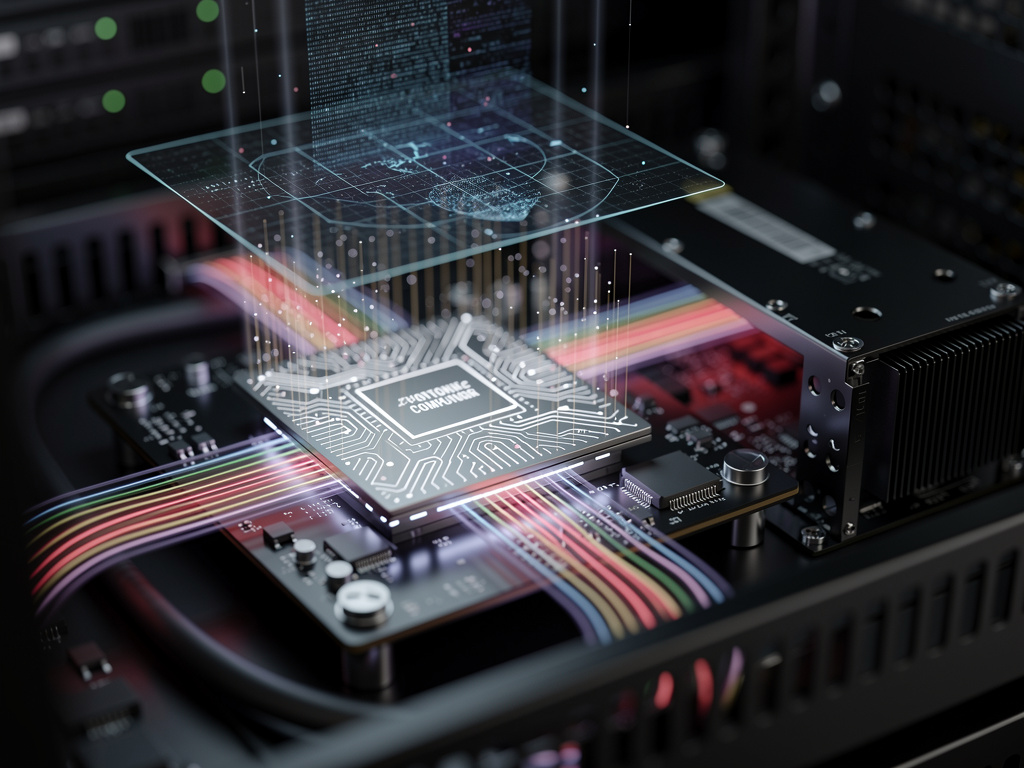

In a significant leap for artificial intelligence, a team of researchers from Shanghai Jiao Tong University and Tsinghua University has introduced an optical AI chip named LightGen. In a recent paper published in the journal Science, the researchers report that the chip outperforms Nvidia’s A100 GPU by roughly 100 times in both speed and energy efficiency. By using light rather than electrons to process complex generative tasks, LightGen is positioned to reshape performance benchmarks in AI and tackle the growing energy demands of large models.

LightGen’s design incorporates 3D-stacked photonic neurons, facilitating parallel processing that mimics neural networks but operates at optical speeds. Early testing has shown that the chip can achieve a staggering 35,700 trillion operations per second (TOPS) and 664 TOPS per watt, dramatically surpassing the capabilities of the A100, particularly in image and video synthesis tasks.

The chip marks a bold foray into the realm of photonic computing, leveraging the innate speed of photons for data processing. Unlike traditional silicon-based chips that depend on electrons—subject to heat dissipation and energy loss—LightGen uses light pulses to convey information, allowing for faster data transmission and significantly reduced energy consumption. This shift addresses one of the critical challenges in AI development: the escalating energy needs of training and deploying large-scale models.

Industry experts are closely monitoring the implications of this advancement, especially in light of ongoing U.S.-China tech tensions and restrictions on semiconductor exports. The efficiency of LightGen in handling demanding AI workloads suggests that it could revolutionize energy-intensive applications, particularly in fields such as autonomous driving and medical imaging, where speed and efficiency are paramount. Reports indicate that LightGen performs matrix multiplications—essential for AI algorithms—up to 100 times faster than the A100 in controlled laboratory environments.

However, skepticism remains regarding the chip’s practical applicability. Critics argue that while LightGen excels in narrowly defined tasks, it may encounter challenges with broader AI workloads requiring versatile programming. Nvidia’s A100, a staple in data centers globally, benefits from a well-established ecosystem and software support that photonic chips are still in the process of developing. The A100, launched in 2020, delivers around 312 teraflops in tensor performance, while LightGen’s optical architecture reportedly achieves equivalent computations in a fraction of the time.
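As a rough sanity check on the headline numbers, the figures quoted in this article can be put side by side. The sketch below uses only the values reported above (35,700 TOPS, 664 TOPS per watt, and 312 teraflops for the A100) and assumes, purely for illustration, that one TOPS and one teraflop can be compared directly. In practice they often refer to different precisions and operation types, so the result is an order-of-magnitude indication rather than a benchmark. The function and variable names are hypothetical.

```python
# Figures as quoted in the article; none are independently verified here.
LIGHTGEN_TOPS = 35_700.0        # reported trillion operations per second
LIGHTGEN_TOPS_PER_WATT = 664.0  # reported energy efficiency
A100_TENSOR_TFLOPS = 312.0      # A100 tensor throughput cited in the article


def throughput_ratio(chip_tops: float, baseline_tflops: float) -> float:
    """Raw throughput ratio, assuming TOPS and TFLOPS are comparable units."""
    return chip_tops / baseline_tflops


def implied_power_watts(tops: float, tops_per_watt: float) -> float:
    """Power draw implied by a throughput figure and an efficiency figure."""
    return tops / tops_per_watt


ratio = throughput_ratio(LIGHTGEN_TOPS, A100_TENSOR_TFLOPS)
power = implied_power_watts(LIGHTGEN_TOPS, LIGHTGEN_TOPS_PER_WATT)

print(f"Throughput ratio vs. A100: about {ratio:.0f}x")
print(f"Implied LightGen power at peak: about {power:.0f} W")
```

On these assumptions, the reported throughput works out to a little over 100 times the cited A100 figure, which is consistent with the article's headline claim, and the two LightGen numbers together imply a peak power draw in the tens of watts.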

As discussions regarding LightGen unfold, the geopolitical context is crucial. The chip’s introduction intensifies the tech rivalry between the U.S. and China, particularly as the U.S. tightens restrictions on AI hardware exports. Chinese companies, facing barriers in acquiring Nvidia’s latest GPUs, are increasingly pivoting toward homegrown technologies. Online discussions reflect a mix of enthusiasm and caution, with commentators speculating on how photonic chips could reshape the global AI landscape.

In response to this competitive pressure, Nvidia is also investing in optical technologies, evidenced by developments in a separate optical quantum chip. This shift indicates a recognition within the industry of photonics as a viable pathway for future advancements, potentially leading to hybrid systems that merge silicon and optical elements for optimal performance.

While the potential of LightGen is evident, significant technical hurdles remain. Integrating optical components with existing silicon infrastructure demands breakthroughs in materials science, particularly in developing reliable photonic-electronic interfaces. The LightGen team has acknowledged these challenges, noting in their research that although the chip excels in analog computations, digital precision tasks may still favor traditional GPUs.

Energy efficiency is another focal point, with analog AI chips reportedly operating at speeds up to 1,000 times faster while consuming less power. This aligns with LightGen’s claims and could prove transformative for hyperscale data centers grappling with soaring electricity costs. As interest in optical AI technology grows, collaborations between academia and industry are anticipated to accelerate commercialization efforts.

Looking ahead, the integration of LightGen into real-world applications presents intriguing possibilities. For instance, in autonomous vehicles, the chip’s speed could significantly enhance real-time image processing for object detection, thereby improving safety and decision-making. In healthcare, faster generative models could facilitate accelerated research timelines for drug discovery and medical imaging analysis.

Despite the promise shown in initial trials, challenges regarding compatibility with existing software ecosystems, such as Nvidia’s CUDA, remain. Analysts predict that while initial deployments will likely focus on niche markets, broader adoption could occur as the technology matures. The economic implications of LightGen’s emergence could bolster China’s semiconductor self-sufficiency and disrupt Nvidia’s market dominance in AI accelerators.

In summary, while LightGen does not pose an immediate threat to Nvidia’s standing, it signals a burgeoning shift towards optical computing within the AI sector. With ongoing advancements and collaborations, the trajectory of AI technology suggests a future where light could drive the next era of intelligence, compelling the industry to adapt or risk obsolescence.
