A six-ton tiltrotor unmanned aerial vehicle successfully completed its maiden flight in Deyang, Sichuan province, on Sunday, marking a significant milestone in China’s advancements in vertical-lift aviation. This development underscores the country’s ambitions to enhance its capabilities in drone technology.

In parallel with technological advancements, the discourse around the governance of artificial intelligence (AI) is gaining momentum. AI’s unique power to execute a multitude of human tasks brings both transformative potential and significant risks. While past revolutionary technologies like electricity and automobiles reshaped civilization without stringent global oversight, AI’s capacity for misuse—such as fraud, cybercrimes, and data manipulation—necessitates a robust regulatory framework.

The BRICS Leaders’ Statement on the Global Governance of Artificial Intelligence, issued in Rio de Janeiro in July, highlighted the urgent need for “safe, ethical, trustworthy, and responsible AI development for the benefit of all.” The statement called for systems capable of detecting and preventing malicious use, reflecting an increasing global consensus that AI must serve humanity rather than endanger it.

AI governance must be a global endeavor, as its benefits and potential misuses transcend national borders. While countries such as the United States and China are formulating their own AI regulations, these fragmented efforts often lead to duplication and hinder standardization. For instance, the U.S. CHIPS and Science Act of 2022 includes measures aimed at limiting China's access to advanced chipmaking capacity, and with it China's progress in high-performance AI models. Such restrictions raise questions about the commitment to an inclusive approach toward technology, even as Chinese companies continue to make significant strides in AI development.

The implications of AI extend beyond industry competition, as the potential for Artificial General Intelligence (AGI) looms on the horizon. This development poses fundamental questions about the integration of man and machine. As AI systems begin to communicate autonomously and integrate with human biological systems, pressing issues arise: Will machines ultimately replace humans? What does it mean to be human? How can we ensure that humans control technology rather than the reverse? These existential queries are too complex for any single country to address, necessitating a collaborative global effort.

Prominent figures in the field, such as Geoffrey Hinton, a Nobel laureate and pioneer of deep learning, have cautioned against the rapid advancement of AI technologies, describing them as an "existential threat." His warnings underscore the need for balanced progress that prioritizes safety alongside innovation. Zhang Jun, secretary-general of the Boao Forum for Asia, echoed these concerns at a recent UN Security Council debate, stressing that the impact of AI could exceed the limits of human cognition. He advocated "people-oriented" and "AI for good" principles to ensure that the technology does not spiral out of control, particularly in conflict scenarios.

India’s approach to AI governance mirrors this caution, as the country released its own AI Governance Guidelines in November. These guidelines aim to foster innovation while safeguarding individuals and society from potential harms associated with AI technologies.

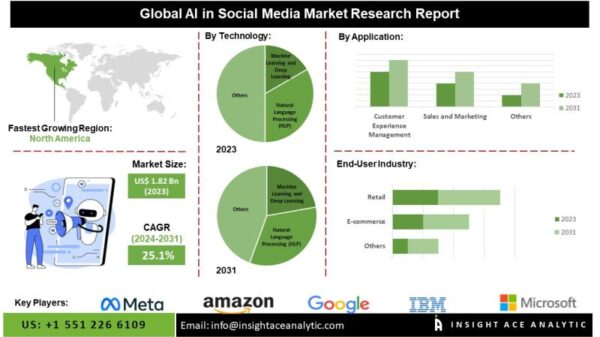

The ongoing global debate on AI governance must address three critical concerns. First, it is vital to prevent and reverse the widening AI divide within and among nations. With projections suggesting that AI could add $15.7 trillion to the global economy by 2030, it is imperative that these benefits not remain concentrated in wealthier nations. Otherwise, the Global South risks greater inequality and weakened digital sovereignty.

Second, as AI usage escalates, global cooperation on energy conservation becomes increasingly urgent. A new wave of greenhouse gas emissions driven by expanding data centers and computing power must be curbed, and strategies should be developed to maximize "AI per watt of energy" so as to limit the environmental impact.

Lastly, the fundamental question of what AI should be used for must remain central to its governance. Should AI advance world peace or be leveraged for warfare? Is its role to help achieve the UN Sustainable Development Goals, or to serve primarily the interests of the affluent? Whether AI is employed to protect the environment or to exacerbate geopolitical tensions represents a critical juncture in the technology's trajectory.

At the UN headquarters in New York, a bronze sculpture with the message “Let us beat our swords into plowshares” resonates deeply amid current AI discussions. While some factions may seek to weaponize AI as a tool for conflict, it is crucial for the global community to strive to transform this powerful technology into a means for fostering prosperity and happiness for all.
