A recent study argues that the risks of artificial intelligence (AI) extend beyond technical flaws to deeper cultural and developmental issues that shape how these systems behave. Conducted by a consortium of international scholars from institutions including Ludwig Maximilian University of Munich, the Technical University of Munich, and the African Union, the research examines AI through the lens of international human rights law. Its findings have direct implications for security leaders tasked with deploying AI across diverse populations and regions.

The study finds that AI systems carry cultural and developmental assumptions at every stage of their lifecycle. Training data often reflects dominant languages, prevailing economic conditions, social norms, and historical narratives. Design choices, in turn, may inadvertently encode expectations about infrastructure, behaviors, and societal values. These embedded assumptions can significantly affect system accuracy and safety; for example, language models perform best in widely represented languages but lose reliability in under-resourced ones. Similarly, vision systems trained in industrialized contexts may misinterpret behaviors in regions with differing traffic patterns or social customs, leading to increased error rates and uneven exposure to harm.

From a cybersecurity perspective, these systemic vulnerabilities can widen the attack surface, creating predictable failure modes across various regions and user groups. As AI increasingly shapes cultural expressions, religious interpretations, and historical narratives, misrepresentation can also have serious repercussions. Generative tools that summarize belief systems or reproduce artistic styles may distort cultural identities, which can erode trust in digital systems. Communities that feel misrepresented might disengage from these systems or actively challenge their legitimacy. In politically sensitive environments, distorted cultural narratives can exacerbate disinformation, polarization, and identity-based targeting.

Security teams that focus on information integrity and influence operations confront these risks firsthand. The study argues that cultural misrepresentation is not merely an ethical concern but a structural weakness that adversaries can exploit.

The research also underscores how the right to development shapes AI risk. AI infrastructure relies heavily on resources such as computational power, stable electricity, data access, and skilled labor, which are not evenly distributed around the world. Systems designed on the assumption of reliable connectivity or standardized data pipelines often fail in regions lacking these conditions. Applications in healthcare, education, and public services have shown significant performance drops when deployed outside the contexts in which they were developed. Such failures can expose organizations to cascading risks: decision support tools may generate flawed outputs, automated services might exclude certain population segments, and security monitoring systems could overlook local signals embedded in specific cultural and linguistic contexts.

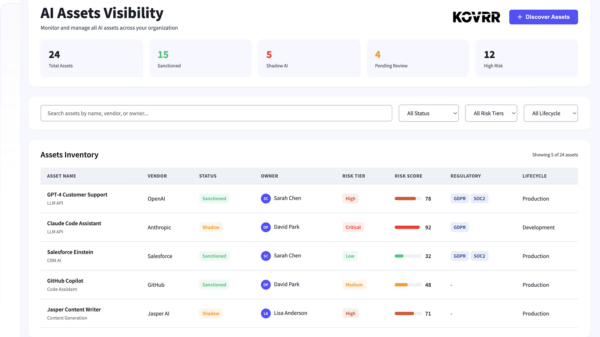

The study identifies a critical gap in existing AI governance frameworks, which often focus on bias, privacy, and safety while neglecting cultural and developmental risks. Current accountability structures can be fragmented, making it hard to determine who is responsible for cultural and developmental harms that accumulate across different systems and vendors. The situation resembles third-party and systemic risk in cybersecurity: individual controls do little to reduce exposure when the broader ecosystem perpetuates the same underlying assumptions.

Another significant finding addresses the epistemic limits of AI systems. These models operate based on statistical patterns and often lack awareness of absent data, which can include cultural knowledge, minority histories, and local practices that are typically underrepresented in training sets. This limitation can adversely affect detection accuracy, as threat signals expressed through local idioms or cultural references may receive weaker responses from AI systems. Consequently, automated moderation and monitoring tools may inadvertently suppress legitimate expressions while failing to identify coordinated abuses.

The research connects the concepts of cultural rights with system integrity and resilience, emphasizing the importance of community involvement in decisions regarding how their data, traditions, and identities are represented within AI systems. When communities are excluded from these discussions, trust and cooperation can diminish, ultimately weakening compliance with security controls. Systems perceived as culturally alien or extractive may face resistance that hampers broader security objectives.

Overall, the findings illuminate how cultural rights and developmental conditions are intricately tied to the performance and reliability of AI systems, as well as the risks they pose to various populations. Security leaders must consider these dimensions if they aim to effectively navigate the complexities of AI deployment in an increasingly interconnected world.
