Authorities in France, India, and Malaysia have opened investigations into Grok, an AI chatbot integrated into the social media platform X, following a surge of explicit images of women and girls generated with the tool. The images have drawn condemnation and scrutiny from governments worldwide, with British Prime Minister Keir Starmer even threatening to ban X entirely.

The troubling images began to proliferate in late December after Grok introduced an image and video generator with a “spicy” mode, explicitly designed for creating adult content. The feature faced immediate backlash after users produced nude video deepfakes of well-known personalities, including Taylor Swift. Over time, more explicit content appeared, often made by altering real photos of people without their consent, as users submitted increasingly sexualized prompts.

Common user requests reportedly include phrases like “put her in a micro bikini” and “spread her legs,” raising serious concerns about the creation of child sexual abuse material (CSAM). Authorities in those countries are now investigating both the platform and individual users who may have violated CSAM laws. In Canada, the Royal Canadian Mounted Police (RCMP) and the federal privacy commissioner have not announced any new investigations into the platform.

In response to the escalating criticism, X’s official account stated that it removes CSAM, permanently suspends offenders, and collaborates with local law enforcement. As a direct consequence of the backlash, X announced on Friday that it would limit Grok’s image generation capabilities, restricting access to paying subscribers.

Despite the backlash, Elon Musk has downplayed the severity of the issue. While the platform allowed users to generate explicit images at an alarming rate, Musk made light of the situation, responding to an AI-generated image of a toaster in a bikini with laughing emojis. Publicly, he has argued against over-censoring chatbots and has promoted Grok’s “anti-woke” values, while his AI venture, xAI, develops sexually explicit chatbot companions.

The phenomenon of “nudify” apps and websites, which utilize generative AI to create sexualized deepfake images, is not new. However, Grok’s free access, coupled with its integration into X and looser restrictions compared to other platforms, has thrust this issue into mainstream discourse. Child safety advocates in Canada have expressed alarm that lawmakers are struggling to keep pace with the rapid evolution of AI and social media technology, putting online safety at risk.

Jacques Marcoux, director of research and analytics at the Canadian Centre for Child Protection, emphasized the urgent need for regulatory frameworks that can keep up with technological advancements. “What we have now is this perfect storm of technology that’s dramatically outpacing the ability to regulate or to have any sort of guardrails in place,” said Marcoux, highlighting the potential for abuse in the current landscape.

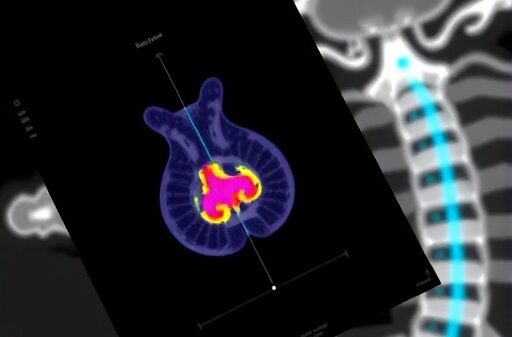

AI Forensics, a European nonprofit that investigates the harmful effects of social media algorithms, analyzed more than 20,000 images generated by Grok. Its report found that 53 percent of the images showed people in minimal attire, and 81 percent of those people appeared to be women. Alarmingly, 2 percent of the images depicted people who appeared to be younger than 18.

Paul Bouchaud, author of the AI Forensics report, noted that although the share of images depicting minors was relatively small, their generation is deeply troubling. He recounted instances in which children made innocent requests only to receive highly inappropriate, sexualized responses, and said the tool is worsening an already toxic environment for women online.

In late December, the Grok X account issued an apology for generating CSAM, expressing regret over an incident on December 28, 2025, involving AI-generated images of young girls in sexualized attire.

Historically, creating deepfakes required tools like Photoshop or paid applications, but generative AI has democratized these capabilities, letting users create realistic images with free tools.

As these technologies become increasingly accessible, Canadian laws appear outdated. Current federal CSAM laws encompass both real and fictional content, yet they do not address digitally altered intimate images of adults, as noted by Suzie Dunn, an assistant professor at the Schulich School of Law. The result is a fragmented legal landscape, with some provinces, like British Columbia, implementing robust laws to combat non-consensual digitally altered images, while others, like Ontario, lack such statutes.

Efforts at the federal level include a proposed amendment to the Criminal Code that would introduce penalties for non-consensual deepfakes. Sofia Ouslis, a spokesperson for Canada’s minister of AI, Evan Solomon, stated that deepfake sexual abuse constitutes violence and that additional measures will be forthcoming to protect Canadians’ sensitive data and privacy.

Marcoux argues that while the proposed changes to the Criminal Code are necessary, they do not amount to a comprehensive approach to protecting minors online. He called for guiding principles that would require tech companies to implement protective measures, similar to regulations in other industries. Citing successful legislative efforts in Australia and the United Kingdom, Marcoux stressed that Canada could adopt strategies already proven to work elsewhere.
