Artificial intelligence is increasingly being integrated into healthcare settings, helping with tasks ranging from writing physicians’ notes to making patient recommendations. However, new research from Northeastern University highlights a critical concern: AI systems, including large language models (LLMs), can perpetuate racial biases embedded in their training data, shaping outputs in ways that may not be apparent to users. The study aims to decode how LLMs arrive at their outputs, shedding light on when a patient’s race may be inappropriately influencing their recommendations.

Hiba Ahsan, a Ph.D. student and lead author of the study, points to earlier research showing that Black patients are often less likely than white patients to receive pain medication, even when they report comparable levels of pain. Ahsan warns that AI models could replicate this bias and make potentially harmful recommendations based on race.

In some medical contexts, considering race can be clinically important. For instance, gestational hypertension is more prevalent among people of African descent, while cystic fibrosis occurs more frequently in Northern Europeans, according to the Mayo Clinic. However, Ahsan notes that many race-related associations in LLMs reflect prejudice rather than clinical relevance, and these can lead to outcomes that harm patient care.

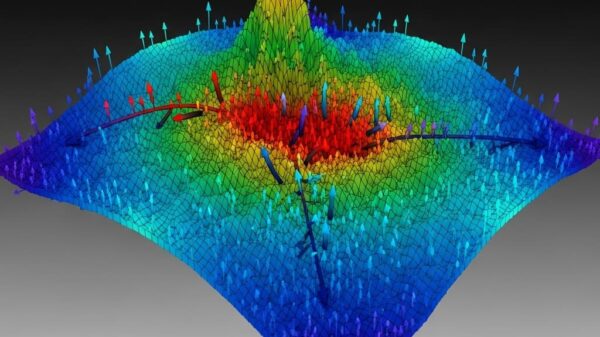

The researchers used a tool called a sparse autoencoder to examine the intricate, often opaque inner workings of LLMs. Ahsan explains that these models compress complex inputs through a process called encoding, producing intermediate numerical representations known as “latents.” These numbers are meaningful to the model but difficult for humans to interpret.

Using the sparse autoencoder, Ahsan and her advisor Byron Wallace, a machine learning expert, aimed to translate these latents into concepts humans can understand. The tool can indicate whether a particular latent is associated with race or another identifiable characteristic. As Ahsan describes it, if the autoencoder detects a latent related to race, that signals race is influencing the model’s output.
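The article does not describe the architecture, dimensions, or training setup the researchers used. As a hedged sketch of the general technique: a sparse autoencoder is typically trained to reconstruct an LLM's hidden-state vectors through a wider layer of latents, with an L1 penalty that keeps most latents at zero for any given input, so each remaining active latent is easier to associate with a single concept. The NumPy class below is hypothetical, uses made-up sizes and untrained random weights, and shows only the forward pass and loss; it is not the researchers' code.

```python
import numpy as np


class SparseAutoencoder:
    """Minimal sparse autoencoder over LLM hidden-state vectors (illustrative sketch)."""

    def __init__(self, d_model: int, d_latent: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Hypothetical sizes: d_latent is usually much larger than d_model,
        # giving an overcomplete dictionary of candidate features.
        self.W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), size=(d_model, d_latent))
        self.b_enc = np.zeros(d_latent)
        self.W_dec = rng.normal(0.0, 1.0 / np.sqrt(d_latent), size=(d_latent, d_model))
        self.b_dec = np.zeros(d_model)

    def encode(self, x: np.ndarray) -> np.ndarray:
        # ReLU keeps latents non-negative; training with the L1 term in loss()
        # would push most of them to exactly zero for any given input.
        return np.maximum(0.0, (x - self.b_dec) @ self.W_enc + self.b_enc)

    def decode(self, z: np.ndarray) -> np.ndarray:
        # Reconstruct the original hidden state from the latents.
        return z @ self.W_dec + self.b_dec

    def loss(self, x: np.ndarray, l1_coeff: float = 1e-3):
        # Training objective: reconstruction error plus an L1 sparsity penalty.
        z = self.encode(x)
        x_hat = self.decode(z)
        recon = np.mean(np.sum((x - x_hat) ** 2, axis=-1))
        sparsity = np.mean(np.sum(np.abs(z), axis=-1))
        return recon + l1_coeff * sparsity, z


if __name__ == "__main__":
    # Random stand-in for hidden states from 4 tokens of a 16-dimensional model.
    hidden = np.random.default_rng(1).normal(size=(4, 16))
    sae = SparseAutoencoder(d_model=16, d_latent=64)
    total, latents = sae.loss(hidden)
    print(latents.shape)  # (4, 64)
```

In practice the weights would be fit by gradient descent on this loss over many hidden states, and individual latents are then typically interpreted by examining the inputs that activate them most strongly.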

The researchers analyzed clinical notes and discharge summaries from MIMIC, a publicly available dataset of de-identified patient records. They focused on notes for patients identified as white or Black. After processing these notes through Gemma-2, an LLM developed by Google, they applied the sparse autoencoder to identify latents corresponding to race.
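The article does not say exactly how race-related latents were picked out. One simple approach, assumed here purely for illustration and not necessarily the researchers' method, is to encode each note with the model and the sparse autoencoder, then compare how strongly each latent activates on notes for Black patients versus notes for white patients. The hypothetical function `rank_group_latents` below does this with NumPy; the activations in its usage example are synthetic, not drawn from MIMIC.

```python
import numpy as np


def rank_group_latents(latents, in_group, top_k=5):
    """Rank SAE latents by how much more strongly they fire for one group of notes.

    latents:  (n_notes, n_latents) array of SAE activations, e.g. averaged
              over each note's tokens (an assumption for this sketch).
    in_group: boolean array of length n_notes; True marks the group of interest.
    Returns the indices of the top_k latents with the largest
    mean-activation gap, together with those gaps.
    """
    latents = np.asarray(latents, dtype=float)
    in_group = np.asarray(in_group, dtype=bool)
    gap = latents[in_group].mean(axis=0) - latents[~in_group].mean(axis=0)
    order = np.argsort(gap)[::-1][:top_k]  # largest gap first
    return order, gap[order]


if __name__ == "__main__":
    # Synthetic activations only: 100 notes, 20 latents, no real patient data.
    rng = np.random.default_rng(0)
    acts = rng.random((100, 20))
    labels = np.arange(100) < 50  # first 50 notes form the group of interest
    acts[labels, 7] += 2.0        # plant one group-associated latent
    idx, gaps = rank_group_latents(acts, labels, top_k=3)
    print(idx[0])  # 7
```

A latent flagged this way would still need inspection, for example by reading the note passages that activate it most, before concluding that it encodes race rather than a correlated clinical factor.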

The findings confirmed that racial biases were embedded in the LLM. The autoencoder found that latents associated with Black patients frequently appeared alongside stigmatizing concepts such as “incarceration,” “gunshot,” and “cocaine use.” While racial bias in AI is already well documented, this research offers a rare look at the internal elements that shape LLM responses.

Ahsan emphasizes the challenges of interpretability in LLMs, describing them as “black boxes” that obscure the factors leading to specific decisions. Utilizing a sparse autoencoder to peer into these systems offers a pathway for physicians to discern when a patient’s race may be improperly factored into AI recommendations. Increased transparency could help doctors intervene and mitigate bias or seek alternative solutions, such as retraining the AI on more representative datasets.

Wallace acknowledges that the team did not invent sparse autoencoders, but applying them in clinical settings is pioneering work. He asserts, “If we’re going to use these models in health care and want to do it safely, we probably need to improve the methods for interpreting them.” The sparse autoencoder method represents a significant step in addressing the interpretability challenges inherent in AI systems.

As AI continues to play a larger role in healthcare, the implications of this research are profound. With the ability to identify and understand biases in AI recommendations, healthcare providers can work towards ensuring more equitable treatment and decision-making, paving the way for advancements that prioritize patient welfare over algorithmic convenience.
