Recent incidents underscore the intertwined challenges of education, artificial intelligence (AI), and security, and they illustrate the dangers of relying on AI technology. This week, a graduate student submitted a thesis proposal citing a social theory that does not exist, a failure known as AI hallucination. In another case, an AI-powered security camera at a school flagged a gun that was not there, prompting a hasty police response. A university email account also began suggesting lengthy replies padded with irrelevant information, making routine communication harder. Each incident exposes the same underlying risk: inaccuracies in AI models can have serious consequences, putting academic credibility, safety, and trust at stake.

The author of this article uses AI models such as ChatGPT and Gemini daily and set out to explore the flaws built into these systems. A recent attempt to add a friend to a group photo with Gemini exposed significant gaps in the model’s grasp of context. The initial prompt, “add my friend to this photo,” gave the model little context to work with, and the result would have been unacceptable from a human designer.

When the author refined the command to “add my friend to the line of people and don’t change any faces or features,” the AI followed the instruction only in part: it rendered the friend at an unrealistic seven feet tall and altered other faces in the photo. A follow-up request to keep everyone the same height made things worse, producing multiple copies of the friend at exaggerated sizes and dropping one person from the image entirely.

These errors illustrate four distinct problems in the AI’s processing:

1. An early commitment error, or “salience latch,” where the model anchors on the wrong subject and fails to adjust its reasoning.

2. Height-scaling errors caused by misinterpreting the prompt.

3. A failure to preserve existing elements, which resulted in altered faces.

4. A resource-allocation shortcut that erased one person from the image, as the AI cut back on processing to keep the picture coherent.

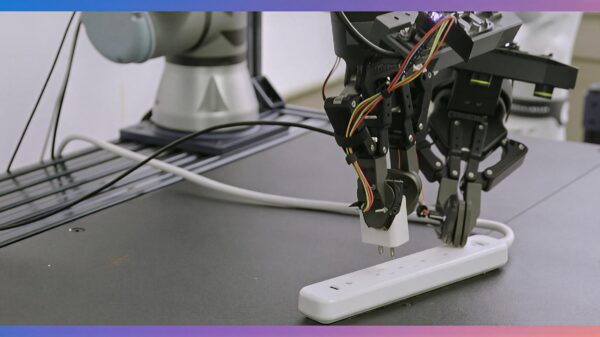

The author faced no serious repercussions from this casual editing exercise, but the same failures matter far more in contexts such as autonomous driving or customer service. Tesla’s self-driving technology relies solely on cameras and is prone to similar risks. By forgoing costlier spatial sensors such as LiDAR, Tesla’s system must infer distances from images rather than measure them directly, which raises the likelihood of catastrophic errors.

The AI’s rendering of the author’s friend as a ten-foot figure points to a critical flaw in using computer vision for driving. A self-driving car that misjudges an object’s size or position could misidentify hazards, with disastrous consequences. Teslas have reportedly been involved in at least 772 fatalities, many of them stemming from the same kind of early commitment error seen in the photo-editing attempts.

If the system prioritizes lane-keeping over obstacle avoidance, it can drive directly into a barrier. That is what happened when a Tesla failed to adapt to construction changes on a highway, resulting in a serious accident. By contrast, Waymo’s self-driving taxis have not been involved in comparably serious accidents, a record the author attributes to their comprehensive sensor suite of LiDAR, radar, and multiple cameras.

This raises important questions about the trade-offs in AI technology. Tesla’s camera hardware costs less than $1,000, while Waymo invests roughly $60,000 in sensors. The lower cost of entry comes at the price of safety and reveals a troubling reality: when an AI system makes a wrong call early, it can carry out the flawed plan with alarming precision.

Humans can rely on intuition to double-check and clarify information, but AI models typically treat uncertainty as a problem to eliminate. The result is decisions that favor computational efficiency over accuracy, which makes these systems harder to interact with and to rely on. As the examples show, the problems are systemic: they arise from the probabilistic nature of AI models and from their inability to self-correct once they latch onto the wrong elements.
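The early commitment problem can be illustrated with a toy example. The sketch below is a hypothetical illustration, not a description of how Gemini, ChatGPT, or Tesla’s software actually works: it assumes a made-up two-step model whose probabilities (the `FIRST` and `SECOND` tables, and every label in them) are invented for the demonstration. A greedy decoder commits to the most likely first choice and never revisits it, while an exhaustive search scores every complete path before deciding.

```python
# Hypothetical two-step "model" with invented probabilities.
# FIRST: probability of each initial interpretation of the request.
FIRST = {"wrong_subject": 0.6, "right_subject": 0.4}
# SECOND: probability of each follow-up action, given the first choice.
SECOND = {
    "wrong_subject": {"resize": 0.4, "redraw": 0.3, "drop_person": 0.3},
    "right_subject": {"insert_friend": 0.9, "resize": 0.1},
}


def greedy_decode():
    """Commit to the single most likely option at each step, never revisiting it."""
    first = max(FIRST, key=FIRST.get)
    second = max(SECOND[first], key=SECOND[first].get)
    return (first, second), FIRST[first] * SECOND[first][second]


def exhaustive_decode():
    """Score every complete two-step path and keep the most likely one."""
    paths = [
        ((first, second), FIRST[first] * SECOND[first][second])
        for first in FIRST
        for second in SECOND[first]
    ]
    return max(paths, key=lambda path: path[1])


if __name__ == "__main__":
    print("greedy:    ", greedy_decode())
    print("exhaustive:", exhaustive_decode())
```

Here the greedy decoder latches onto `wrong_subject` because it looks likeliest at the first step (0.6) and ends with a joint probability of about 0.24, while the exhaustive search finds the `right_subject` path at about 0.36. Real models face vastly larger search spaces, which is one reason they rely on greedy or approximate strategies rather than checking every possibility.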

As discussions of AI evolve, it is crucial to recognize that improving models takes more than additional data. The focus should shift toward understanding how early decisions shape everything an AI system does next. Without addressing these fundamental flaws, AI may continue to misinterpret critical information, producing errors with real-world consequences.

David Riedman, PhD, serves as Chief Data Officer at a global risk management firm and is a tenure-track professor, bringing both academic and practical insight to the risks of AI in education and beyond.
