Recent research from a team of scientists, including Meet Raval of the University of Southern California, Tejul Pandit of Palo Alto Networks, and Dhvani Upadhyay of Dhirubhai Ambani University, highlights the complexities of using artificial intelligence for medical classification. Their comprehensive evaluation compared traditional machine learning techniques against modern foundation models and found that classical methods often outperform their more advanced counterparts across a range of medical datasets. The study used four publicly available datasets spanning text and image modalities, and its results emphasize the need for effective adaptation strategies when these powerful AI tools are deployed in critical healthcare applications.

The research revealed a surprising trend: traditional machine learning models, such as Logistic Regression and LightGBM, achieved the best performance on most of the medical classification tasks. Their advantage was clearest on the structured text-based datasets, where the classical models outperformed both zero-shot Large Language Models (LLMs) and Parameter-Efficient Fine-Tuned (PEFT) models. These results challenge the prevailing assumption that foundation models are universally more effective, and they indicate that the performance of such models, including Google's Gemini models, depends on substantial fine-tuning and adaptation.

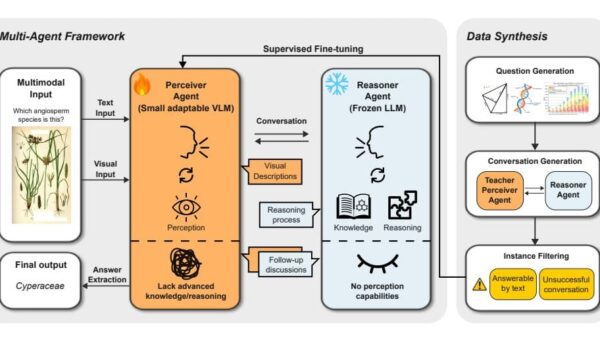

Central to this investigation was a rigorous benchmarking setup that assessed performance across text and image modalities. The researchers kept data splits and evaluation criteria consistent so that models could be compared fairly. By evaluating three distinct model classes for each task (classical machine learning models, prompt-based LLMs/VLMs, and PEFT fine-tuned models), the study provided a robust evaluation framework. Notably, the LoRA-tuned Gemma variants consistently underperformed, suggesting that the minimal fine-tuning they received was not enough for them to generalize.
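To make "parameter-efficient" concrete: in the standard LoRA formulation (general background, not a detail reported in this study), a pretrained weight matrix $W_0$ is frozen and only a low-rank update $BA$ is trained, scaled by $\alpha / r$. The dimensions used in the worked ratio ($d = k = 4096$, $r = 8$) are illustrative assumptions, not the ranks or layer sizes the researchers used.

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\frac{\text{trainable parameters}}{\text{full parameters}} = \frac{r(d + k)}{dk} = \frac{8 \times (4096 + 4096)}{4096 \times 4096} = \frac{65\,536}{16\,777\,216} = \frac{1}{256} \approx 0.39\%
$$

Only $A$ and $B$ are updated, which is what makes the approach cheap; whether such a small update is enough to adapt a general model to a specialized medical label space is the question the study's LoRA results raise.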

In their analysis, the zero-shot LLM/VLM pipelines built on Gemini 2.5 yielded mixed results. Although they struggled with text-based classification, they were competitive on multiclass image categorization, where they matched the ResNet-50 baseline. This contrast underlines the distinct strengths and weaknesses of classical and contemporary models, and further illustrates that established machine learning techniques remain a reliable option for medical classification tasks.

The design of this study is particularly noteworthy for its approach to benchmarking. By addressing limitations of existing methodologies, such as inconsistent evaluation rigor and a lack of alignment across modalities, the researchers built a detailed framework for assessing model performance. Including both binary and multiclass tasks, particularly for medical imaging, extended the evaluation beyond simple classification problems and underscored the enduring relevance of traditional models in medical contexts.
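The core idea of this kind of benchmark (one fixed split and one shared metric for every model class, covering binary and multiclass tasks alike) can be illustrated with a minimal, standard-library-only Python sketch. It assumes a seeded stratified hold-out split and macro-averaged F1 as the common metric; the paper's actual splits, metrics, and models are not reproduced here, and the function names (`stratified_split`, `macro_f1`, `benchmark`), the stand-in models, and the toy data are all hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface for this sketch (not the paper's code): a "trainer"
# takes (X_train, y_train) and returns a predictor for a single example.
Predictor = Callable[[object], str]
Trainer = Callable[[List[object], List[str]], Predictor]


def stratified_split(labels: Sequence[str], test_frac: float = 0.2,
                     seed: int = 42) -> Tuple[List[int], List[int]]:
    """Split indices class by class with a fixed seed, so every model sees the same split."""
    rng = random.Random(seed)
    by_class: Dict[str, List[int]] = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train: List[int] = []
    test: List[int] = []
    for y in sorted(by_class):  # sorted so the split does not depend on insertion order
        idx = list(by_class[y])
        rng.shuffle(idx)
        n_test = max(1, round(len(idx) * test_frac))
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1; applies to binary and multiclass tasks alike."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def benchmark(X: List[object], y: List[str], models: Dict[str, Trainer],
              seed: int = 42) -> Dict[str, float]:
    """Train and score every model on one shared split with one shared metric."""
    train_idx, test_idx = stratified_split(y, seed=seed)
    X_tr, y_tr = [X[i] for i in train_idx], [y[i] for i in train_idx]
    X_te, y_te = [X[i] for i in test_idx], [y[i] for i in test_idx]
    results = {}
    for name, train_fn in models.items():
        predict = train_fn(X_tr, y_tr)
        results[name] = macro_f1(y_te, [predict(x) for x in X_te])
    return results


# --- Hypothetical stand-in models and toy data, for illustration only ---
def majority_baseline(X_train, y_train):
    """Always predicts the most frequent training label."""
    label = Counter(y_train).most_common(1)[0][0]
    return lambda x: label


def nearest_neighbor(X_train, y_train):
    """1-nearest-neighbour classifier on numeric feature tuples (squared Euclidean distance)."""
    def predict(x):
        dists = [sum((a - b) ** 2 for a, b in zip(x, xt)) for xt in X_train]
        return y_train[dists.index(min(dists))]
    return predict


X = [(0.1 * i, 0.0) for i in range(10)] + [(5.0 + 0.1 * i, 5.0) for i in range(10)]
y = ["benign"] * 10 + ["malignant"] * 10
results = benchmark(X, y, {"majority": majority_baseline, "1-nn": nearest_neighbor})
print(results)
```

Because every entry in `models` is just a function from training data to a predictor, a classical model, a wrapper around a prompted LLM, or a fine-tuned model could in principle be slotted in behind the same interface, which is what keeps the comparison fair.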

As the team continues to analyze the implications of their findings, they underscore the importance of effective adaptation strategies in utilizing foundation models. Their work not only offers crucial insights for practitioners in medical AI but also highlights the necessity of thorough evaluation criteria when selecting modeling approaches for healthcare applications. The study advocates for a more nuanced understanding of the conditions under which advanced AI models can be considered viable alternatives to classical methods.

The ongoing exploration into the optimization of PEFT strategies, as well as the potential of multimodal AI in complex medical classification tasks, remains a crucial area for future research. By fostering a comprehensive understanding of how various models perform across diverse data types, the study aims to enhance diagnostic accuracy and ultimately improve patient care outcomes. This research reinforces the idea that while artificial intelligence offers exciting possibilities for healthcare, it is not a panacea, and traditional methods still play a vital role in the landscape of medical classification.

👉 More information

🗞LLM is Not All You Need: A Systematic Evaluation of ML vs. Foundation Models for text and image based Medical Classification

🧠 ArXiv: https://arxiv.org/abs/2601.16549
