Scientists are increasingly harnessing reinforcement learning (RL) to tackle significant challenges in quantum technology. A team led by Marin Bukov of the Max Planck Institute for the Physics of Complex Systems and Florian Marquardt of the Max Planck Institute for the Science of Light has shown how RL can be applied to optimize complex quantum systems. RL is a machine learning approach in which an agent learns to make decisions by trial and error, guided by a reward signal. The approach departs from traditional control strategies and could accelerate the development of practical quantum technologies.

The study covers several key areas. These include quantum state preparation, the design and optimization of high-fidelity quantum gates, and the automated construction of quantum circuits for variational eigensolvers and quantum architecture search. The researchers frame quantum control problems in the RL paradigm. Within that framing, they show that algorithms can learn effective strategies for manipulating quantum states and implementing operations without extensive manual tuning or pre-defined models.

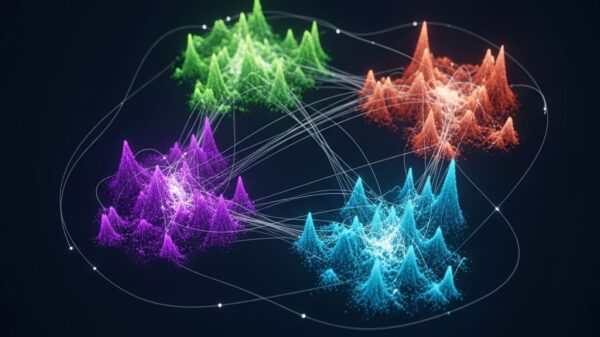

The researchers carefully define the elements of RL (environments, states, actions, and rewards) and show how each maps onto a quantum system. Deep reinforcement learning uses neural networks to represent the agent's policy or value estimates, and it yields agents capable of navigating complex quantum control landscapes. The study also distinguishes between two variants. In model-free RL, the agent learns purely from interaction with the environment. In model-based RL, it additionally learns or exploits a model of the environment's dynamics. This flexibility lets the method be matched to the specific quantum task at hand, helping it overcome limitations of traditional methods.
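
To make this mapping concrete, here is a minimal sketch of how the RL elements could be assigned for single-qubit state preparation. It assumes a toy setting that is not taken from the study:

- The state is the qubit's wavefunction. It is treated as fully observable, which a real experiment would not provide.
- The actions are four fixed rotations.
- The reward is the fidelity with the target state, paid only when the episode ends.

The class name `QubitPrepEnv` and its `reset`/`step` methods are hypothetical. They loosely follow the common Gym-style environment interface.

```python
import cmath
import math


def rx(theta):
    """Single-qubit rotation about the x axis, exp(-i theta X / 2)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]


def rz(theta):
    """Single-qubit rotation about the z axis, exp(-i theta Z / 2)."""
    return [[cmath.exp(-0.5j * theta), 0j], [0j, cmath.exp(0.5j * theta)]]


def apply(gate, psi):
    """Multiply a 2x2 gate onto a two-component state vector."""
    return [gate[0][0] * psi[0] + gate[0][1] * psi[1],
            gate[1][0] * psi[0] + gate[1][1] * psi[1]]


class QubitPrepEnv:
    """Hypothetical toy environment: steer |0> toward a target state.

    State:  the qubit amplitudes (assumed fully observable, unlike a real lab).
    Action: index into a fixed set of four rotations.
    Reward: fidelity with the target, given only when the episode ends.
    """

    ACTIONS = [rx(math.pi / 4), rx(-math.pi / 4), rz(math.pi / 4), rz(-math.pi / 4)]

    def __init__(self, target, max_steps=20, threshold=0.99):
        self.target = target
        self.max_steps = max_steps
        self.threshold = threshold
        self.reset()

    def reset(self):
        self.psi = [1 + 0j, 0j]  # start every episode in |0>
        self.t = 0
        return list(self.psi)

    def fidelity(self):
        overlap = sum(a.conjugate() * b for a, b in zip(self.target, self.psi))
        return abs(overlap) ** 2

    def step(self, action):
        self.psi = apply(self.ACTIONS[action], self.psi)
        self.t += 1
        f = self.fidelity()
        done = f >= self.threshold or self.t >= self.max_steps
        reward = f if done else 0.0  # sparse reward: paid only at the end
        return list(self.psi), reward, done


env = QubitPrepEnv(target=[0j, 1 + 0j])  # target state |1>
done = False
while not done:
    state, reward, done = env.step(0)  # always choose rx(+pi/4)
print(env.t, round(reward, 6))  # 4 1.0
```

Applying `rx(pi/4)` four times drives |0> to |1> up to a global phase, so the episode ends after four steps with a reward close to 1. An RL agent would have to discover such action sequences from the reward signal alone, without being told which rotation to use.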

One notable application is quantum control, particularly the preparation of few- and many-body quantum states. The study also illustrates how RL agents are trained to design and optimize high-fidelity quantum gates, which are crucial for building scalable quantum computers. The work further extends to automated circuit construction, which can speed up the development of quantum algorithms. It also covers entanglement control, a fundamental requirement for quantum computation and communication.

The interactive nature of RL agents is highlighted in quantum feedback control and error correction, where agents act on measurement outcomes as they arrive. The researchers have developed RL-based decoders for quantum error-correcting codes, an important step toward building fault-tolerant quantum computers. Applications in quantum metrology further demonstrate RL's potential to improve parameter estimation and sensing, pushing the limits of precision measurement.
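
The RL decoders discussed in the study target realistic error-correcting codes. As a much smaller illustration of the same idea, the sketch below learns a decoder for the 3-qubit bit-flip repetition code. It rests on these assumptions:

- The noise is classical bit-flip noise with a hypothetical flip probability `P_FLIP = 0.1`.
- The two-bit syndrome is the agent's state.
- The choice of which qubit to flip, if any, is the agent's action.
- The agent receives a reward of 1 when the encoded logical 0 is restored.

Because each episode is a single decision, the update rule is the one-step (contextual-bandit) special case of Q-learning, not a full deep RL method.

```python
import random

P_FLIP = 0.1                 # assumed independent bit-flip probability per qubit
ACTIONS = [None, 0, 1, 2]    # None = do nothing, k = apply X to qubit k
SYNDROMES = [(0, 0), (0, 1), (1, 0), (1, 1)]


def sample_errors(rng):
    """Encode logical 0 as 000 and flip each bit independently with prob P_FLIP."""
    return [1 if rng.random() < P_FLIP else 0 for _ in range(3)]


def syndrome(bits):
    """Parity checks Z0Z1 and Z1Z2 of the repetition code, as classical XORs."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def train_decoder(episodes=5000, alpha=0.1, epsilon=0.2, seed=0):
    """Epsilon-greedy value learning over (syndrome, correction) pairs."""
    rng = random.Random(seed)
    q = {s: [0.0] * len(ACTIONS) for s in SYNDROMES}
    for _ in range(episodes):
        bits = sample_errors(rng)
        s = syndrome(bits)
        if rng.random() < epsilon:
            a = rng.randrange(len(ACTIONS))                       # explore
        else:
            a = max(range(len(ACTIONS)), key=lambda i: q[s][i])   # exploit
        if ACTIONS[a] is not None:
            bits[ACTIONS[a]] ^= 1                 # apply the chosen correction
        r = 1.0 if bits == [0, 0, 0] else 0.0     # reward: logical state restored
        q[s][a] += alpha * (r - q[s][a])          # one-step value update
    return q


def greedy_decoder(q):
    """Read off the learned lookup table: syndrome -> best action index."""
    return {s: max(range(len(ACTIONS)), key=lambda i: q[s][i]) for s in q}


table = greedy_decoder(train_decoder())
print(table)  # {(0, 0): 0, (0, 1): 3, (1, 0): 1, (1, 1): 2}
```

With these settings, the greedy policy recovers the familiar lookup table. It applies no correction for syndrome `(0, 0)`, and for each nonzero syndrome it flips the single qubit that syndrome points to. The appeal of learning the table rather than writing it down by hand is that the same reward-driven loop can, in principle, be applied when the noise model is unknown or the code is too large for a hand-built table.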

At the core of RL is reward maximization. Agents select actions according to a policy, which encodes the probability of choosing each action in a given state, and they aim to maximize the cumulative (typically discounted) reward over an episode. The research emphasizes the importance of designing reward functions that accurately reflect task success. In general, the reward may depend on the current state, the chosen action, and the resulting next state of the environment, which gives agents the feedback they need to refine their strategies.
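
These ingredients can be written down directly. The sketch below is a minimal, hypothetical illustration and not the study's setup. It uses a toy one-dimensional chain of states (positions 0 to 4, with the target at 4) and shows three pieces:

- A softmax policy turns per-action preference scores into probabilities pi(a | s).
- The reward has the general form r(s, a, s').
- The discounted return is the quantity an agent seeks to maximize.

The discount factor, step cost, reward shaping, and preference scores are all illustrative assumptions.

```python
import math
import random

MOVES = [-1, +1]  # action 0 steps left, action 1 steps right
TARGET = 4        # hypothetical goal state on a chain 0..4


def softmax_policy(preferences):
    """Map per-action preference scores h(s, a) to probabilities pi(a | s)."""
    m = max(preferences)                         # subtract max for stability
    weights = [math.exp(h - m) for h in preferences]
    total = sum(weights)
    return [w / total for w in weights]


def sample_action(probs, rng):
    """Draw an action index according to pi(a | s)."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def reward(state, action, next_state, target=TARGET, step_cost=0.01):
    """Hypothetical r(s, a, s'): progress toward the target, an arrival bonus,
    and a per-step cost. The action is unused by this toy rule but kept to
    show the general signature."""
    progress = abs(state - target) - abs(next_state - target)
    bonus = 1.0 if next_state == target else 0.0
    return progress + bonus - step_cost


def discounted_return(rewards, gamma=0.99):
    """G_0 = sum_t gamma**t * r_t, the quantity the agent tries to maximize."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def rollout(preferences, rng, start=0, max_steps=20):
    """Run one episode with the softmax policy and collect the rewards r_t."""
    s, rewards = start, []
    for _ in range(max_steps):
        a = sample_action(softmax_policy(preferences[s]), rng)
        s_next = min(max(s + MOVES[a], 0), TARGET)  # walls at both ends
        rewards.append(reward(s, a, s_next))
        s = s_next
        if s == TARGET:
            break
    return rewards


prefs = {s: [0.0, 1.0] for s in range(TARGET + 1)}  # hypothetical scores favoring "right"
rewards = rollout(prefs, random.Random(0))
print(len(rewards), discounted_return(rewards))
```

In a policy-gradient method such as REINFORCE, the preference scores would be adjusted after each episode so that actions leading to high returns become more probable. That training loop is omitted here.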

The work shows that RL offers an efficient toolbox for designing error-robust quantum logic gates, and that it can even outperform state-of-the-art implementations previously designed by humans. The study reports that RL agents can synthesize single-qubit gates with shorter execution times and a better trade-off between protocol length and speed, potentially enabling real-time operation. In addition, custom deep RL algorithms have produced faster control pulses for superconducting qubits while keeping fidelity and leakage rates comparable to existing gates.
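
A standard figure of merit for gate design is the average gate fidelity between the implemented unitary U and the target V. For a d-dimensional system it is F = (|Tr(V†U)|² + d) / (d(d + 1)). The sketch below is a hypothetical reward for a gate-synthesis agent, not the study's own code. It composes a sequence of single-qubit rotations and scores it against a Hadamard target. It then subtracts an assumed per-gate penalty (`length_penalty`) so that shorter protocols are preferred.

```python
import cmath
import math


def rx(theta):
    """Rotation about x: exp(-i theta X / 2)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]


def rz(theta):
    """Rotation about z: exp(-i theta Z / 2)."""
    return [[cmath.exp(-0.5j * theta), 0j], [0j, cmath.exp(0.5j * theta)]]


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def compose(gates):
    """Unitary of a sequence applied first-to-last in time: U = g_n ... g_2 g_1."""
    u = [[1 + 0j, 0j], [0j, 1 + 0j]]
    for g in gates:
        u = matmul(g, u)
    return u


def average_gate_fidelity(target, u, d=2):
    """F = (|Tr(V^dagger U)|^2 + d) / (d (d + 1)); insensitive to global phase."""
    tr = sum(target[k][i].conjugate() * u[k][i] for i in range(d) for k in range(d))
    return (abs(tr) ** 2 + d) / (d * (d + 1))


def gate_reward(target, gates, length_penalty=0.01):
    """Hypothetical episode reward: fidelity minus a cost per applied gate."""
    return average_gate_fidelity(target, compose(gates)) - length_penalty * len(gates)


HADAMARD = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
            [1 / math.sqrt(2), -1 / math.sqrt(2)]]

seq = [rz(math.pi / 2), rx(math.pi / 2), rz(math.pi / 2)]
print(round(average_gate_fidelity(HADAMARD, compose(seq)), 6),
      round(gate_reward(HADAMARD, seq), 6))  # 1.0 0.97
```

The sequence Rz(pi/2) Rx(pi/2) Rz(pi/2) equals the Hadamard gate up to a global phase of -i, which the trace formula ignores. Its fidelity is therefore 1 and its reward is 1 - 3 × 0.01 = 0.97. The identity, by contrast, scores only 1/3. An agent maximizing this reward is pushed toward both high fidelity and short sequences.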

Despite these advances, the authors acknowledge open challenges. These include scaling RL to larger systems, interpreting the strategies agents learn, and integrating these algorithms with existing experimental platforms. They argue that future research must address these issues to fully realize the potential of RL in quantum technologies. Promising directions are more scalable methods, more interpretable decision-making, and smoother integration with experimental setups.

This body of work shows that RL is not merely a theoretical possibility but a practical tool for advancing quantum technologies, as evidenced by the multiple experimental implementations discussed throughout the study. By outlining key areas for future research, the study underscores the growing role of reinforcement learning in quantum technologies and its potential to drive scientific discovery and technological innovation.
