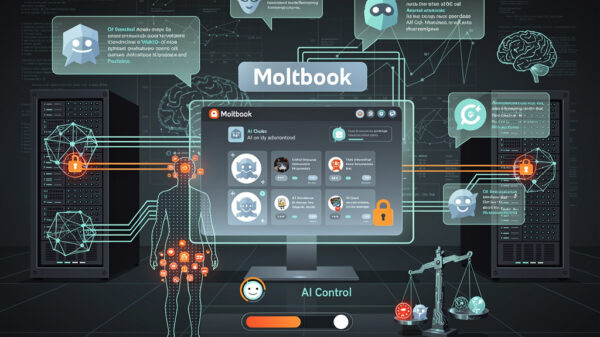

A recent peer-reviewed study published in the journal Science raises alarms over the potential for “swarms” of artificial intelligence (AI) bots to disrupt social media landscapes by spreading misinformation and targeting users. The study, titled “How malicious AI swarms can threaten democracy,” highlights concerns about these AI systems mimicking human behavior in an effort to influence public opinion and threaten democratic processes.

The authors warn that societal instincts, particularly herd mentality, may be exploited by AI swarms that can coalesce around specific narratives. “Humans, generally speaking, are conformist,” said Jonas Kunst, a co-author of the study. “We often don’t want to agree with that, and while individuals vary, there is a tendency to attribute value to what the majority of people do. This is something that can relatively easily be hijacked by these swarms.”

The implications of such AI swarms could be profound. Instead of being guided by genuine community sentiment, users may find themselves influenced by coordinated AI systems acting on behalf of various interests, including unknown individuals, political parties, or state actors. The study suggests that these bots could mimic the behavior of an angry mob, targeting dissenters and driving them off social media platforms.

Currently, more than 50% of online traffic is attributed to automated bots, which typically perform simple and repetitive tasks. However, the next generation of AI bots, powered by large language models (LLMs) similar to those behind popular chatbots such as ChatGPT and Google’s Gemini, is expected to be much more sophisticated. These advanced bots could craft distinct personas, adapt to diverse online communities, and operate undetected.

While the study did not specify when these AI swarms might materialize, it noted that their subtlety would make them hard to detect, implying that their presence could already be more significant than acknowledged. “Swarms could contain thousands, even millions of AI agents,” said lead author Daniel Schroeder, who emphasized that “the more sophisticated these bots are, the less you will actually need.” This poses a unique challenge in the domain of digital communication and information sharing.

AI agents stand in stark contrast to human users, who are limited by their personal lives and commitments. These bots can operate 24/7, pushing narratives without pause and creating what the researchers term “cognitive warfare.” This capability could overwhelm human efforts to counteract misinformation.

In anticipation of this new threat, researchers expect tech companies to enhance account authentication processes. However, they expressed concerns that such measures might unintentionally stifle political dissent, particularly in regions where anonymity is crucial for individuals to voice their opinions against oppressive regimes.

To combat the rise of AI swarms, the researchers recommend several proactive approaches, including monitoring live traffic for unusual patterns that may signify AI activity. They also propose the establishment of an “AI Influence Observatory” to track and respond to these emerging threats. Kunst concluded, “We are with reasonable certainty warning about a future development that might have disproportionate consequences for democracy, and we need to start preparing for that.”
