On Tuesday, Google officially introduced Gemini 3, a significant upgrade that strengthens its position in the AI race even as OpenAI’s ChatGPT continues to dominate the chatbot market. The launch follows a month of speculation and builds on Google’s earlier Gemini models as part of a broader push to bring AI into every corner of its services.

Gemini 3 is built into a range of Google services. Subscribers to the “Pro” or “Ultra” tiers get access to more advanced functions, such as document analysis, personalized travel suggestions, and website design assistance. Google has also made Gemini 3 available for free to college students in the United States.

According to Demis Hassabis, co-founder and CEO of Google DeepMind, this is only the beginning. In a recent interview with Alex Heath for the Sources newsletter, Hassabis discussed future possibilities for the technology, including holding “the entire internet in memory.”

Sources: I’ve heard there’s internal interest in fitting the entire Google search index into Gemini, and that this idea dates back to the early days of Google, when Larry Page and Sergey Brin discussed it in the context of AI. What’s the significance of that if it were to happen?

Hassabis: Yeah. We’re doing lots of experiments, as you can imagine, with long context. We had the breakthrough to the 1 million token context window, which still hasn’t really been beaten by anyone else. There has been this idea in the background from Jeff Dean, Larry, and Sergey that maybe we could have the entire internet in memory and serve from there. I think that would be pretty amazing. The question is the retrieval speed of that. There’s a reason why the human memory doesn’t remember everything; you remember what’s important. So maybe there’s a key there. Machines can have millions of times more memory than humans can have, but it’s still not efficient to store everything in a kind of naive, brute force way. We want to solve that more effectively for many reasons.

This vision is intriguing, but what can users expect from Gemini 3 right now? The model is designed to “think” more deeply than its predecessor, Gemini 2.5, which launched in March. Where Gemini 2.5 prioritized fast responses, Gemini 3 is built to handle depth and nuance, a point Google emphasized in a recent blog post. As a result, users may wait longer for answers, particularly on complex queries.

The focus on nuance allows Gemini 3 to pick up on “subtle clues” in user prompts, whether written or spoken. A modal displayed on gemini.google.com highlights its capabilities, stating, “Gemini 3 Pro is here. It’s our smartest model yet — more powerful and helpful for whatever you need: Expert coding & math help; next-level research intelligence; deeper understanding across text, images, files, and videos.”

My initial tests of Gemini 3 with a Google Pro subscription, using the new model labeled “Thinking,” produced noticeably richer results. The launch also drew praise from a rival: Sam Altman, CEO of OpenAI, posted on X on launch day, “Congrats to Google on Gemini 3! Looks like a great model.”

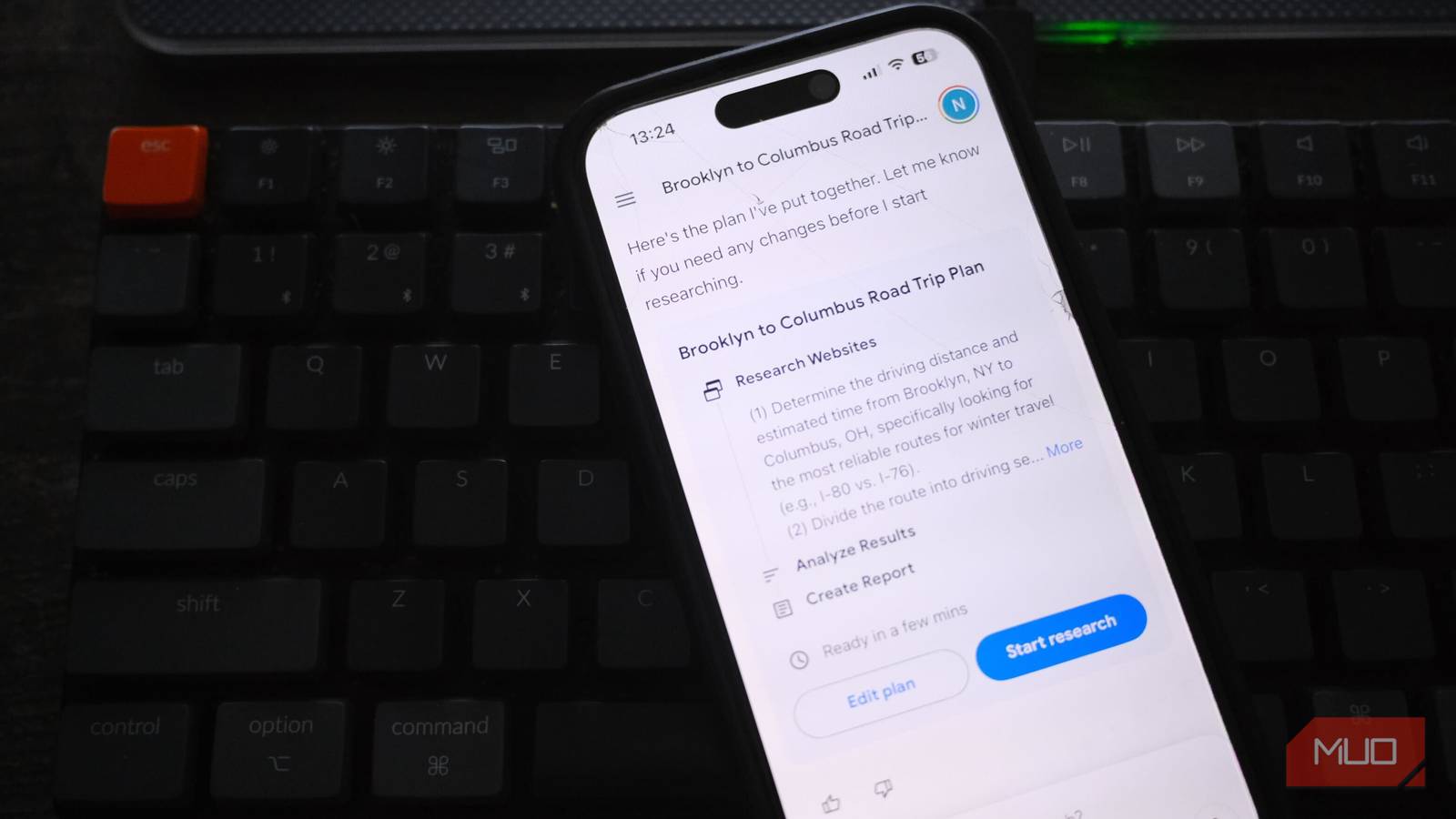

Road Trip Planning with Gemini 3

One practical application of Gemini 3 can be seen in how it approaches planning a road trip. When I requested an itinerary from Brooklyn, New York, to Columbus, Ohio, using Gemini 2.5, I received a structured table within seconds, outlining the journey’s timeline, potential breaks, and relevant travel tips.

In contrast, when I posed the same question to Gemini 3, it took about a minute to respond, displaying status updates such as “Refining directional nuance” while it worked. The resulting itinerary was more detailed and included personalized meal suggestions and scenic detours, such as a visit to the Flight 93 National Memorial.

Users are already pushing Gemini 3 into more advanced territory: one built a 3D LEGO editor with the model, an early sign of the range of applications it can support.

Accessing Google Gemini 3

Full access to Gemini 3 requires a Google Gemini Pro subscription, which also brings the model into Google’s AI Mode. Gemini 3 is available in the mobile apps and on the web. Developers can access it through AI Studio, Vertex AI, and Google Antigravity, a newly launched AI-assisted development platform.

Gemini 3 is also available for free with usage limits, similar to the free tiers of other AI chatbots such as ChatGPT and Claude.

Overall, Gemini 3 marks a significant step forward for Google. Its stronger reasoning and broader range of applications should deepen engagement with Google’s services and strengthen the company’s position in a highly competitive AI market.
