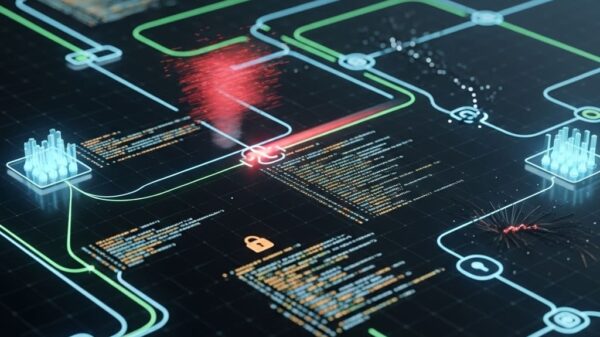

Researchers from The University of Hong Kong and McGill University have unveiled significant security vulnerabilities tied to Next Edit Suggestions (NES) in AI-integrated Integrated Development Environments (IDEs). The study, led by Yunlong Lyu, Yixuan Tang, and Peng Chen, alongside Tian Dong, Xinyu Wang, and Zhiqiang Dong, marks a crucial examination into the security implications of these advanced coding tools, which aim to enhance developer productivity but may inadvertently expose them to new attack vectors.

Unlike traditional autocompletion features, which passively fill in code based on keystrokes, NES actively suggests multi-line code changes by analyzing a broader context of user interactions. This shift introduces a more dynamic and interactive coding experience, enabling developers to navigate relevant code sections and apply edits with minimal friction. However, the enhanced capabilities raise concerns regarding the potential for context poisoning and other security threats.

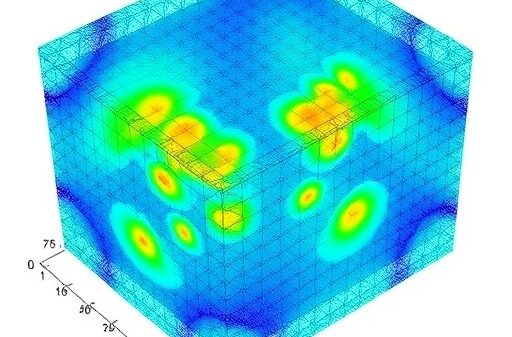

The researchers conducted a systematic security analysis, dissecting the mechanisms behind NES as implemented in popular IDEs like GitHub Copilot and Zed Editor. Their findings indicate that NES retrieves an expanded context from imperceptible user actions, which includes cursor movements and code selections, increasing the potential attack surfaces available to malicious actors. In laboratory settings, these systems demonstrated vulnerability to context manipulation, further compounded by transactional edits that developers make without adequate security awareness.

In a survey of over 200 professional developers, the researchers found a stark lack of awareness regarding NES security risks. While 81.1% of respondents reported having encountered security issues within NES suggestions, only 12.3% regularly verify the security of the code generated. Alarmingly, 32.0% acknowledged that they often skim or rarely scrutinize what the NES proposes. This disconnect underscores a critical need for better education and refined security measures in AI-assisted coding environments.

The researchers emphasized that the expanded interaction patterns inherent in NES disrupt the traditional trust model between developers and their tools. In a seamless workflow, the temptation to accept suggested edits without thorough examination increases, particularly when suggestions are highly accurate. This lapse in vigilance can lead to subtle vulnerabilities being integrated into the codebase, often without the developer’s awareness. The study illustrates that, while NES can boost efficiency, it simultaneously introduces risks that developers may not be equipped to handle.

As part of their comprehensive analysis, the researchers identified twelve previously undocumented attack vectors emerging from the NES functionality. The vulnerability rate exceeded 70% in both commercial and open-source implementations. For instance, one developer reported that a secret key was inadvertently exposed in plaintext in the codebase, despite efforts to exclude it through a .cursorignore file. Such incidents highlight the pressing need for security-focused design principles in modern IDEs.

The paper draws attention to a fundamental gap between the innovative features NES brings to coding and the defensive measures currently in place. To address these vulnerabilities, the authors advocate for the development of automated security protocols in AI-assisted programming environments. They argue that as the integration of AI becomes increasingly prevalent in software development, prioritizing security will be essential for protecting developers and their projects.

The findings not only illuminate the risks posed by NES but also call for action from both IDE developers and the broader coding community. As developers continue to rely on these sophisticated tools, the need for heightened awareness and robust security frameworks becomes critical in safeguarding the integrity of the software development lifecycle.

See also Anthropic’s Claims of AI-Driven Cyberattacks Raise Industry Skepticism

Anthropic’s Claims of AI-Driven Cyberattacks Raise Industry Skepticism Anthropic Reports AI-Driven Cyberattack Linked to Chinese Espionage

Anthropic Reports AI-Driven Cyberattack Linked to Chinese Espionage Quantum Computing Threatens Current Cryptography, Experts Seek Solutions

Quantum Computing Threatens Current Cryptography, Experts Seek Solutions Anthropic’s Claude AI exploited in significant cyber-espionage operation

Anthropic’s Claude AI exploited in significant cyber-espionage operation AI Poisoning Attacks Surge 40%: Businesses Face Growing Cybersecurity Risks

AI Poisoning Attacks Surge 40%: Businesses Face Growing Cybersecurity Risks