India’s Union Government has notified amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, mandating that photorealistic AI-generated content be clearly labeled. The move aims to increase accountability amid the rapid rise of synthetic media, particularly illegal material such as non-consensual deepfakes. The changes will take effect on February 20, coinciding with the conclusion of the upcoming India-AI Impact Summit.

Under the revised regulations, social media platforms must remove certain categories of unlawful content within a sharply reduced window of two to three hours. Specifically, content identified as illegal by a court or an “appropriate government” authority must be taken down within three hours. The shorter deadline is part of a broader effort to curb the dissemination of harmful digital material.

On Tuesday, the Ministry of Electronics and Information Technology notified these amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. A notable change from the proposal issued last October is the removal of a requirement that labels on AI-generated content cover at least 10% of the content area. The government has instead opted for a less prescriptive standard under which labels must be “prominently” visible.

A senior government official indicated that the specific 10% requirement was dropped in response to feedback from technology companies during consultations. The companies had criticized the initial proposal as overly restrictive and had expressed concern that such a label could detract from the visual appeal of content and potentially drive users away.

In addition to the labeling requirements, the government is urging social media platforms to implement automated tools to detect and prevent the spread of illegal, sexually exploitative, misleading, or fraudulent AI-generated content. These platforms are also mandated to inform users about the penalties for violating these rules at least once every three months, thereby fostering a more responsible digital environment.

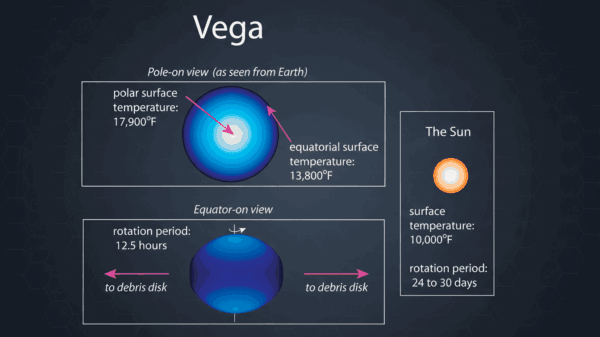

The updated regulations significantly increase the obligations of social media companies and digital platforms concerning deepfakes and AI-generated material. The government has now clearly defined what constitutes “synthetically generated information,” which includes audio, video, photos, or other visual content that has been artificially or algorithmically created to resemble real people or events.

This regulatory change reflects growing global concern about the implications of AI-generated content, particularly for misinformation and digital rights. As synthetic media technologies continue to evolve, the challenge will be to balance innovation against the protection of individual rights and societal norms. The amendments are a significant step in navigating this landscape and place India among the countries actively shaping the global discourse on digital ethics and governance.
