Diffusion language models (dLLMs) reached a significant milestone with the release of LLaDA2.1 on HuggingFace. Launched last Monday, just two months after its predecessor LLaDA2.0, the model comes in two sizes: LLaDA2.1-Mini (16B parameters) and LLaDA2.1-Flash (100B parameters). The release marks a notable step forward for diffusion models, completing what some experts call the technology's “coming-of-age ceremony.”

At the heart of LLaDA2.1's advances is a peak decoding speed of 892 tokens per second, a figure that, for the first time, turns the theoretical efficiency of diffusion decoding into a practical advantage. The model introduces a mechanism for correcting errors during generation, easing the speed-versus-accuracy trade-off that constrained earlier diffusion models. Together with a switchable dual-mode design and a reinforcement learning phase added in post-training, these changes position diffusion language models as a serious competitor in the field.

Autoregressive models, which generate text one token at a time, currently dominate the field. The approach offers stability and control, but it incurs high computational cost and slow inference, particularly for long outputs. Worse, an autoregressive model cannot revisit tokens it has already emitted, so early mistakes accumulate, which hinders large-scale applications.

LLaDA2.1 addresses these issues by departing from the autoregressive paradigm rather than incrementally improving it. Much like a “cloze test,” it fills in multiple blanks in parallel, which lets the model refine its output continuously. The approach is detailed in a technical report by Ant Group, Zhejiang University, Westlake University, and Southern University of Science and Technology.
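To make the “cloze test” idea concrete, here is a toy sketch of confidence-based parallel unmasking, a common decoding strategy for masked diffusion language models. It is not LLaDA2.1's actual decoder: the predictor `toy_predict`, its confidence scores, and the `threshold` parameter are all hypothetical. Each loop iteration stands for one forward pass, and every blank whose confidence clears the threshold is filled in that same pass.

```python
from typing import Callable, List, Optional, Tuple

MASK = None  # a blank ("masked") position still waiting to be filled

# Hypothetical stand-in for one forward pass of a dLLM: for every position it
# returns a (token, confidence) guess given the current partially filled text.
Predictor = Callable[[List[Optional[str]]], List[Tuple[str, float]]]


def parallel_unmask(length: int, predict: Predictor, threshold: float,
                    max_steps: int = 100) -> Tuple[List[Optional[str]], int]:
    """Fill a fully masked sequence, committing in the same step every blank
    whose confidence reaches `threshold` (and at least one blank per step)."""
    seq: List[Optional[str]] = [MASK] * length
    steps = 0
    while MASK in seq and steps < max_steps:
        steps += 1
        guesses = predict(seq)  # one (hypothetical) forward pass
        blanks = [i for i, tok in enumerate(seq) if tok is MASK]
        chosen = [i for i in blanks if guesses[i][1] >= threshold]
        if not chosen:
            # Guarantee progress: commit the single most confident blank.
            chosen = [max(blanks, key=lambda i: guesses[i][1])]
        for i in chosen:
            seq[i] = guesses[i][0]
    return seq, steps


# Toy predictor (hypothetical): always guesses the right word, and its
# confidence rises by 0.25 for each already-filled neighbour.
TARGET = "the cat sat on the mat".split()
BASE = [0.75, 0.5, 0.5, 0.25, 0.75, 0.5]


def toy_predict(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
    out = []
    for i in range(len(seq)):
        filled = sum(1 for j in (i - 1, i + 1)
                     if 0 <= j < len(seq) and seq[j] is not MASK)
        out.append((TARGET[i], BASE[i] + 0.25 * filled))
    return out


seq, steps = parallel_unmask(len(TARGET), toy_predict, threshold=0.75)
print(" ".join(seq), steps)  # the cat sat on the mat 4
```

Here the six-word sentence is finished in four passes, whereas one-token-per-pass decoding would need six. Raising `threshold` above any confidence the toy model can produce forces one commitment per pass (six passes), while lowering it to 0.0 fills every blank at once, trading the extra context of later passes for speed.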

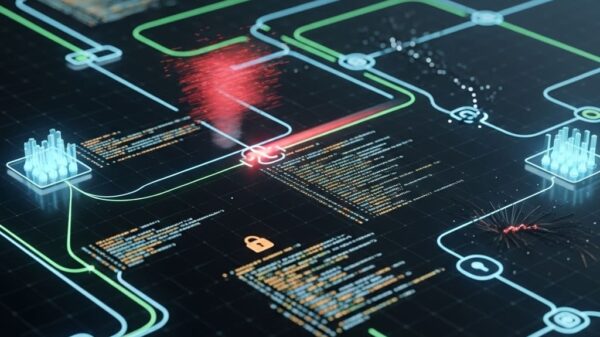

The significance of LLaDA2.1 is best understood against the limitations of autoregressive architectures, which have prompted researchers to explore alternatives, with diffusion models emerging as a viable option. Rather than following a strictly left-to-right path, diffusion models generate multiple tokens simultaneously. Early diffusion models, however, suffered from higher error rates because of their fixed “mask-to-token” paths, meaning a blank, once filled, stayed fixed even if it turned out to be wrong; this hampered practical deployment.

LLaDA2.1 marks a breakthrough here by systematically refining its decoding mechanism and training protocol, addressing the long-standing tension between speed and quality in diffusion language models. The results are notable: on complex programming tasks, the 100B-parameter version of LLaDA2.1 reached 892 tokens per second, a figure made more significant by the fact that it was recorded on a demanding benchmark.

One of LLaDA2.1's standout features is its Error-Correcting Editable (ECE) mechanism, which lets the model draft an answer quickly and correct it as it goes. Generation happens in two phases: the model first produces a fast draft that tolerates some uncertainty, then switches to an editing phase that revises that draft. This lets LLaDA2.1 avoid the inconsistencies common in parallel decoding and sets it apart from models bound to a rigid, write-once process.
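The draft-then-edit idea can be illustrated with a toy under loudly stated assumptions: the model `toy_predict` is hypothetical, the editing rule (overwrite a drafted token when the model now prefers a different token with confidence of at least `edit_threshold`) is a simplification chosen for this sketch, and none of it is the ECE implementation from the technical report. The point is only that a parallel draft can contain a locally plausible error that becomes fixable once the surrounding context exists.

```python
from typing import Callable, List, Optional, Sequence, Tuple

MASK = None  # an unfilled position
Guesses = List[Tuple[str, float]]  # (token, confidence) per position


def toy_predict(seq: Sequence[Optional[str]]) -> Guesses:
    """Hypothetical model: position 3 is ambiguous until both neighbours
    exist, so a pass without them guesses a plausible but wrong word."""
    target = "the cat sat on the mat".split()
    out: Guesses = []
    for i, word in enumerate(target):
        if i == 3 and (seq[2] is MASK or seq[4] is MASK):
            out.append(("in", 0.6))
        else:
            out.append((word, 0.9))
    return out


def draft_then_edit(length: int,
                    predict: Callable[[Sequence[Optional[str]]], Guesses],
                    edit_threshold: float,
                    max_rounds: int = 5) -> Tuple[List[str], List[List[str]]]:
    # Draft phase: fill every position in one parallel pass, accepting
    # low-confidence guesses instead of waiting for certainty.
    draft = predict([MASK] * length)
    seq: List[str] = [tok for tok, _ in draft]
    history = [list(seq)]
    # Edit phase (simplified, hypothetical rule): re-score the finished draft
    # and overwrite any token the model now confidently prefers to replace.
    for _ in range(max_rounds):
        changed = False
        for i, (tok, conf) in enumerate(predict(seq)):
            if tok != seq[i] and conf >= edit_threshold:
                seq[i] = tok
                changed = True
        if not changed:
            break
        history.append(list(seq))
    return seq, history


final, history = draft_then_edit(6, toy_predict, edit_threshold=0.8)
print(" ".join(history[0]))  # the cat sat in the mat
print(" ".join(final))       # the cat sat on the mat
```

The draft says “in” because, with no neighbours filled in, the toy model cannot tell which preposition fits; the editing pass sees the full sentence and corrects it to “on.” Raising `edit_threshold` to 0.95 blocks that correction and the error survives, so in this sketch the editing threshold controls how aggressively drafts are revised.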

Another notable advance is a dual-mode system within a single model. Users can switch between Speedy Mode, which favors fast drafting followed by post-editing, and Quality Mode, intended for high-stakes tasks that demand precision. Because both modes live in one model, users can adjust its behavior to the task at hand without maintaining multiple versions.
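One way to picture two modes in one checkpoint is as two sets of decoding thresholds applied to the same model outputs. The sketch below assumes that framing; the `DecodeMode` fields, the `"speedy"`/`"quality"` keys, and the threshold values are hypothetical illustrations, not LLaDA2.1's published configuration or API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DecodeMode:
    """Hypothetical knobs for one decoding mode of a single dLLM checkpoint."""
    name: str
    unmask_threshold: float  # confidence needed to commit a blank in a pass
    edit_threshold: float    # confidence needed to overwrite a drafted token


# Illustrative values only; not LLaDA2.1's actual settings.
MODES: Dict[str, DecodeMode] = {
    "speedy": DecodeMode("speedy", unmask_threshold=0.5, edit_threshold=0.8),
    "quality": DecodeMode("quality", unmask_threshold=0.9, edit_threshold=0.9),
}


def commit_positions(confidences: List[float], mode: str) -> List[int]:
    """Positions a single forward pass would commit under the chosen mode."""
    try:
        cfg = MODES[mode]
    except KeyError:
        raise ValueError(
            f"unknown mode {mode!r}; expected one of {sorted(MODES)}") from None
    return [i for i, c in enumerate(confidences) if c >= cfg.unmask_threshold]


conf = [0.95, 0.62, 0.88, 0.41, 0.97]
print(commit_positions(conf, "speedy"))   # [0, 1, 2, 4]
print(commit_positions(conf, "quality"))  # [0, 4]
```

Given the same confidences from one forward pass, the speedy setting commits four positions and relies on later editing to catch mistakes, while the quality setting commits only the two it is most sure of, spending more passes in exchange for more reliable tokens.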

To better align the model with human intent, the development team added a reinforcement learning phase to LLaDA2.1's training. Its ELBO-based (evidence lower bound) Block-level Policy Optimization (EBPO) method improves the model's ability to follow instructions, an important step toward a more adaptable model.

Experimental evaluations show tangible gains in both speed and performance. In Speedy Mode, task scores were slightly lower than LLaDA2.0's, but token-generation throughput rose substantially. In Quality Mode, LLaDA2.1 outperformed its predecessor across a range of benchmarks, showing that it can deliver on both speed and quality. The peak throughput of LLaDA2.1-Flash reached 891.74 TPS (tokens per second) on challenging programming benchmarks, the source of the 892 tokens/second headline figure.

The release of LLaDA2.1 not only showcases the potential of diffusion models but also raises important questions about the autoregressive architecture that has dominated AI. As LLaDA2.1 paves the way for further work, it invites a re-examination of the principles guiding model design and suggests that a paradigm shift may be on the horizon.
