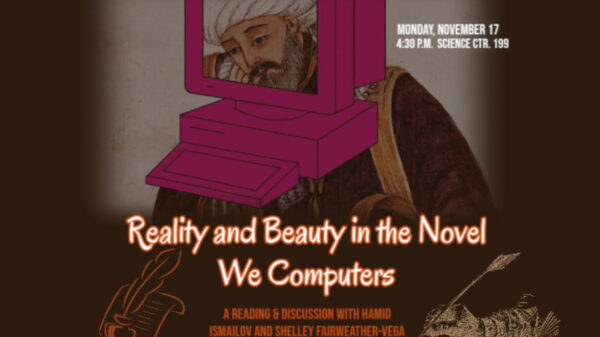

As the landscape of higher education continues to evolve, the integration of generative artificial intelligence (AI) stands out as a compelling topic of discussion. Faculty members from Swarthmore College recently shared their varied perspectives on this issue, emphasizing both the potential benefits and challenges that generative AI poses for education, particularly in the liberal arts.

Syon Bhanot, Associate Professor of Economics, states, “Generative AI is here, and we cannot pretend it is not.” He encourages a proactive adaptation to this technology rather than attempting to exclude it. Bhanot has made adjustments to his course assessments to mitigate the impact of AI-induced shortcuts, while also recognizing situations where AI can enhance learning, such as aiding in coding tasks with software like Stata or LaTeX. He advocates for a strategic incorporation of AI tools to alleviate faculty workload and improve overall morale, suggesting a role for AI in enhancing efficiency within academic environments.

Sibelan Forrester, Sarah W. Lippincott Professor of Modern and Classical Languages, brings a nuanced perspective, particularly regarding AI in translation and interpretation. While she acknowledges the utility of tools like Google Translate, she notes significant limitations. Forrester highlights the challenges of translating less common languages and the inadequacy of AI in grasping figurative language, which often leads to poor translations. “The precise meaning of an original is less important than its sound and rhythm,” she explains, emphasizing the cultural nuances that AI fails to capture. This raises broader questions about the reliability of AI in maintaining the richness of human communication.

Emily Gasser ’07, Associate Professor of Linguistics, is candid in her criticism, calling generative AI systems “synthetic text extruding machines.” She asserts that such systems cannot think or analyze deeply, and that their outputs require rigorous verification. Gasser warns against relying on AI for scholarly work, suggesting that its use undermines the fundamental purpose of education: to foster critical thinking and creativity. She also cites real-world consequences of AI misinformation, ranging from legal mishaps to health hazards.

Sam Handlin ’00, Associate Professor of Political Science, advocates for a middle-ground approach to AI usage in academia. He acknowledges the ubiquity of AI in modern tools but expresses concern about its cognitive impact on students. Recent studies indicate that reliance on AI for writing and reasoning could diminish cognitive skills. He sees a need for a college-wide policy to manage AI use, particularly in tasks requiring complex cognitive effort.

Emad Masroor, Visiting Assistant Professor of Engineering, echoes concerns about the detrimental effects of relying on generative AI. He argues that these tools often provide an “illusion of knowledge,” shortcutting the intellectual processes that are essential to true learning. He warns that such tools may erode students’ abilities to write well, think critically, and engage deeply with content, compromising the very essence of education.

Warren Snead, Assistant Professor of Political Science, emphasizes the importance of the writing process in education. He argues that writing assignments promote critical thinking and the clear development of ideas, both essential to a well-rounded education, and that the introduction of AI tools threatens to diminish this valuable process. “The value in writing assignments is that it pushes students to think,” he asserts, advocating for department-level autonomy in establishing AI policies.

Donna Jo Napoli, Professor of Linguistics and Social Justice, expresses a similar sentiment, indicating that while AI can be useful for mundane tasks, it should not replace the critical engagement that education fosters. She urges students to embrace the challenges of learning, as those struggles are integral to personal and intellectual growth.

Federica Zoe Ricci, Assistant Professor of Statistics, presents a balanced view, recognizing both the potential and pitfalls of AI in academia. While she sees value in using AI for repetitive tasks, she warns that over-reliance can hinder skill development and social interactions crucial for success. She believes that students must learn to engage with AI without compromising their educational experience.

The perspectives shared by these faculty members reflect a significant discourse on the implications of generative AI in higher education. As institutions navigate this complex landscape, the challenge remains in finding a balance between leveraging the benefits of AI and preserving the integrity of the educational process.
