February 17, 2026

Edge computing devices are rapidly evolving into frontline AI systems, with applications in retail, manufacturing, and logistics. Integrating CPUs, GPUs, and NPUs (neural processing units) on the same device is crucial for delivering low-latency, on-device intelligence. For Chief Information Officers (CIOs), this shift raises a practical challenge: matching the diverse offerings from Intel, AMD, NVIDIA, and Arm to real operational needs.

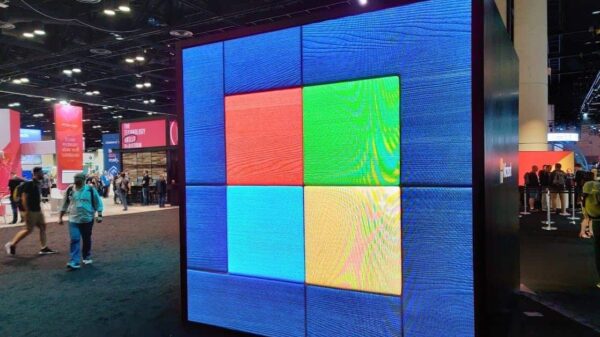

Enterprises are increasingly deploying AI closer to where data is generated, such as stores and factories, to reduce latency, improve privacy, and keep working in bandwidth-constrained environments. Modern NPUs are designed to offload inference from CPUs and GPUs, which improves battery life and thermal behavior. Microsoft’s AI Hub, for instance, curates applications that take advantage of NPUs, a sign that the software landscape is shifting toward on-device acceleration across platforms.

In an edge computing architecture, the CPU acts as the system orchestrator, coordinating operations and preparing data. The GPU is the parallel workhorse for high-throughput tasks, particularly video and imaging. The NPU improves performance per watt by running inference on dedicated low-power hardware, which makes it well suited to always-on, day-to-day workloads, especially where bandwidth or energy is limited. For CIOs, understanding how these components divide the work is essential for optimizing performance across diverse environments.
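To make this division of labor concrete, here is a minimal sketch of how a runtime might route work to each unit. It is a hypothetical heuristic, not any vendor's scheduler: the `Task` fields, the `route` function, and the rule that sustained or battery-powered inference goes to the NPU are assumptions chosen to mirror the roles described above.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"


@dataclass
class Task:
    # Hypothetical task descriptor; field names are illustrative, not from any SDK.
    name: str
    kind: str        # "orchestration", "preprocess", or "inference"
    parallel: bool   # high-throughput parallel work, e.g. multi-stream video
    sustained: bool  # runs continuously, e.g. always-on noise suppression


def route(task: Task, on_battery: bool) -> Unit:
    """Assign a task to a compute unit using a simple role-based heuristic."""
    if task.kind in ("orchestration", "preprocess"):
        return Unit.CPU  # the CPU coordinates the system and prepares data
    if task.kind == "inference":
        if task.sustained or on_battery:
            return Unit.NPU  # steady inference gets the best performance per watt
        if task.parallel:
            return Unit.GPU  # bursty, throughput-heavy inference goes to the GPU
        return Unit.NPU
    # Anything else: parallel work to the GPU, the rest stays on the CPU.
    return Unit.GPU if task.parallel else Unit.CPU


if __name__ == "__main__":
    tasks = [
        Task("sensor data preparation", "preprocess", parallel=False, sustained=False),
        Task("multi-camera detection", "inference", parallel=True, sustained=False),
        Task("noise suppression", "inference", parallel=False, sustained=True),
    ]
    for t in tasks:
        print(t.name, "->", route(t, on_battery=False).value)
```

Production runtimes weigh far more than this (operator support on each accelerator, memory bandwidth, current thermal headroom); the sketch only shows that each unit has a natural default role.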

Intel’s approach is platform-first, emphasizing fleet manageability and balanced AI workloads. The Core Ultra (Series 2) combines CPU cores with an integrated Arc GPU and an NPU to deliver up to 99 “total platform TOPS” across varied inference tasks. The vPro portfolio reflects Intel’s commitment to stability and long-term software support, which matters most to large enterprise fleets. In this architecture, sustained tasks such as noise reduction and object detection can be offloaded from the CPU and GPU to the NPU, improving battery life and thermal performance.

AMD, by contrast, targets deterministic AI and functional safety for real-time systems with its Ryzen AI and Versal AI Edge Gen2 platforms. The Ryzen Embedded 8000 integrates a CPU, an RDNA 3 GPU, and an XDNA NPU, delivering moderate AI performance suited to robotics and automation, along with documented functional safety features for regulated environments. These capabilities make AMD’s offerings particularly appealing for safety-critical applications.

NVIDIA leads with a software-centric approach built on a robust developer ecosystem, including the JetPack SDK and specialized modules such as the Jetson AGX Orin Industrial. These platforms handle demanding tasks such as multi-camera perception and sensor fusion, and their ready-to-deploy software stack reduces integration time and risk. NVIDIA’s emphasis on software maturity also positions it well for the growing demand for perception-heavy applications across sectors.

Arm focuses on ultra-low-power edge devices, enabling partners to build integrated CPU-NPU systems that run within milliwatt-level power envelopes. Pairing the Cortex-A320 CPU with the Ethos-U85 NPU brings meaningful AI workloads, such as vision and speech recognition, to IoT devices without sacrificing battery life. This efficiency makes Arm-based designs a strong fit for handhelds and distributed sensors where energy is the binding constraint.

As CIOs evaluate their options, several trends warrant attention. Although every vendor is moving toward heterogeneous CPU-GPU-NPU designs, each applies the approach differently: Intel emphasizes centralized manageability, AMD focuses on functional safety, NVIDIA drives developer engagement, and Arm prioritizes power efficiency. This spread shows that workload and operational context, not headline TOPS figures, should guide silicon selection.
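The workload-first principle can be expressed as a simple shortlisting step: treat the workload's requirements as hard constraints and use TOPS only as a minimum threshold, not as the ranking criterion. The sketch below is illustrative only; the `Platform` and `Workload` fields, the `shortlist` function, the platform names, and every number are hypothetical placeholders, not specifications of any vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    # Hypothetical platform profile; all values below are placeholders.
    name: str
    tops: float             # peak TOPS as quoted on a datasheet
    power_w: float          # typical power envelope in watts
    safety_certified: bool  # has functional-safety documentation
    fleet_managed: bool     # supports centralized fleet management


@dataclass(frozen=True)
class Workload:
    min_tops: float
    max_power_w: float
    needs_safety: bool
    needs_fleet_mgmt: bool


def shortlist(workload: Workload, platforms: list[Platform]) -> list[Platform]:
    """Keep platforms that meet every hard constraint, ordered by lowest power first."""
    fits = [
        p for p in platforms
        if p.tops >= workload.min_tops          # TOPS is a floor, not the goal
        and p.power_w <= workload.max_power_w
        and (p.safety_certified or not workload.needs_safety)
        and (p.fleet_managed or not workload.needs_fleet_mgmt)
    ]
    # Lower power eases thermal design; ties go to the higher-TOPS part.
    return sorted(fits, key=lambda p: (p.power_w, -p.tops))


# Hypothetical candidates, not real products.
CANDIDATES = [
    Platform("platform-a", tops=200.0, power_w=40.0, safety_certified=False, fleet_managed=False),
    Platform("platform-b", tops=40.0, power_w=15.0, safety_certified=True, fleet_managed=False),
    Platform("platform-c", tops=30.0, power_w=12.0, safety_certified=False, fleet_managed=True),
]

if __name__ == "__main__":
    robot_cell = Workload(min_tops=20, max_power_w=20, needs_safety=True, needs_fleet_mgmt=False)
    retail_kiosk = Workload(min_tops=10, max_power_w=25, needs_safety=False, needs_fleet_mgmt=True)
    print([p.name for p in shortlist(robot_cell, CANDIDATES)])    # ['platform-b']
    print([p.name for p in shortlist(retail_kiosk, CANDIDATES)])  # ['platform-c']
```

In this toy example the highest-TOPS candidate never makes either shortlist because it exceeds both power budgets. A real evaluation would add further constraints, such as lifecycle length and software support commitments.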

NPUs are also moving from a secondary role to a central one in enterprise AI initiatives, as Microsoft’s AI Hub and its NPU-aware applications suggest. Industrial-grade computing is becoming mainstream as well, with more vendors offering hardened modules that meet the reliability demands of enterprise deployments. Looking ahead, the combination of local inference with cloud capabilities is likely to define the architecture of future AI deployments.

CIOs are advised to put the workload ahead of the silicon when selecting edge AI solutions. Thermal constraints, lifecycle commitments, and manageability all have a significant effect on total cost of ownership. As NPUs become central to everyday inference and the supporting ecosystems mature rapidly, organizations that plan for hybrid edge-cloud operations are well positioned to gain a competitive advantage in distributed computing.
