Nvidia has long been synonymous with graphics processing units, or GPUs, a legacy reinforced by the recent surge in demand for these chips driven by generative AI. However, the chipmaker has begun to pivot, signaling that it wants to expand its customer base to include buyers whose workloads are less compute-intensive. As part of this effort, Nvidia recently spent billions to acquire technology from a startup focused on low-latency AI computing, and it has begun offering its CPUs as standalone chips as part of its latest superchip system.

In a significant development, Meta announced yesterday that it will purchase billions of dollars' worth of Nvidia chips to expand the computing capacity of its large-scale infrastructure projects. The multiyear contract builds on an existing partnership: Meta previously estimated that it would acquire 350,000 H100 chips from Nvidia by the end of 2024, and that by 2025 it would have access to approximately 1.3 million GPUs in total, although it remained unclear whether all of these would come from Nvidia.

Nvidia confirmed that the expanded deal involves Meta building hyperscale data centers, optimized for both training and inference, in line with Meta's long-term AI roadmap. The initiative includes a "large-scale deployment" of Nvidia's CPUs along with "millions" of Nvidia Blackwell and Rubin GPUs.

Notably, Meta is the first major tech firm to announce a large-scale purchase of Nvidia's Grace CPU as a standalone chip. The move follows through on what Nvidia signaled when it released the full specifications of its new Vera Rubin superchip, and it fits the company's broader pitch of a comprehensive approach to compute power that ties different kinds of chips together.

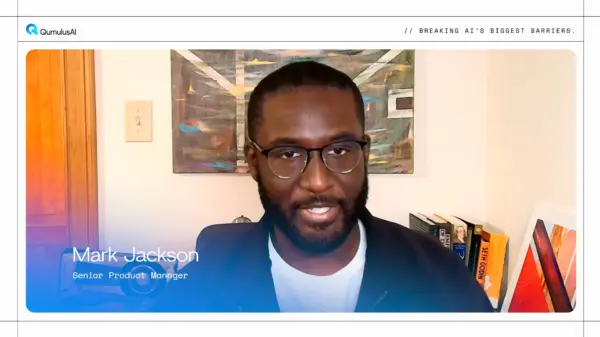

Ben Bajarin, CEO of tech market research firm Creative Strategies, says the shift suggests Nvidia recognizes that a growing share of AI applications need CPU capacity much like conventional cloud services do. "The reason why the industry is so bullish on CPUs within data centers right now is agentic AI, which puts new demands on general-purpose CPU architectures," he says.

A report from the chip newsletter SemiAnalysis reinforces this view, pointing to a rise in CPU usage to support AI training and inference. For instance, Microsoft's data center for OpenAI reportedly now requires "tens of thousands of CPUs" to process and manage the vast amounts of data produced by its GPUs, a requirement that did not exist at this scale before the current wave of AI.

Despite this growing emphasis on CPUs, Bajarin notes that GPUs remain the critical component in advanced AI hardware systems, as shown by Meta's continued purchases of Nvidia GPUs, which still outnumber its CPU purchases. "If you're one of the hyperscalers, you're not going to be running all of your inference computing on CPUs," he says. "You just need whatever software you're running to be fast enough on the CPU to interact with the GPU architecture that's actually the driving force of that computing. Otherwise, the CPU becomes a bottleneck."

Meta has not provided additional comment on its expanded agreement with Nvidia. However, on a recent earnings call, the social media giant said it expects to spend between $115 billion and $135 billion on capital expenditures this year, much of it on AI infrastructure, up from $72.2 billion the previous year, an increase of roughly 59 to 87 percent.

As Nvidia broadens its product lineup and diversifies its customer base, the implications for the AI landscape could be substantial. The growing reliance on CPUs alongside GPUs reflects a change in how companies think about compute power: rather than treating GPUs as the whole story, they are increasingly building integrated systems in which CPUs keep the GPUs fed and working efficiently.
