Artificial intelligence (AI) is increasingly regarded as a fundamental operational risk in banking, according to recent remarks from the Bank for International Settlements (BIS). In a shift from viewing AI merely as a tool for innovation, regulators now underscore the necessity for robust governance and testing comparable to that required for credit and liquidity systems. This evolution signifies that AI’s implications for financial stability are becoming a priority for both regulators and the banking sector.

Tao Zhang, the BIS Chief Representative for Asia and the Pacific, remarked at a conference in Hong Kong that AI is rapidly integrating into the financial system, where it is used for data processing, credit underwriting, fraud detection, and back-office automation. He noted the expanding role of AI, stating, “AI is being adopted across the financial sector … to process large volumes of data.” Such integration highlights AI’s trajectory from peripheral concept to core banking activity.

The implications for quality assurance (QA) and software testing teams are significant. Because AI is now considered an integral part of operational risk, it requires the same level of scrutiny as traditional financial models. Zhang cautioned that advances in large language models and generative AI are extending AI’s capabilities into areas such as customer interaction and supervisory processes. This raises concerns about how AI performs at scale, particularly under stress, and about how interconnected systems may amplify financial shocks.

The BIS’s warnings align with European regulatory priorities, where AI governance and digital strategy have been elevated to core expectations for the 2026–2028 period. European banking supervisors are calling for banks to establish structured governance frameworks and risk controls around their AI applications, reflecting a shift from general monitoring to detailed assessments of specific AI use cases. Supervisors expect banks to have a clear understanding of where and how AI is deployed, along with its micro-prudential impact.

This regulatory evolution indicates that AI validation will increasingly intersect with supervisory expectations surrounding model governance and operational resilience. Banks must ensure that their AI deployments do not mask underlying weaknesses in controls or data quality, as these could threaten financial stability.

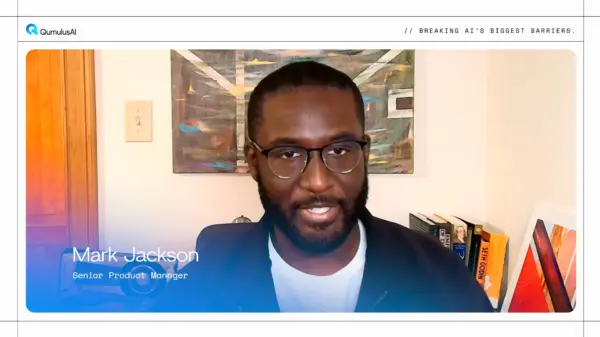

Industry experts interpret this regulatory shift as a transformative change in how AI risk is perceived. Amit Ranjan, Chief Manager at Punjab National Bank, emphasized that AI systems are now prevalent in various decision-making processes within banking, including credit decisioning and fraud monitoring. He noted that supervisors are integrating AI systems into the same governance perimeter as other critical financial models, positioning AI-driven decisions as “material models” subject to model risk governance frameworks.

This regulatory pivot suggests that failures in AI systems may no longer be viewed solely as technology issues but could be considered prudential risks affecting financial stability. Ranjan warned that boards should begin asking whether AI-driven decisions could create correlated risks across their portfolios and whether third-party dependencies are adequately captured within their risk frameworks.

The next phase of AI oversight will likely focus less on efficiency and more on the potential for systemic vulnerabilities. Ranjan’s observations underscore a crucial reality: “The next systemic risk may not originate from markets, but from synchronized algorithms making similar decisions at scale.” This perspective reinforces the need for comprehensive testing that encompasses degradation scenarios and third-party model dependencies.
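One way a testing team might start to quantify the "synchronized algorithms" concern is to measure how often different models reach the same decision on the same cases. The sketch below is illustrative only: the model names, the decision data, and the `mean_pairwise_agreement` helper are assumptions made for this example, not anything described by the BIS or by Ranjan.

```python
from itertools import combinations


def agreement_rate(a, b):
    """Fraction of cases on which two models made the same decision."""
    if len(a) != len(b) or not a:
        raise ValueError("decision lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def mean_pairwise_agreement(decisions_by_model):
    """Average agreement rate over every pair of models."""
    pairs = list(combinations(decisions_by_model.values(), 2))
    if not pairs:
        raise ValueError("need at least two models to compare")
    return sum(agreement_rate(a, b) for a, b in pairs) / len(pairs)


# Hypothetical approve (1) / decline (0) decisions on the same six applications.
decisions = {
    "model_a": [1, 0, 1, 1, 0, 1],
    "model_b": [1, 0, 1, 1, 0, 0],
    "model_c": [1, 0, 1, 0, 0, 1],
}

score = mean_pairwise_agreement(decisions)
print(f"mean pairwise agreement: {score:.3f}")
```

High agreement is not a problem in itself, since good models should often agree. A score that stays high even on borderline or stressed cases may, however, be a prompt to ask whether the models share training data, vendors, or features.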

As the regulatory framework around AI tightens amid economic pressures, analysts at JPMorgan Chase have suggested that the need for significant AI investment in financial services could compel smaller banks to merge, as larger institutions use AI to enhance compliance, treasury operations, and risk management. This competitive pressure is pushing banks to move from pilot projects to full-scale production systems, making AI a baseline expectation rather than a differentiator.

For banking QA, software testing, and digital resilience leaders, the regulatory landscape is increasingly clear: AI must be treated as a critical component of banking operations, subject to rigorous governance and testing. The BIS’s warnings regarding systemic stability and European supervisors’ focus on AI governance are indicative of a broader shift towards embedding AI within operational risk frameworks. As industry leaders like Ranjan assert, validating AI systems will necessitate a comprehensive approach that goes beyond accuracy to include robustness, explainability, and stress behavior.
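To make "stress behavior" concrete, a test can apply a shock to a model's inputs and count how many decisions change. The following is a minimal sketch under stated assumptions: `approve` is a hypothetical stand-in for a credit model (a simple debt-to-income rule), and the applicant data and 20% income shock are invented for illustration.

```python
def approve(income, debt):
    """Hypothetical stand-in for a credit model: approve if debt-to-income <= 0.4."""
    return debt / income <= 0.4


def flip_rate(applicants, income_shock):
    """Share of applicants whose decision changes when income is shocked.

    income_shock is a relative change, e.g. -0.20 for a 20% fall in income.
    """
    flips = 0
    for income, debt in applicants:
        baseline = approve(income, debt)
        stressed = approve(income * (1 + income_shock), debt)
        flips += baseline != stressed
    return flips / len(applicants)


# Hypothetical (income, debt) pairs.
applicants = [
    (100_000, 30_000),
    (80_000, 30_000),
    (50_000, 10_000),
    (60_000, 30_000),
]

rate = flip_rate(applicants, income_shock=-0.20)
print(f"decisions flipped under stress: {rate:.0%}")
```

In practice the stand-in rule would be replaced by the model under test, and the shock by scenarios agreed with the risk function. The point of the sketch is only that stress behavior can be expressed as a measurable, repeatable check rather than a qualitative judgment.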

In this evolving environment, QA teams are transitioning to become frontline control functions, ensuring that AI technologies bolster rather than undermine the stability of the financial system.
