As AI chatbots become integral to daily life, their proliferation raises significant privacy concerns. With users increasingly sharing personal prompts, documents, and interactions, a pressing question emerges: which chatbot truly respects user data?

Recently, PCMag reviewed the privacy policies of some of the most widely used AI applications, including ChatGPT, Copilot, DeepSeek, Gemini, and Qwen. The results revealed substantial differences in how much data these platforms collect, a factor many users overlook when choosing a chatbot.

While AI chatbots streamline a wide range of tasks, from academic assignments to workplace duties, their privacy implications often remain obscure. PCMag’s analysis found that many AI platforms collect vast amounts of user information, frequently far more than users realize. Alarmingly, depending on the laws of the jurisdiction, this data can be shared with advertisers, third-party partners, and even governments.

Understanding Data Collection and Privacy Policies

Echoing its previous evaluations of other apps, PCMag noted a disturbing trend: seemingly benign applications often gather extensive data. This finding underscores the recommendation to favor browser-based interfaces over installed applications, as the latter tend to extract more user information.

Upon reviewing the App Store privacy labels for various chatbots, PCMag found notable differences in data collection practices. Some chatbots gather only basic identifiers and app-usage data, while others, such as Google’s Gemini, collect an extensive array of user information, including browsing history, contact lists, emails, photos, precise location, and videos. PCMag aptly remarked, “It seems a bit much,” particularly when contrasted with Qwen, which claims to collect only device IDs and interactions. However, Qwen’s App Store privacy label does not fully align with its written policy, raising further questions about transparency.

These labels come with a caveat that applies to every chatbot reviewed: neither Apple nor Google actively audits the accuracy of these self-reported privacy disclosures, so users are left to trust the integrity of the companies involved.

Identifying the Most Privacy-Conscious AI Chatbots

To determine what data is actually collected, PCMag also examined the companies’ published privacy policies. Both DeepSeek and OpenAI provide concise, readable policies. Qwen’s short policy, by contrast, contains several typographical errors, which is concerning for a document that governs data protection.

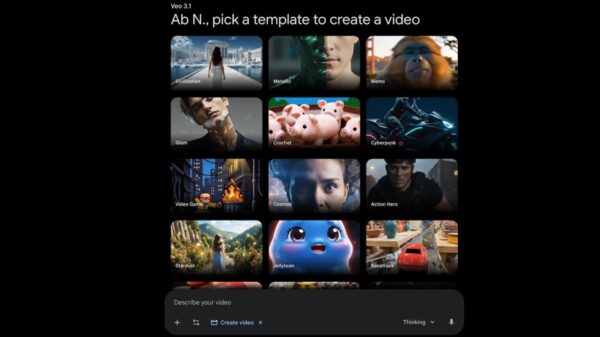

Google’s Gemini had one of the standout policies. Its documentation is comprehensive and candid about the extent of data collection: the company openly states that it processes user audio, and it lets users delete or disable their Gemini activity history. Notably, as PCMag’s analysis emphasized, Google acknowledges that human reviewers may read Gemini chats to improve quality and safety. Users who want to keep their data out of this review must opt out entirely.

Despite these measures, PCMag reiterated the importance of caution: “Given that all the apps above record and store your prompts, it’s best to refrain from sharing secrets with any of these AI chatbots.”

Among the evaluated platforms, Microsoft’s Copilot emerged as the leader in privacy protection. Its policy is spread across several linked documents because Microsoft 365 Copilot and Bing serve distinct functions, but the upshot is that Copilot collects minimal user data and does not share it with advertisers. Unlike ChatGPT, which draws on a broader public dataset, Copilot grounds its contextual responses in existing Microsoft 365 data, which places it ahead of its competitors on data privacy.

Broader Implications for Data Privacy in AI

Concerns about data access extend beyond U.S. companies. Chinese AI firms such as DeepSeek operate under regulations that give the government extensive rights over user data. The issue has gained traction amid broader debates over app data practices, such as those involving TikTok earlier this year.

As Cliff Steinhauer of the National Cybersecurity Alliance pointed out, the rise of companies like DeepSeek necessitates a broader global dialogue concerning AI privacy. He stated, “Chinese AI companies operate under distinct requirements that give their government broad access to user data and intellectual property.”

However, this concern is not exclusive to China; many U.S.-based AI applications also gather large amounts of user data, which makes it an industry-wide problem.

For users seeking to minimize their data exposure, PCMag offers practical advice: avoid mobile apps when possible. For even stronger privacy, users can run AI models locally on their own computers. Tools such as Ollama let users run open-weight language models, including DeepSeek R1, entirely offline, so prompts and personal information never leave the device.

As AI chatbots become a fixture of everyday life, understanding how they collect and share data is essential for anyone who uses them.
