Integrating generative AI with traditional machine learning systems is becoming increasingly important. Many organizations try to improve user experience by simply adding chatbots on top of existing data structures, and the results are often disappointing. That raises questions about return on investment (ROI), risk management, and boardroom confidence in AI initiatives, and it points to the need for a more structured approach.

Understanding Generative AI’s Role

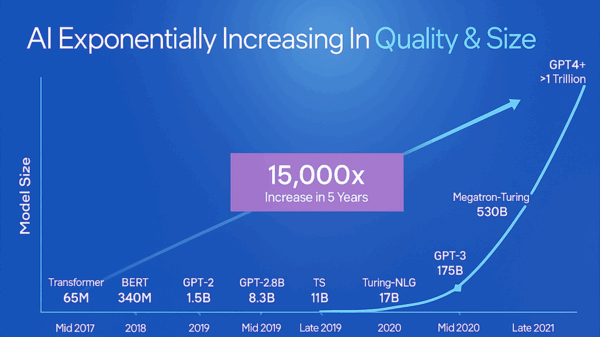

Generative AI, particularly large language models (LLMs), excels at language tasks: summarizing, rewriting, translating, and holding conversations. However, these models are probabilistic text generators, not systems of record. In high-stakes environments such as finance or healthcare, relying solely on LLMs can lead to failures when they are not built around clearly defined processes and metrics. As IT leaders have consistently noted, adding AI to existing systems without proper integration does not guarantee success and can increase risk instead.

The Value of Traditional AI

By “traditional AI,” we refer to established methodologies such as gradient boosting, logistic regression, and various predictive models tailored for tasks like churn prediction or resource allocation. These models provide explicit features, coefficients, and consistent behavior. In some instances, integrating predictive AI has boosted operational accuracy from approximately 70% to between 97% and 98%. Unlike LLMs, traditional models can be trained and audited on specific datasets, allowing for better governance and monitoring.

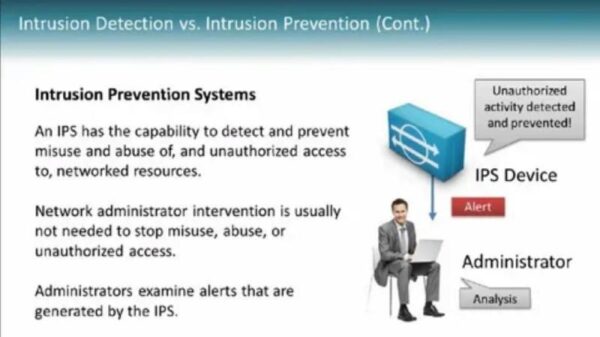

To achieve a robust AI architecture, the suggested strategy is to layer the models: traditional predictive models operate underneath, while generative AI handles unstructured interactions on top. This layering keeps orchestration manageable in complex environments. Chatbots, even those refined with expert input, deliver effective results only when they are embedded within safety systems and human oversight. LLMs can drive the conversation, but they should not make critical decisions such as loan approvals or medical prescriptions.
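The layering can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the coefficients, the 20% approval threshold, and the names `predict_default`, `decide`, and `conversational_layer` are hypothetical, and the conversational function is a plain-string stand-in for an LLM call. The point is only the shape: the predictive layer produces the decision, and the conversational layer receives a read-only result that it can word but not change.

```python
import math
from dataclasses import dataclass

# Hypothetical coefficients for illustration only; a real model would be
# trained and audited on the organization's own data.
COEFFICIENTS = {"debt_to_income": 4.0, "missed_payments": 0.8}
INTERCEPT = -3.0
APPROVAL_THRESHOLD = 0.20  # assumed policy: approve if default risk is below 20%


@dataclass(frozen=True)
class Decision:
    """Immutable result handed from the predictive layer to the chat layer."""
    approved: bool
    default_probability: float


def predict_default(features):
    """Predictive layer: logistic score over explicit, named features."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * features[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-z))


def decide(features):
    """The decision is owned by the predictive model and a fixed threshold."""
    probability = predict_default(features)
    return Decision(approved=probability < APPROVAL_THRESHOLD,
                    default_probability=probability)


def conversational_layer(decision):
    """Stand-in for an LLM call: it words the outcome but cannot alter it."""
    outcome = "approved" if decision.approved else "declined"
    return (f"Your application was {outcome} "
            f"(estimated default risk {decision.default_probability:.0%}).")


if __name__ == "__main__":
    applicant = {"debt_to_income": 0.5, "missed_payments": 2}
    print(conversational_layer(decide(applicant)))
```

Because `Decision` is a frozen dataclass, any attempt by the upper layer to overwrite `approved` raises an error, which is one simple way to make the separation explicit in code.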

Practical Applications in FinTech and MarTech

In the financial sector, for instance, CFOs and FinTech teams can optimize their operations with risk models that predict factors such as loan defaults and losses. On the generative AI side, an AI copilot can explain decision outcomes, gather the necessary data, and simulate alternative scenarios by querying those same risk engines. This creates transparency for regulators and a more user-friendly experience for customers.
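As a rough sketch of the scenario-simulation idea, the copilot can be limited to re-running the risk engine on modified inputs and reporting what comes back. The function `simulate_scenarios`, the `toy_risk_engine` placeholder, and the feature names below are all hypothetical; a real deployment would call the organization's own audited model instead.

```python
def toy_risk_engine(applicant):
    """Placeholder risk engine (an assumption): linear score clipped to [0, 1]."""
    score = 0.5 * applicant["debt_to_income"] + 0.1 * applicant["missed_payments"]
    return min(1.0, max(0.0, score))


def simulate_scenarios(risk_engine, baseline, scenarios):
    """Score the baseline and each what-if scenario with the same risk engine.

    The copilot never computes risk itself; it only changes inputs and
    reports the engine's answers.
    """
    results = {"baseline": risk_engine(baseline)}
    for name, overrides in scenarios.items():
        # Merge the overrides into a copy so the baseline is left untouched.
        results[name] = risk_engine({**baseline, **overrides})
    return results


if __name__ == "__main__":
    baseline = {"debt_to_income": 0.6, "missed_payments": 2}
    what_ifs = {
        "pay_down_debt": {"debt_to_income": 0.4},
        "clean_payment_history": {"missed_payments": 0},
    }
    for name, risk in simulate_scenarios(toy_risk_engine, baseline, what_ifs).items():
        print(f"{name}: {risk:.2f}")
```

Keeping the numbers inside the risk engine means the explanation shown to a customer or regulator can always be traced back to the model that owns the decision.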

Similarly, in marketing technology, traditional AI can handle segmentation and experiment design, while generative AI personalizes content within the constraints of brand guidelines and regulatory requirements. Allowing generative AI to create content for sensitive sectors, such as health or finance, without oversight can lead to severe regulatory repercussions.
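One simple form of oversight is an automated check that runs before generated copy is published, with anything that fails sent to a human reviewer. The sketch below is only an illustration: the function name `check_copy`, the banned phrase, and the disclaimer text are hypothetical examples, not actual brand or regulatory rules.

```python
def check_copy(text, banned_phrases, required_disclaimer):
    """Return a list of rule violations found in generated marketing copy.

    An empty list means the copy passed these (deliberately simple) checks;
    a non-empty list means it should go to human review, not to publication.
    """
    violations = []
    lowered = text.lower()
    for phrase in banned_phrases:
        if phrase.lower() in lowered:
            violations.append(f"banned phrase: {phrase}")
    if required_disclaimer.lower() not in lowered:
        violations.append("missing disclaimer")
    return violations


if __name__ == "__main__":
    # Hypothetical rules for a financial product campaign.
    banned = ["guaranteed returns"]
    disclaimer = "Capital at risk."
    draft = "Grow your savings with guaranteed returns!"
    print(check_copy(draft, banned, disclaimer))
```

Substring matching of this kind catches only the most obvious problems, so it complements human review and does not replace it.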

Infrastructure Challenges and Solutions

For organizations interested in this integrated approach, infrastructure priorities must shift significantly. Reliable data pipelines, multi-model routing, and evaluation mechanisms must take precedence over merely deploying LLM endpoints. Feature stores, labeling systems, and governance for predictive models are essential; without them, AI implementations risk becoming ineffective.

If you’re a CIO, CFO, or CMO, a first step is to map out critical decisions—like credit assessments and pricing strategies—and identify which models should own these decisions. The conversation layer should be treated separately, with LLMs acting as orchestration and user experience facilitators rather than core decision-making units. Investments should focus on the foundational elements, including data quality and monitoring systems, that support both generative and predictive AI.
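The mapping exercise can be made concrete with a small registry that records which model owns each decision and routes requests accordingly. This is a sketch under stated assumptions: `DecisionRegistry`, the decision names, the model names, and the lambda handlers are all hypothetical placeholders for real, governed models.

```python
class DecisionRegistry:
    """Maps each critical decision to the single model that owns it."""

    def __init__(self):
        self._owners = {}

    def register(self, decision, model_name, handler):
        """Assign an owner; a decision may have only one owning model."""
        if decision in self._owners:
            raise ValueError(f"decision {decision!r} already has an owner")
        self._owners[decision] = (model_name, handler)

    def route(self, decision, payload):
        """Send the request to the owning model, or fail loudly if none exists."""
        if decision not in self._owners:
            raise LookupError(f"no model owns decision {decision!r}; escalate to a human")
        model_name, handler = self._owners[decision]
        return {"decision": decision, "owner": model_name, "result": handler(payload)}


if __name__ == "__main__":
    registry = DecisionRegistry()
    # Hypothetical owners; the handlers stand in for calls to real models.
    registry.register("credit_assessment", "credit_risk_gbm_v3",
                      lambda p: p["score"] >= 650)
    registry.register("pricing", "price_elasticity_v1",
                      lambda p: round(p["base_price"] * 0.9, 2))

    print(registry.route("credit_assessment", {"score": 700}))
    print(registry.route("pricing", {"base_price": 100.0}))
```

In this arrangement the conversation layer would call `route` and present the result; a request for a decision with no registered owner raises an error and goes to a person instead of being answered by the LLM.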

In summary, while generative AI offers remarkable conversational capabilities, traditional AI provides the necessary decision-making framework. For systems that involve real financial, customer, or clinical risks, a harmonious integration of these technologies is imperative for building systems you can trust.
