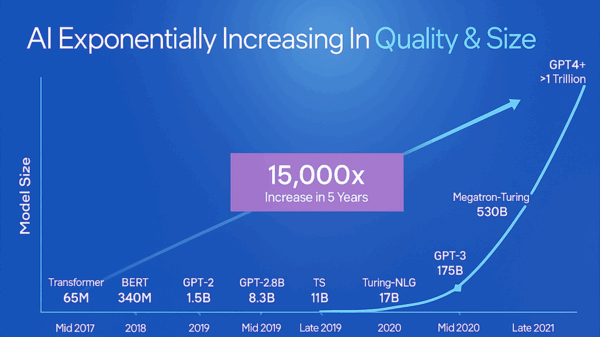

The November 19 hearing held by the Energy & Commerce Oversight and Investigations Subcommittee was a stark reminder of the human toll of regulatory inaction on artificial intelligence (AI) technologies, particularly chatbots. Despite a series of alarming incidents involving AI, companies continue to operate with minimal safety standards and oversight. This lack of regulation is unprecedented: no other industry enjoys such unrestrained freedom to potentially jeopardize public safety.

On the same day as the hearing, House Republican leadership proposed a controversial plan to incorporate federal preemption into the National Defense Authorization Act (NDAA). This move would effectively eliminate nearly all state-level regulations on AI. Additionally, President Donald Trump is reportedly weighing an executive order that would empower the federal government to regulate AI unilaterally.

States including Utah, Texas, and California have already enacted critical AI regulations that federal preemption would significantly undermine. Citizens in these states deserve better governance. If Congress continues to hesitate in establishing meaningful protections for families, preventing states from taking the initiative in this crucial area is both undemocratic and perilous.

The Risks of Regulatory Inaction

Federal preemption is troubling for three main reasons. First, it severely restricts states’ ability to implement sensible regulations that safeguard our children, communities, and jobs from the excesses of large tech companies. Trusting Big Tech to address the concerns of parents and workers is misguided, especially considering its historical tendency to prioritize quick profits, often at the expense of safety. The prevailing business model of “move fast and break things” underscores this risk.

Second, blocking AI regulation contradicts public sentiment. Numerous polls indicate widespread support for reasonable AI safeguards across the political spectrum, with more than 70% of both Republicans and Democrats favoring robust regulations. This was evident in July, when the Senate overwhelmingly rejected preemption by a vote of 99 to 1, with even the senator who proposed it reversing course. Given this strong public backing, why persist with such an unpopular policy?

Third, the last-minute inclusion of a preemption clause in the NDAA undermines the democratic process, as the provision has not undergone proper debate within Congress. The American populace deserves strong, reasonable AI safeguards. Trump’s aim to establish a unified federal standard that ensures public safety while promoting innovation is commendable, yet it should be pursued through established legislative channels rather than through rushed, backdoor methods. Such maneuvers risk putting our troops in jeopardy by linking military funding to Big Tech’s desire to evade regulation.

The Case Against Preemption

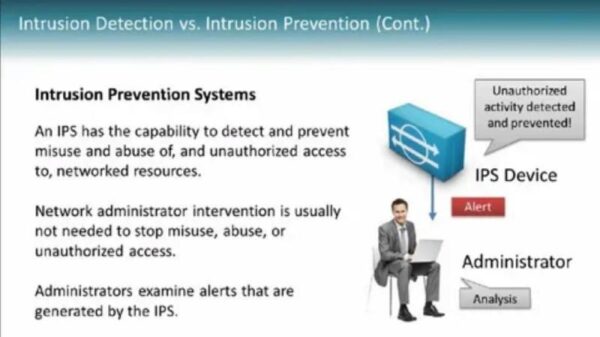

Proponents of federal preemption argue that a singular federal law would prevent a “patchwork” of regulations across states. However, this argument loses credibility when one considers that there is currently no comprehensive federal law governing AI. Instead, preemption would simply grant Big Tech the freedom to deploy potentially harmful AI systems rapidly, prioritizing profit over public safety.

Advocates also claim that regulation could stifle innovation, but this stance is unfounded. Industries such as aviation and pharmaceuticals have thrived while adhering to stringent safety standards. The U.S. remains a global leader in innovation, as competition drives progress while regulatory frameworks ensure public safety. To exempt the AI sector from these norms equates to corporate welfare and should be treated as such.

Recent incidents underscore the urgent need for regulation. Internal policy documents from Meta revealed a shocking acceptance of chatbots engaging children in inappropriate conversations. Families are currently suing OpenAI, the maker of ChatGPT, alleging that the chatbot’s interactions were linked to tragic outcomes for their children. Moreover, Kumma the Bear, an AI-powered toy from FoloToy built on OpenAI’s technology, was pulled from sale after it provided alarming suggestions on sensitive topics. It is clear that the demand for AI regulation is not just popular but essential for public safety.

The pressing question remains: why do some lawmakers continue to resist these necessary regulatory measures?
