In a strategic move to enhance AI chip efficiency, Huawei has unveiled Flex:ai, an open-source software platform aimed at improving processing utilization by approximately 30%. This initiative comes amidst ongoing challenges faced by Chinese companies in accessing advanced semiconductor technology due to U.S. trade restrictions.

Revolutionizing AI Chip Management

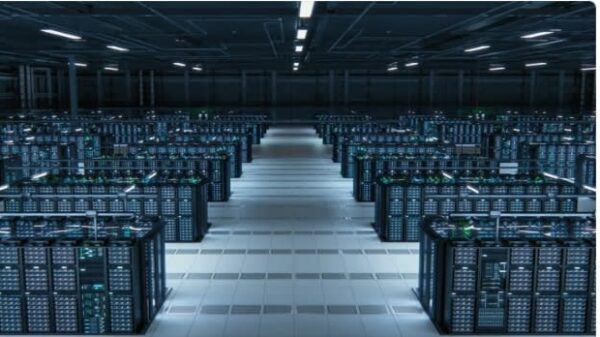

Flex:ai leverages Kubernetes orchestration to manage workloads across GPUs, NPUs, and other accelerators effectively. This innovative tool allows a single physical card to be divided into multiple virtual computing units, promoting more flexible and efficient usage of hardware resources.

Huawei’s claim of a roughly 30% improvement in utilization has not yet been independently validated. The platform will be made available through Huawei’s ModelEngine developer community, where developers and researchers can contribute to and build on the technology.

This development aligns with a broader trend among Chinese tech firms striving to create software-driven solutions that maximize performance, especially given current restrictions on the procurement of cutting-edge chips.

Collaboration with Leading Universities

Huawei’s collaboration with researchers from Shanghai Jiao Tong University, Xi’an Jiaotong University, and Xiamen University has been pivotal in crafting the core framework of Flex:ai. This partnership reflects a growing trend towards combining corporate and academic expertise in AI.

Global competitors already offer comparable tools: Nvidia acquired Run:ai in 2024 for its AI workload management software. Huawei’s Flex:ai aims to offer a domestic alternative, giving Chinese developers access to advanced AI chip orchestration without relying on foreign technologies.

Optimizing Hardware Utilization

One of the standout features of Flex:ai is its ability to partition a single processing card into multiple virtual units. Partitioning does not add raw compute, but it raises the share of each card’s capacity that does useful work. Researchers and companies can run several experiments concurrently on a single GPU or NPU, reducing idle hardware and improving overall throughput.
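
The effect of partitioning can be illustrated with a small sketch. This is not Flex:ai's actual algorithm, which the announcement does not describe; it assumes a hypothetical model in which each card is split into ten equal slices and jobs are placed first-fit. The `Card` class, the `place` function, and the job sizes are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    """One physical accelerator split into equal virtual slices (hypothetical model)."""
    name: str
    slices: int = 10          # assumed slice count per card, not a Flex:ai figure
    used: int = 0
    jobs: list = field(default_factory=list)

    def free(self) -> int:
        return self.slices - self.used


def place(jobs: dict, cards: list) -> dict:
    """First-fit placement: each job goes on the first card with enough free slices."""
    placement = {}
    for job, need in jobs.items():
        for card in cards:
            if card.free() >= need:
                card.used += need
                card.jobs.append(job)
                placement[job] = card.name
                break
        else:
            placement[job] = None  # no card had room for this job
    return placement


# Four experiments, each needing only part of a card (illustrative sizes).
jobs = {"exp-a": 3, "exp-b": 4, "exp-c": 2, "exp-d": 5}
cards = [Card("card-0"), Card("card-1")]
placement = place(jobs, cards)

total_slices = sum(jobs.values())
shared_util = total_slices / sum(c.slices for c in cards if c.used)
# Without partitioning, every job would occupy a whole card of its own.
whole_card_util = total_slices / (len(jobs) * 10)

print(placement)
print(f"shared: {shared_util:.0%}, one job per card: {whole_card_util:.0%}")
```

In this toy setup the four jobs fit on two cards instead of four, so the same work occupies half the hardware. Real gains depend on job sizes, memory isolation, and scheduling overhead, none of which this sketch models.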

Using Kubernetes for dynamic resource management, Flex:ai distributes workloads efficiently across available hardware, aligning with global trends in cloud and AI infrastructure where software orchestration is critical for maximizing chip utilization.
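
In Kubernetes, accelerators are typically exposed to the scheduler as extended resources that a pod requests in whole-number quantities. The sketch below builds a pod manifest that asks for three slices of a card. The resource name `example.com/accel-slice`, the image, and the helper `pod_manifest` are hypothetical placeholders; the announcement does not say what resource names Flex:ai registers.

```python
import json


def pod_manifest(name: str, image: str, slices: int,
                 resource: str = "example.com/accel-slice") -> dict:
    """Build a Pod manifest requesting `slices` units of a hypothetical extended resource."""
    if slices < 1:
        raise ValueError("extended resources are requested in whole units of at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "worker",
                "image": image,
                # The scheduler only places the pod on a node that advertises
                # at least this many free units of the named resource.
                "resources": {"limits": {resource: str(slices)}},
            }],
        },
    }


manifest = pod_manifest("exp-a", "registry.example.com/train:latest", 3)
print(json.dumps(manifest, indent=2))
```

Expressing slices as countable units lets the stock Kubernetes scheduler handle placement, while a device plugin or similar component on each node would be responsible for carving up the physical card.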

Strategic Response to Supply Chain Challenges

The launch of Flex:ai comes in response to the ongoing difficulties Chinese companies face in acquiring advanced chips. Reports indicate that Huawei’s Ascend AI chips still rely on components from overseas suppliers, including TSMC, Samsung, and SK Hynix, despite U.S. export restrictions.

By prioritizing software optimization, Huawei aims to extract maximum performance from existing hardware and so work around supply chain limitations. The company is also scaling up its AI chip production, with plans to double the output of its flagship Ascend 910C chips by 2026, targeting up to 1.6 million dies. Flex:ai is intended to help Chinese tech firms, including major players like Alibaba and DeepSeek, use these chips efficiently across a range of applications.

As Huawei positions itself within a constrained market, the introduction of Flex:ai might not only signify a technological leap for Chinese AI capabilities but also reflect a strategic pivot towards self-reliance in the face of global trade challenges.
