Since OpenAI launched ChatGPT in late 2022, the chatbot has set the standard for generative AI and captured a significant share of the market. However, a recent study by British firm Prolific ranks ChatGPT eighth among leading AI models, behind competitors such as Gemini, Grok, Claude, DeepSeek, and Mistral.

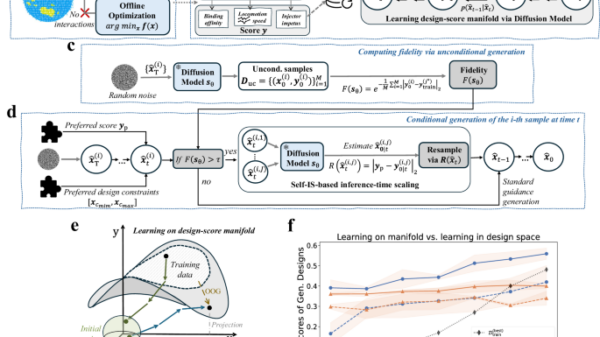

Prolific developed a new benchmark, called “Humaine”, to evaluate AI models against standards of human interaction rather than purely technical metrics. The company argues that current evaluation methods focus on data that matters more to researchers than to everyday users. “Current evaluation is heavily skewed towards metrics that are meaningful to researchers but opaque to everyday users,” its blog post states, pointing to a disconnect between what models are optimized for and what users actually experience. Similar concerns surround human-preference leaderboards, which can suffer from sample bias and often over-represent tech-savvy users.

ChatGPT’s Ranking and Market Context

According to the Humaine study, the models ranked in its top ten include:

- Gemini 2.5 Pro (Google)

- DeepSeek v3 (DeepSeek)

- Magistral Medium (Mistral)

- Gemini 2.5 Flash (Google)

- DeepSeek R1 (DeepSeek)

- Gemini 2.0 Flash (Google)

- ChatGPT (OpenAI)

The ranking is especially striking for OpenAI, whose model now sits behind not only Google’s Gemini models but also offerings from DeepSeek and Mistral. The study was published in September, before Google released Gemini 3 Pro and xAI released Grok 4.1, so those newer models may change future rankings.

Gemini 2.5 Pro’s place at the top, despite steady advances from competitors, comes as little surprise: the model has consistently led various performance benchmarks since its introduction. OpenAI’s absence from the top five, however, raises questions about its standing in an increasingly competitive landscape.

Addressing Evaluation Gaps

The Prolific study highlights the need for more rigorous and relevant methods of evaluating AI models. By building automated quality monitoring into the Humaine benchmark, Prolific aims to ensure that the feedback and interactions it collects genuinely reflect user preferences rather than being skewed by sample bias.

As AI technology evolves, understanding how models perform under conditions that mimic real human interaction becomes essential. The study’s findings could help developers refine their models to better meet user expectations, narrowing the gap between technical performance and user satisfaction.

In summary, while OpenAI’s ChatGPT remains a significant player in generative AI, its eighth-place ranking in this study suggests a shift in the competitive landscape. As companies like Google and DeepSeek continue to innovate, evaluating AI through the lens of user interaction will likely matter more and more for staying relevant in the market.
