Mark Zuckerberg has recently suggested that the development of superintelligent artificial intelligence (AI) is approaching reality, positing that this evolution will lead to innovations currently unimaginable. Meanwhile, Dario Amodei anticipates that powerful AI could emerge as early as 2026, potentially smarter than a Nobel Prize winner across various fields, claiming advancements might include the doubling of human lifespans or even achieving “escape velocity” from death itself. Sam Altman, another prominent figure in the industry, echoed this sentiment, asserting that the capability to build artificial general intelligence (AGI) is now within reach.

However, skepticism arises when evaluating the actual performance of current AI systems, such as OpenAI’s ChatGPT, Anthropic’s Claude, and Google’s Gemini. These technologies predominantly function as large language models (LLMs), which rely on vast linguistic datasets to identify correlations between words and produce responses to prompts. For all their complexity, these generative systems fundamentally mimic language rather than embody intelligence.

Current neuroscience suggests that human cognition largely operates independently of language, which raises doubts about whether the advancement of LLMs will yield an intelligence that matches or surpasses human capability. Humans use language to express their reasoning and make abstractions; however, this does not mean that language is the essence of thought. Recognizing this distinction is crucial for separating scientific fact from the exaggerated claims made by enthusiastic tech leaders.

The prevalent narrative holds that creating AGI is merely a scaling challenge: amass enough data and apply ever more computational power, most of it supplied by Nvidia chips. Yet this perspective is scientifically flawed. LLMs are tools for emulating the functions of language; they lack the cognitive processes of reasoning and thought inherent to human intelligence.

A commentary published in the journal Nature last year by scientists including Evelina Fedorenko of MIT challenges the notion that language dictates our ability to think. The authors argue that language is a cultural tool designed for communication, not a foundation for cognitive ability. They highlight two primary assertions: that language serves primarily as a means for sharing thoughts, and that it has evolved to facilitate effective communication.

Empirical evidence supports the idea that cognitive functions can persist even without language. For instance, functional magnetic resonance imaging (fMRI) has shown distinct brain networks activated during various mental tasks, illustrating that reasoning and problem-solving engage neural pathways separate from language processing. Additionally, studies of individuals who have suffered language impairments reveal that they can still engage in reasoning and problem-solving, further reinforcing the distinction between language and thought.

Cognitive scientist Alison Gopnik notes that infants learn about the world through exploration and experimentation, suggesting that thought processes exist prior to linguistic capabilities. This leads to a broader understanding that language enhances cognition but does not define it.

The Nature article also emphasizes language’s role as an efficient communication tool. It posits that the evolution of human languages reflects a design for ease of learning and robustness, reinforcing our capability to share knowledge across generations. As such, language acts as a “cognitive gadget,” improving our capacity to learn collectively, rather than being the source of intelligence itself.

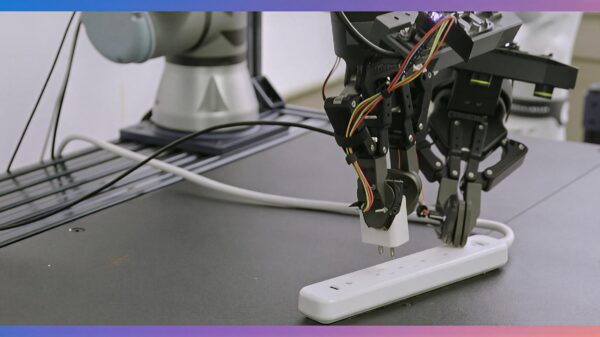

Critics within the AI community, including Yann LeCun, a Turing Award recipient, are increasingly wary of LLMs. LeCun recently transitioned from Meta to establish a startup focusing on “world models”—AI systems capable of understanding physical realities, engaging in reasoning, and planning complex actions. This shift underscores a growing consensus that LLMs alone may not suffice to achieve AGI.

AI researcher Yoshua Bengio, former Google CEO Eric Schmidt, and others advocate for a redefined understanding of AGI, suggesting it should encompass the cognitive versatility of a well-educated adult rather than a one-dimensional model of intelligence. They propose that intelligence be viewed as a complex amalgam of various capacities, such as speed, knowledge, and reasoning.

As discussions progress, there remains significant uncertainty about whether an AI system can genuinely replicate the cognitive leaps of humanity. Even if a system could be built that excels at a range of cognitive tasks, that would not guarantee transformative discoveries akin to those made by humans.

The philosophical underpinnings associated with scientific innovation, such as those articulated by Thomas Kuhn, suggest that significant paradigm shifts arise not solely from empirical advancements, but from conceptual breakthroughs that redefine our understanding of the world. AI models, while potentially capable of sophisticated data analysis, lack the impetus to question or innovate beyond their training data.

Consequently, AI may remain confined to a repository of existing knowledge, recycling and remixing human-generated concepts without the ability to forge new paradigms. As a result, the potential for AI to lead transformative discoveries appears limited, with human thought and reasoning continuing to occupy the forefront of scientific and creative advancement.

As the dialogue surrounding AI progresses, the distinction between human cognition and machine learning remains pivotal. While advancements in AI present intriguing possibilities, the nuances of thought and creativity—characteristic of human intelligence—underscore the complexities that remain unreplicated in artificial systems.
