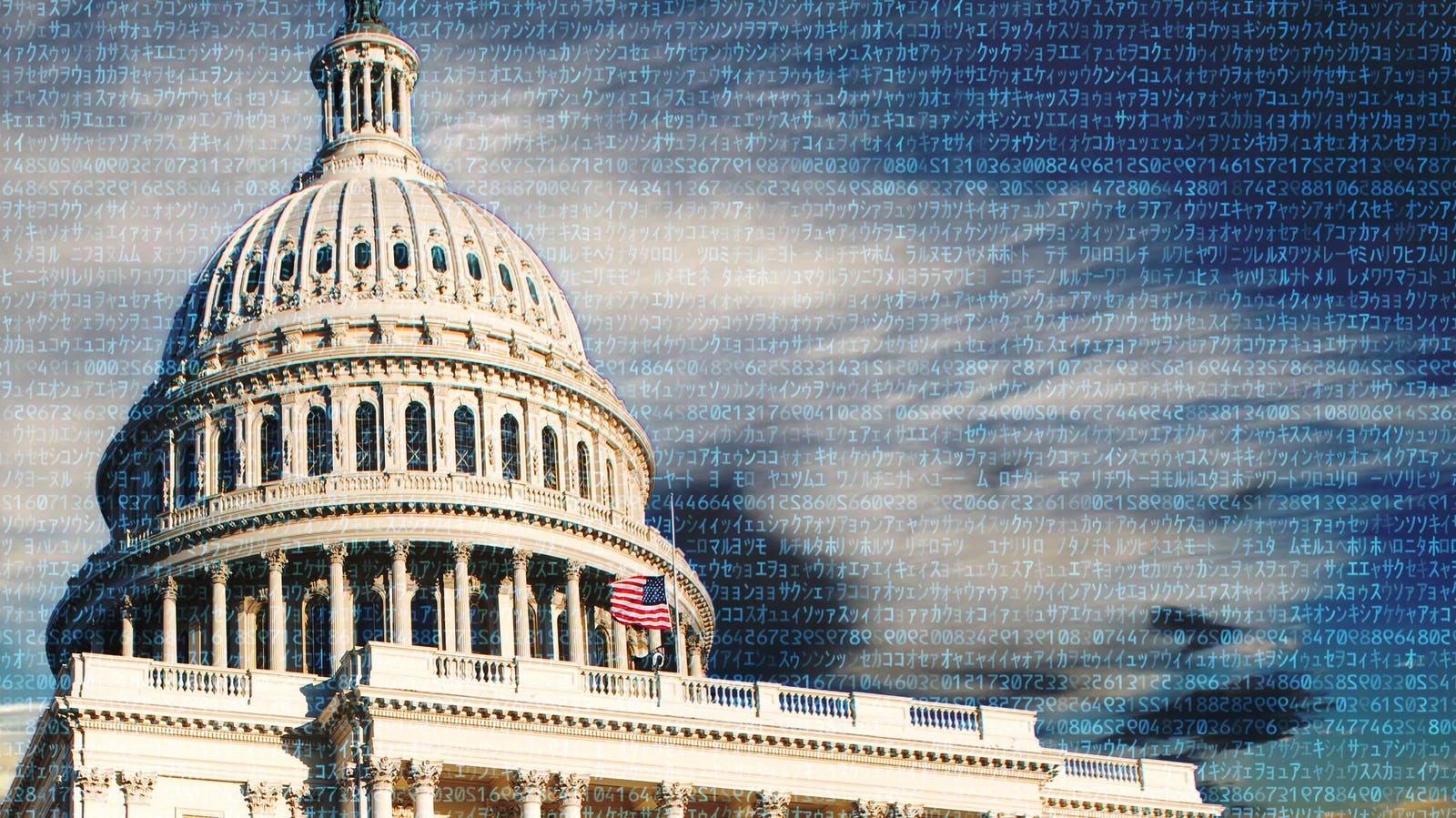

Artificial intelligence is at the center of a fierce political struggle, with a $150 million battle heating up over federal preemption of state AI regulations. As Congress prepares to deliberate on potential preemption language within the National Defense Authorization Act, the White House is considering an executive order that could override state-level regulations. Two main coalitions are vying for influence: one backed by significant Silicon Valley investors pushing for a unified federal framework, and another funded by safety-conscious donor networks advocating for the preservation of state authority in the absence of comprehensive national standards. At its core, the clash is over who writes the rules, who enforces them, and how much room states have to act.

The initiative advocating against federal preemption, known as Public First, is led by former Representatives Chris Stewart (R-Utah) and Brad Carson (D-Okla.). The group has established two affiliated Super PACs aimed at supporting candidates who endorse stronger AI oversight. Stewart emphasized the initiative’s objective to guarantee “meaningful oversight of the most powerful technology ever created.” Public First plans to raise at least $50 million for the 2026 election cycle.

Beyond funding candidates, Public First’s nonprofit arm promotes stronger export controls on advanced chips and transparency requirements for AI labs. The organization opposes federal preemption that could stifle state-level progress unless accompanied by meaningful national safeguards. Public First cites a survey indicating that 97% of Americans favor AI safety regulations.

Parallel to this effort, Carson co-founded the think tank Americans for Responsible Innovation (ARI), which has emerged as a leading public-interest organization in the AI governance arena. ARI’s leadership includes prominent figures such as computer scientist Stuart Russell from the University of California, Berkeley, and economist Erik Brynjolfsson of the Stanford Institute for Human-Centered AI. The organization focuses on protecting against AI-enabled scams, threats to minors, and national security risks, while advocating for increased funding for the National Institute of Standards and Technology (NIST).

ARI positions itself as independent from industry influence, relying on its founders and donors committed to addressing long-term AI risks. Critics argue that this coalition’s substantial funding may promote overly restrictive regulations. Unlike the pro-industry coalition, ARI’s donor base includes investors wary of long-term risks and employees from safety-oriented labs like Anthropic. Reports have indicated that Anthropic’s executives are also exploring political engagement to counter the opposition.

This coalition’s strategy entails pursuing state-level measures while Congress remains gridlocked. The RAISE Act, introduced by New York Assemblymember Alex Bores (D), exemplifies this approach; it mandates safety disclosures and risk assessments, with fines up to $30 million for noncompliance. Bores’s campaign has already become a focal point for those opposed to AI regulation, and he is the first target of one of the pro-industry Super PACs.

In contrast, the coalition defending federal preemption, operating under the banner of Leading the Future (LTF), launched in August with a strategy that includes both federal and state Super PACs supporting candidates who favor innovation over regulation. Led by GOP strategist Zac Moffatt and Democratic operative Josh Vlasto, LTF argues that a fragmented state regulatory landscape could jeopardize American job growth and AI leadership. They assert that a unified federal law is critical for maintaining competitiveness.

The LTF initiative began with $100 million in funding from Silicon Valley investors, including Marc Andreessen and OpenAI co-founder Greg Brockman. Their objective is to elect candidates aligned with their vision of a national framework that preempts state regulations, starting by targeting Bores, whose campaign represents a new electoral strategy shaped by the AI regulation debate.

With the backing of major tech firms like Meta, which has launched its own state-focused Super PAC to support pro-innovation candidates, the LTF coalition is amplifying its efforts. The America First Policy Institute (AFPI), associated with former President Trump’s political movement, has also introduced an AI agenda that focuses on economic growth through widespread AI adoption and minimizing regulatory overhead.

As these two coalitions sharpen their positions, the stakes are escalating. The urgency for federal action is palpable, with Congress approaching a critical decision on preemption language within the National Defense Authorization Act and potential executive orders looming. With voter concerns about AI’s economic impact and job displacement rising, the outcome of this political battle could have lasting implications for the future of AI governance in the United States.
