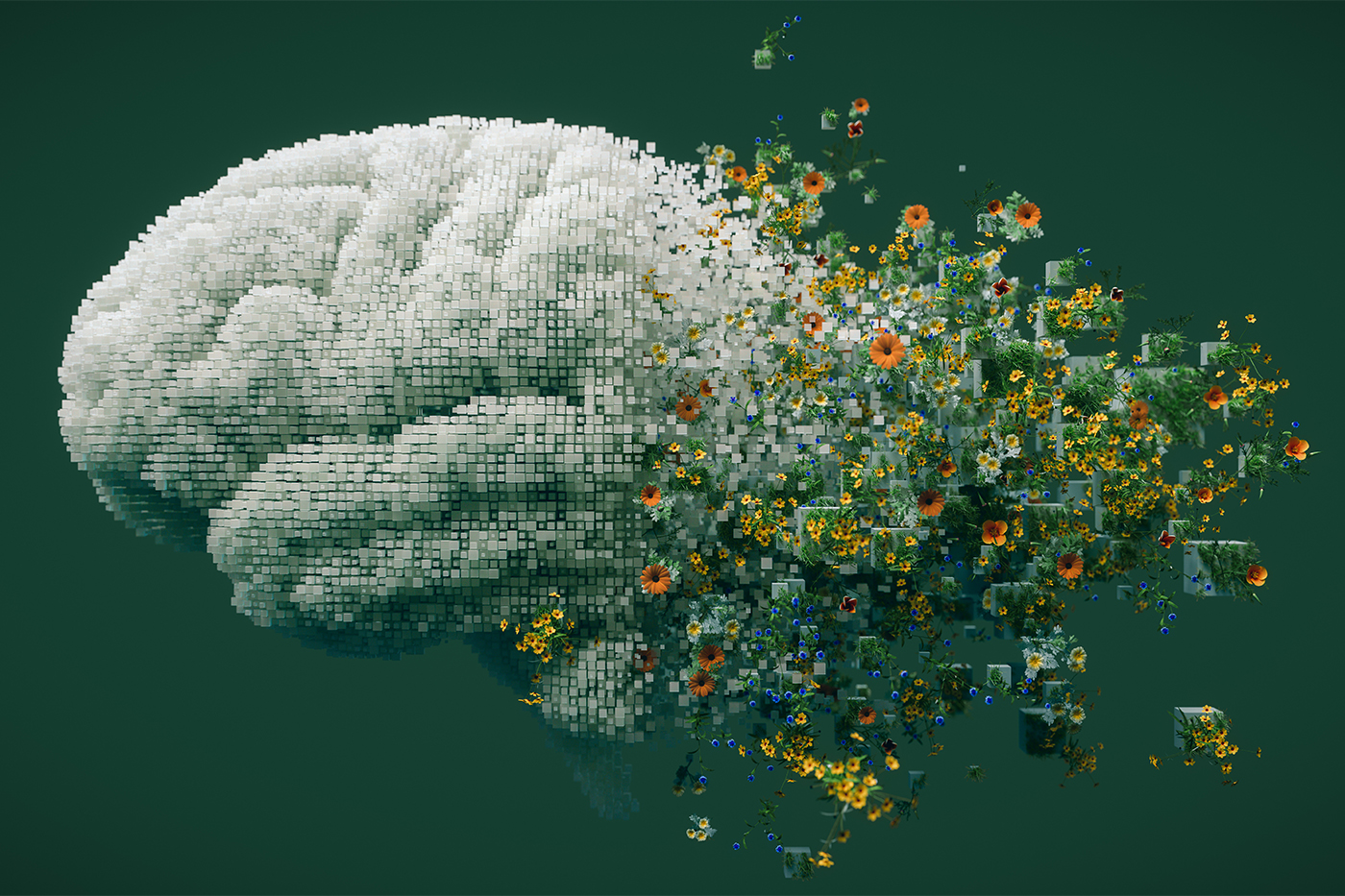

The newly established Center for Responsible Artificial Intelligence and Governance (CRAIG) aims to enhance the ethical deployment of artificial intelligence (AI) by merging academic research with industry expertise. Funded by the National Science Foundation and led by a team of experts from Northeastern University and three partner universities, CRAIG is positioned to address significant challenges in the AI landscape, from privacy concerns to regulatory issues.

John Basl, an associate professor of philosophy at Northeastern and a member of CRAIG, emphasized that companies often lack the infrastructure needed to implement responsible AI and instead typically focus on complying with existing regulations. The initiative seeks to close this gap by having industry partners identify specific challenges, with researchers developing projects tailored to those needs. “This will be a call to arms to get that done,” Basl said.

In addition to Northeastern, the collaborative effort involves faculty from Ohio State University, Baylor University, and Rutgers University. Industry participants include major players like Meta, Nationwide, Honda Research, Cisco, Worthington Steel, and Bread Financial, with additional partnerships expected as CRAIG develops.

Cansu Canca, director of Northeastern’s Responsible AI Practice, noted that responsible AI practices are often the first element to be sacrificed in organizational projects. CRAIG seeks to balance this tension by encouraging private industry partners to pinpoint their specific challenges. “With this setup, you can claim with confidence that the research is really done with the same academic rigor that we hold ourselves subject to in academia,” Canca stated, underscoring the integrity of the research process.

Homogenization in AI decision-making is among the key issues CRAIG researchers aim to address. Basl illustrated this point by highlighting how a single AI model could inadvertently bias hiring practices. “Hiring managers might not like people with purple shoes, but some other hiring managers might,” he explained, cautioning against a uniform decision-making framework that overlooks diverse perspectives.

The unique combination of academic expertise and industry reach that CRAIG offers is a notable departure from traditional approaches to responsible AI. Canca pointed out that while companies often seek straightforward solutions during the early stages of responsible AI implementation, academia can provide more nuanced insights into advanced applications, furthering the field. “Having a center, a structure, a mandate that really focuses on responsible AI, that allows you to do novel work and allows you to connect this novel work to actual industry applications, get feedback from the application, that’s fantastic,” she said.

The collaboration is not merely theoretical; it has practical implications for the future workforce. Over the next five years, CRAIG plans to support 30 Ph.D. researchers and to offer co-op opportunities and summer programs to hundreds of additional students. This investment in talent is expected to cultivate a new generation of professionals equipped to address critical issues surrounding responsible AI.

Canca expressed hope for the initiative’s expansion, envisioning a broader industry group and research community that can establish standards and develop tools for responsible AI. “The dream would be to have this really grow, to really create a broader industry group and a broader researcher community so that we can set standards,” she said, emphasizing the need for cohesive methodologies in an ever-evolving field.
