In an effort to enhance the speed and precision of drug discovery, Receptor.AI, led by CEO and founder Alan Nafiiev, is leveraging large language models (LLMs) to transform unstructured scientific literature into structured data. This innovative approach allows researchers to accelerate the identification of binding sites critical for drug development, addressing significant bottlenecks in the field.

In this approach, LLMs systematically extract binding-site annotations from a large body of publications. Aligning these annotations with protein structures refines geometry-based predictions into binding pockets backed by experimental evidence. Benchmarks across diverse proteins highlight the high accuracy and specificity of this method, demonstrating that evidence derived from text can effectively guide the prioritization of pockets. In this framework, LLMs complement traditional geometric and physics-based methods rather than replace them.

Reconciling computational predictions with experimental evidence is a well-known hurdle in structure-based drug discovery. Docking methods, for instance, often yield multiple plausible ligand poses, of which only a fraction correspond to actual binding modes. Residue contacts reported in scientific publications can act as filters, helping researchers identify which poses are biologically relevant.
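The pose-filtering idea can be sketched in a few lines of Python. This is a minimal illustration, not Receptor.AI's implementation: the `Pose` class, the `literature_support` score, and the `min_support` threshold are all hypothetical names and choices, and lower docking scores are assumed to be better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """One docked ligand pose (hypothetical structure for illustration)."""
    name: str
    contacts: frozenset  # residue labels in contact with the ligand
    score: float         # docking score; lower is assumed to be better


def literature_support(pose, reported):
    """Fraction of literature-reported residues that the pose contacts."""
    if not reported:
        return 0.0
    return len(pose.contacts & reported) / len(reported)


def filter_poses(poses, reported, min_support=0.5):
    """Keep poses with enough literature support, best-supported first.

    Ties in support are broken by docking score (lower first).
    """
    kept = [p for p in poses if literature_support(p, reported) >= min_support]
    return sorted(kept, key=lambda p: (-literature_support(p, reported), p.score))
```

A pose that scores well in docking but touches none of the reported residues is dropped, while a slightly worse-scoring pose that matches the published contacts is ranked first.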

Another complicating factor is that molecular dynamics simulations reveal transient or cryptic pockets whose functional significance is often uncertain. Systematically mining literature reports on modulators and functional assays can help distinguish biologically meaningful transient sites from simulation artifacts.

Resistance mutations present another layer of complexity. Single substitutions in kinases and other clinical targets can significantly alter binding pockets, leading to therapeutic failures. Although these effects are extensively documented in oncology literature, they often go underutilized in modeling workflows. While LLMs do not directly remodel binding sites, they can highlight literature reports on resistance-associated variants, thereby guiding researchers toward critical modifications to consider during docking and design studies.
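As a rough illustration of how reported variants might be cross-checked against a pocket, the sketch below uses a simple regular expression as a stand-in for the LLM extraction step. The function name `variants_in_pocket` and the idea of representing a pocket as a set of residue positions are assumptions made for this example.

```python
import re

# Matches single-substitution notation such as "T790M":
# wild-type amino acid, position, mutant amino acid (one-letter codes).
AA = "ACDEFGHIKLMNPQRSTVWY"
MUTATION = re.compile(rf"\b([{AA}])(\d+)([{AA}])\b")


def variants_in_pocket(text, pocket_positions):
    """Return substitutions mentioned in `text` whose position lies in the pocket."""
    found = []
    for wild_type, position, mutant in MUTATION.findall(text):
        if int(position) in pocket_positions:
            found.append(f"{wild_type}{position}{mutant}")
    return found
```

Variants returned by such a check are only flags for human review; deciding how a substitution reshapes the pocket still requires structural modeling.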

The creation of benchmark datasets is vital for evaluating docking algorithms, and automating the extraction of residue-level annotations from literature can streamline this process. By replacing time-consuming, inconsistent manual curation with scalable, literature-driven datasets, researchers can enhance the reliability of their evaluations.

One critical application of LLMs in structure-based discovery is determining which of the many cavities predicted by geometry-based algorithms correspond to actual binding sites. Tools like Fpocket identify concave regions on protein surfaces but often generate more candidates than are biologically relevant. Traditionally, validation has meant combing through the literature to compile residue-level binding information scattered across multiple studies, a process that is slow and error-prone.

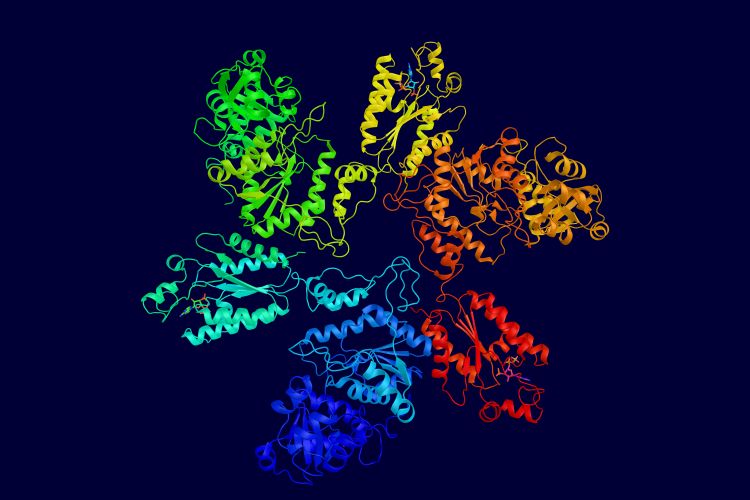

To overcome these challenges, Receptor.AI has developed a hybrid workflow that integrates geometric predictions with LLM-driven literature mining. Initially, Fpocket identifies potential cavities, after which an LLM scans publications to extract experimentally confirmed residues involved in ligand binding. These residues are then mapped onto the protein structure, aligned with Fpocket predictions, and used to filter or merge the geometric pockets. Ultimately, the retained cavities are refined into three-dimensional models, eliminating regions that lack residue support.
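The filtering step of such a workflow can be sketched as follows. This is an illustrative sketch under stated assumptions: pockets are represented as sets of `(chain, residue_number)` tuples already parsed from the geometric tool's output, the literature residues have already been extracted and mapped to the same numbering, and the `min_hits` threshold is a hypothetical parameter.

```python
def supported_pockets(pockets, literature_residues, min_hits=2):
    """Keep geometric pockets that contain enough literature-backed residues.

    pockets: dict mapping pocket id -> set of (chain, residue_number)
    literature_residues: set of (chain, residue_number) reported as binding
    Returns a dict mapping each retained pocket id to its supporting residues.
    """
    retained = {}
    for pocket_id, residues in pockets.items():
        hits = residues & literature_residues
        if len(hits) >= min_hits:
            retained[pocket_id] = hits
    return retained
```

Requiring more than one supporting residue is a design choice in this sketch: it reduces the chance that a pocket is retained only because it happens to border a single reported residue.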

This LLM-supported workflow transforms literature from a passive reference into a dynamic data source, ensuring that pocket definitions are both geometrically plausible and experimentally validated. This represents a significant advancement in integrating text mining into structural pipelines, thereby establishing a faster, more reliable foundation for downstream tasks such as docking, druggability assessment, and ligand design.

A key step in this workflow is converting residue lists drawn from scientific papers into spatial pocket models. When a literature-derived site matches one or more Fpocket cavities, either by residue identity or by spatial proximity, these cavities are merged into a single binding region. This merging is particularly important for large active sites, which geometry-only methods often fragment. For example, Fpocket typically divides kinase ATP-binding clefts into multiple sub-pockets, whereas the LLM-guided pipeline reconstructs them into a single, continuous catalytic site consistent with experimental evidence.
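The two matching criteria, residue identity and spatial proximity, can be illustrated with a short sketch. The data layout (a dict with `"residues"` and `"center"` per pocket), the function name, and the 8.0 Å cutoff are assumptions chosen for the example rather than details of the published pipeline.

```python
import math


def merge_matching_pockets(pockets, site_residues, site_coords, cutoff=8.0):
    """Merge cavities that match a literature-derived site.

    pockets: dict mapping pocket id -> {"residues": set, "center": (x, y, z)}
    site_residues: set of residue labels reported in the literature
    site_coords: list of (x, y, z) coordinates for those residues
    A pocket matches if it shares a residue with the site, or if its center
    lies within `cutoff` angstroms of any site residue coordinate.
    Returns (ids of merged pockets, union of their residues).
    """
    merged_ids = []
    merged_residues = set()
    for pocket_id, pocket in pockets.items():
        shares_residue = bool(pocket["residues"] & site_residues)
        near_site = any(
            math.dist(pocket["center"], xyz) <= cutoff for xyz in site_coords
        )
        if shares_residue or near_site:
            merged_ids.append(pocket_id)
            merged_residues |= pocket["residues"]
    return merged_ids, merged_residues
```

In this sketch, a sub-pocket that shares no residue with the reported site is still merged if it sits close enough to it, which is how a fragmented cleft would be stitched back together.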

As the number of protein structures rapidly grows due to advancements in crystallography, cryo-electron microscopy, and predictive tools like AlphaFold, the need for scalable annotation becomes increasingly urgent. LLMs provide a pathway to ensure that new structures are effectively linked to biologically validated binding sites, thereby enhancing the efficiency of drug discovery pipelines.

Overall, LLMs are emerging as a critical intermediary that connects published literature with structural bioinformatics. By enriching computational predictions with experimental evidence, they enable faster, more reliable decision-making. This innovation brings the field closer to a future where drug discovery processes are continually informed by, and can adapt to, the expanding corpus of biomedical knowledge.
