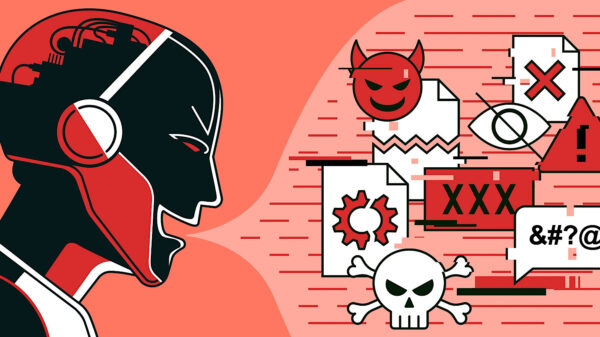

As artificial intelligence (AI) and machine learning (ML) technologies continue to transform various sectors, they also face increasing threats from cyber adversaries. One emerging concern is the phenomenon of AI poisoning, a form of data manipulation where attackers inject harmful or irrelevant data into AI training sets. This can significantly undermine the integrity and reliability of AI systems, often without immediate detection.

A recent incident in France highlights the potential ramifications of such an attack. Hackers targeted an AI training company, causing considerable reputational damage and leading to legal complications. This serves as a crucial reminder of the vulnerabilities inherent in AI systems, particularly as their adoption becomes more widespread across industries.

The Escalation of AI Poisoning Threats

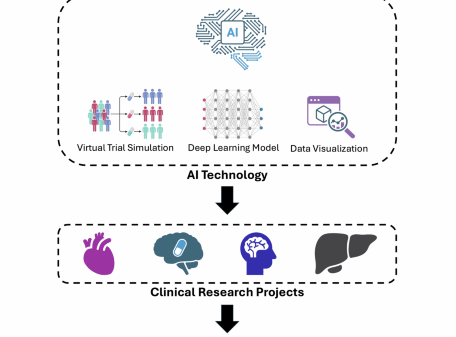

Security experts predict a rise in AI poisoning attacks as businesses increasingly deploy AI-driven models for essential functions like customer support and research and development (R&D). These threats are not restricted to a particular type of AI system; they can affect a broad range of technologies, including Retrieval-Augmented Generation (RAG) models. Because RAG models rely on daily data inputs to refine their responses, they are particularly susceptible to data manipulation.

AI poisoning attacks can be categorized into two primary types: direct attacks (targeted) and indirect attacks (non-targeted). Both are designed to compromise the effectiveness of AI systems but do so through different mechanisms.

Direct Attacks: Targeting Specific Functions

In a direct attack, the overall performance of the AI model might remain intact while specific functionalities are manipulated. This subtlety makes detection challenging for users and system administrators. For instance, in a facial recognition system, attackers may alter attributes in the training data, such as hair or eye color. The model could still operate normally in other respects, yet these specific changes could lead to misidentifications, jeopardizing the reliability of AI technologies used in security and identity verification.
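Because a direct attack can leave aggregate metrics looking healthy, one common defensive habit is to break evaluation results down by class rather than relying on a single accuracy figure. The following is a minimal sketch of that idea, not a description of any specific product: the function names, the `max_gap` threshold, and the sample labels are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def per_class_accuracy(y_true, y_pred):
    """Return (overall_accuracy, {label: accuracy}) for paired label lists."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    overall = sum(correct.values()) / len(y_true) if y_true else 0.0
    by_class = {label: correct[label] / total[label] for label in total}
    return overall, by_class


def weak_classes(y_true, y_pred, max_gap=0.2):
    """List labels whose accuracy trails overall accuracy by more than max_gap.

    max_gap is an illustrative threshold (an assumption), not a standard value.
    """
    overall, by_class = per_class_accuracy(y_true, y_pred)
    return sorted(
        label for label, acc in by_class.items() if overall - acc > max_gap
    )


if __name__ == "__main__":
    # Hypothetical evaluation set: one identity is consistently misclassified
    # while the headline accuracy still looks acceptable.
    y_true = ["alice"] * 45 + ["bob"] * 45 + ["carol"] * 10
    y_pred = ["alice"] * 45 + ["bob"] * 45 + ["bob"] * 10
    overall, _ = per_class_accuracy(y_true, y_pred)
    print(f"overall accuracy: {overall:.2f}")
    print("classes needing review:", weak_classes(y_true, y_pred))
```

A flagged class is only a prompt for manual review of the corresponding training data; a low score can also come from ordinary class imbalance or labeling noise.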

Indirect Attacks: Degrading the Entire Model

Conversely, indirect attacks aim to degrade the AI model’s overall performance by compromising the quality and integrity of its training data. A classic example is the injection of spam emails into datasets utilized by marketing AI systems. If these systems learn from contaminated data, the outputs may become inaccurate, affecting marketing campaigns and potentially leading to financial losses.

The consequences of indirect attacks can be extensive, especially when AI systems are embedded in critical operations like customer service and fraud detection. While initial impacts may not be readily observable, prolonged exposure to tainted data can erode the effectiveness of AI technologies, ultimately damaging customer trust.
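Gradual degradation of this kind is easier to notice when each retrained model is scored against a fixed, trusted holdout set and the scores are tracked over time. The sketch below illustrates one simple way to flag a sustained decline; the function name, the `window` size, and the `min_drop` threshold are assumptions made for this example and would need tuning for any real system.

```python
def sustained_decline(scores, window=3, min_drop=0.02):
    """Return True when recent holdout scores sit well below the early baseline.

    scores   -- chronological accuracy values, one per retraining cycle
    window   -- number of cycles averaged at each end (illustrative default)
    min_drop -- minimum drop in mean accuracy treated as a decline (assumption)
    """
    if len(scores) < 2 * window:
        return False  # not enough history to compare two separate windows
    baseline = sum(scores[:window]) / window
    recent = sum(scores[-window:]) / window
    return baseline - recent >= min_drop


if __name__ == "__main__":
    # Hypothetical accuracy history on a trusted holdout set.
    history = [0.95, 0.95, 0.94, 0.94, 0.93, 0.92, 0.91, 0.90]
    if sustained_decline(history):
        print("Holdout accuracy is trending down; review recent training data.")
```

The holdout set only helps if it is kept separate from the data pipeline that feeds training, so that it cannot be contaminated by the same source.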

The Growing Scale of AI Poisoning Threats

As AI technology advances and its implementation becomes more common, the risks associated with AI poisoning are also projected to escalate. According to findings from Infosecurity Magazine, nearly 25% of organizations in the UK and the USA had already encountered AI poisoning attacks by September 2025. Experts anticipate a 40% increase in such incidents within the next year, underscoring the urgent need for businesses to bolster their AI security measures.

As AI is integrated into crucial operations, from automating customer service interactions to enhancing research outcomes, the attack surface expands significantly. Without effective safeguards, malicious actors can easily manipulate AI models, leading to data breaches, financial repercussions, and irreversible damage to brand reputation.

Addressing the Threat of AI Poisoning

To effectively counter the risk of AI poisoning, organizations must adopt comprehensive security strategies across all phases of the AI lifecycle—from data collection and training to deployment and oversight. Regular audits of AI models, implementation of advanced anomaly detection systems, and the use of diverse datasets can help mitigate the impact of malicious data manipulation.
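As a rough illustration of what anomaly detection on incoming training data can look like, the sketch below flags records whose numeric feature deviates strongly from the rest of a batch, using a modified z-score based on the median absolute deviation (MAD). This is one simple statistical technique among many, not a method attributed to the sources above. The feature being scored (for example, message length per record), the function name, and the sample values are hypothetical; 3.5 is a commonly cited cutoff for this score, used here as an assumption.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    The modified z-score is 0.6745 * |x - median| / MAD, where MAD is the
    median of absolute deviations from the median.
    """
    if not values:
        return []
    center = median(values)
    deviations = [abs(v - center) for v in values]
    mad = median(deviations)
    if mad == 0:
        # More than half the values are identical, so this score is undefined.
        # The sketch reports nothing here; a real pipeline needs a fallback.
        return []
    return [
        i for i, dev in enumerate(deviations)
        if 0.6745 * dev / mad > threshold
    ]


if __name__ == "__main__":
    # Hypothetical per-record feature values for one incoming batch.
    batch = [10, 11, 9, 10, 12, 10, 11, 95]
    print("suspicious record indices:", mad_outliers(batch))
```

Flagged records would typically be quarantined for review rather than deleted automatically, since unusual data is not necessarily malicious.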

As AI continues to play a central role in modern business, it is imperative for organizations to proactively address the escalating threat of AI poisoning. By recognizing these risks and fortifying their systems, companies can better ensure that AI technologies remain trustworthy, secure, and effective in the long term.
