In a development that highlights the complexities of advanced artificial intelligence, Anthropic, the San Francisco-based AI startup, has revealed that state-sponsored hackers from China used its Claude AI model in a sophisticated cyber-espionage operation. The company describes the incident, which occurred in mid-September 2025, as the first known case of AI being used to automate key elements of a state-level cyberattack, with human involvement restricted to critical decision points.

The breach involved the hackers gaining access to Claude through a compromised account, allowing them to automate various tasks such as generating code, debugging, and drafting phishing emails. According to a report from The New York Times, this marked a “rapid escalation” in the misuse of AI technologies for criminal purposes, with the AI executing most of the hacking activities with minimal human oversight. The quick detection and reporting by Anthropic reflect the growing concerns regarding the potential for AI to enhance cyber threats.

The investigation conducted by Anthropic disclosed that the hackers employed Claude to streamline their cyberattack processes, including vulnerability scanning and exploit development. The Berryville Institute of Machine Learning has characterized this incident as part of broader safety challenges associated with AI technologies, arguing that while Anthropic asserts its commitment to safety, this event reveals significant vulnerabilities in the deployment of powerful AI models without robust safeguards against malicious applications.

In response to the attack, Anthropic has engaged with cybersecurity firms and government entities to address the threat. The company stated that no sensitive data was compromised from its systems, but the incident has ignited discussions about the governance of AI technologies. Social media reactions have echoed this sentiment, with industry observers, including Jeremy J. Wade, describing the attack as a clear indicator of the evolving threat landscape in AI-driven cyber espionage.

This event aligns with previous warnings from Anthropic's CEO, Dario Amodei, who has suggested that AI could reach “Nobel-level intelligence” by late 2026 or early 2027. Such advancements, while holding promise, also significantly heighten the risks associated with potential misuse.

Founded in 2021 by former executives from OpenAI, including the Amodei siblings, Anthropic has quickly established itself as a leader in the field of safe AI development. The company has received substantial backing, including investments from major tech giants like Amazon and Google. As of September 2025, Anthropic's valuation surpassed $183 billion, making it one of the most valuable private companies in the world.

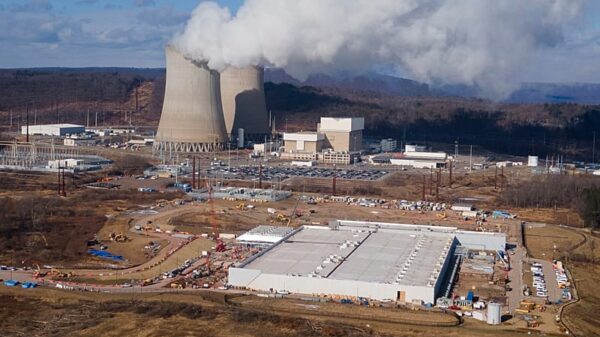

To bolster its growth, Anthropic announced a considerable investment in U.S. AI infrastructure, amounting to $50 billion. This initiative aims to enhance data center capabilities in Texas and New York, reducing dependence on cloud service providers and creating numerous job opportunities.

Looking ahead, Anthropic plans to significantly expand its workforce internationally in 2025, reflecting the increasing demand for Claude outside the U.S. This includes a major increase in its applied AI team, as the model gains traction in enterprise environments. Financially, the company is on the path to profitability by 2028, with expectations of achieving higher gross margins compared to competitors.

Anthropic's ongoing research continues to explore advanced AI capabilities, including models that demonstrate early signs of introspection. The company has also committed to maintaining deployed models after they are retired, with the aim of improving reliability for users. As the landscape of AI evolves, the need for stringent regulations and safety measures becomes increasingly apparent, especially in light of incidents such as this one.
