In a significant lapse highlighting the disconnect between federal cybersecurity policy and practice, Madhu Gottumukkala, a senior official at the Cybersecurity and Infrastructure Security Agency (CISA), uploaded several documents labeled “for official use only” to OpenAI’s public ChatGPT platform. This breach, reported by CSO Online, raises critical concerns about the enforcement of security protocols within government agencies, especially as they increasingly seek to integrate generative AI technologies while navigating the associated risks.

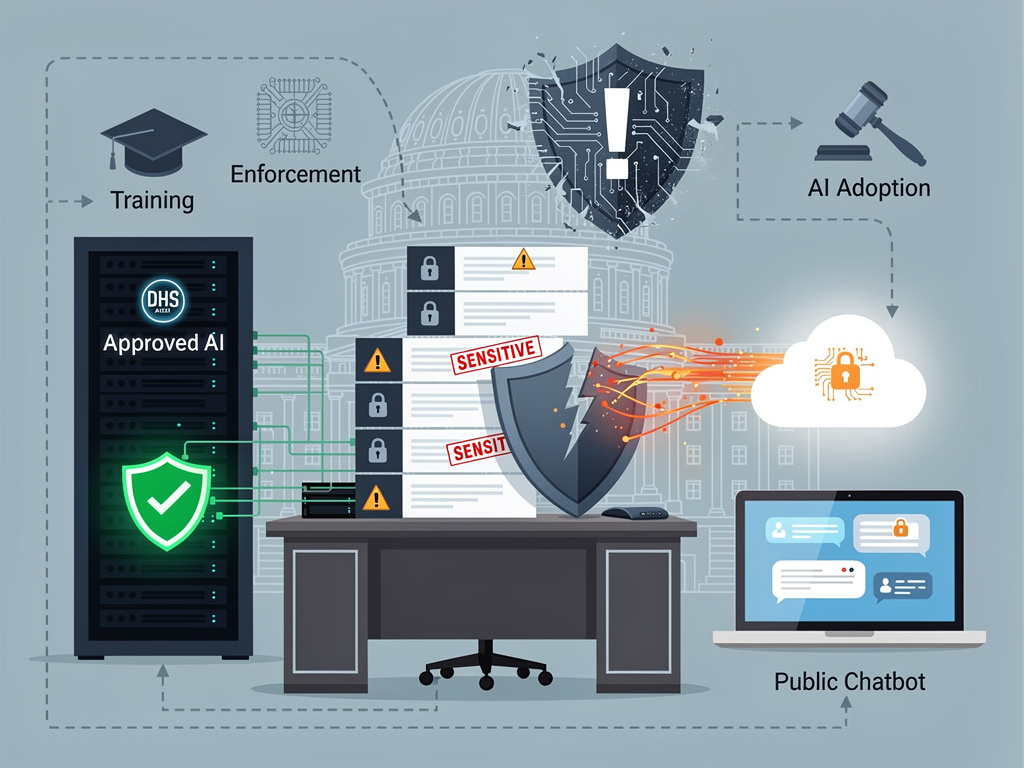

The documents pertained to government contracting processes and were uploaded to the consumer version of ChatGPT, which can use submitted data to improve its models. This raises the alarming possibility that sensitive government information could be inadvertently included in OpenAI’s training datasets, accessible to the company’s employees, and potentially revealed in responses to other users. The incident underlines a troubling gap between established guidelines and individual adherence, especially given that the Department of Homeland Security (DHS) has designated specific AI platforms to prevent such data exposures.

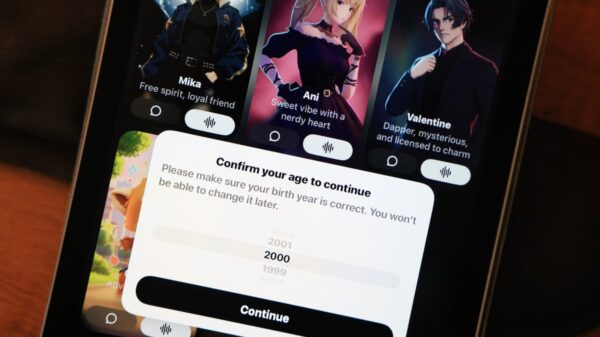

When users submit documents to the free version of ChatGPT, OpenAI may retain and use them for model training unless the user explicitly opts out, an option many government employees may not be aware of. Unlike enterprise versions of ChatGPT, which offer data isolation guarantees, the consumer platform operates under terms that give OpenAI broad rights to use input data. This poses a serious issue for government documents marked “for official use only,” creating a chain-of-custody problem that contravenes federal information handling protocols intended to restrict access to authorized personnel.

The technical intricacies of modern AI data flows complicate matters further. Once information enters a large language model’s training pipeline, extracting or ensuring complete removal of that information can be nearly impossible. Security researchers have shown that large language models can sometimes reveal training data, though the chances of this vary considerably depending on how the data was incorporated and the model’s architecture.

The irony of this incident is stark. CISA, tasked with protecting federal networks and critical infrastructure from digital threats, regularly provides guidance to both government and the private sector on secure AI adoption and data handling. Gottumukkala’s actions serve as a case study in the shadow IT phenomenon CISA has long warned about, undermining the agency’s credibility at a time when federal AI governance frameworks are still being defined.

CISA has been instrumental in formulating AI security guidelines, publishing frameworks for secure AI deployment and cautioning organizations about the dangers of using unauthorized cloud services. The agency’s guidance stresses the importance of data classification, tool usage approval, and maintaining control over sensitive information. By disregarding these protocols, senior officials send a troubling message regarding the enforceability of CISA’s own standards, raising questions about whether adequate training and controls exist to prevent such violations.
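As a rough illustration of how data classification and tool approval could be combined into a single check before a document leaves an agency network, consider the minimal Python sketch below. It is not drawn from any CISA or DHS tooling: the function names, the list of markings, and the hostname `approved-ai.example.gov` are all hypothetical placeholders, and a real control would rely on far more than a text search for markings.

```python
import re

# Hypothetical allowlist of approved AI endpoints; the hostname is a placeholder.
APPROVED_AI_HOSTS = {"approved-ai.example.gov"}

# Assumed set of markings that indicate controlled but unclassified material.
MARKING_PATTERN = re.compile(
    r"\b(for official use only|FOUO|controlled unclassified information|CUI)\b",
    re.IGNORECASE,
)


def find_markings(text: str) -> list[str]:
    """Return the distinct control markings found in the text, upper-cased."""
    return sorted({m.group(1).upper() for m in MARKING_PATTERN.finditer(text)})


def may_upload(text: str, destination_host: str) -> bool:
    """Allow marked documents only to approved hosts; unmarked text passes."""
    if not find_markings(text):
        return True
    return destination_host.lower() in APPROVED_AI_HOSTS
```

Under these assumptions, `may_upload("Draft - FOUO", "chatgpt.com")` would be rejected, while the same text sent to the placeholder approved host would pass. A check like this only catches documents that carry an explicit marking, which is one reason training and enforcement still matter.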

This incident is emblematic of broader challenges faced by federal agencies as they strive to leverage AI capabilities while maintaining security measures. Employees increasingly feel pressured to improve efficiency and utilize advanced tools, yet the approved technology often lags behind commercial options. This dynamic fosters the use of unapproved tools, particularly when sanctioned alternatives appear cumbersome or less effective. The issue of shadow IT has been a persistent concern for federal agencies, and the rise of generative AI amplifies both the temptation and the potential risks involved.

Some federal agencies have opted to ban generative AI tools altogether, while others have established agreements with providers like OpenAI, Anthropic, and Google that include enhanced security provisions. The Department of Homeland Security has authorized specific AI platforms for employee use, rendering Gottumukkala’s choice to utilize the public ChatGPT platform particularly difficult to rationalize. This incident suggests that even with approved alternatives available, awareness, training, and enforcement mechanisms may be insufficient to ensure compliance.

The nature of the uploaded documents, contracting materials marked “for official use only” (FOUO), adds another layer of complexity. While these documents are not classified at the Secret or Top Secret level, the FOUO designation denotes information that could disadvantage the government if disclosed. Such documents often contain pricing strategies, vendor evaluation criteria, technical specifications, and procurement timelines, which, if exposed, could give competitors unfair advantages or highlight vulnerabilities in government acquisition processes. The potential for exposure through an AI platform raises immediate procurement risks.

OpenAI has introduced enterprise versions of ChatGPT specifically designed to mitigate the data security and privacy concerns associated with the consumer version. ChatGPT Enterprise and ChatGPT Team feature data encryption, administrative controls, and assurances that customer data will not be used for model training. These enterprise solutions have gained traction among numerous Fortune 500 companies and increasingly among government agencies wanting to leverage AI capabilities securely. The existence of these alternatives makes the use of the consumer platform for government tasks all the more problematic.

This incident comes at a crucial moment for federal AI policy. The Biden administration has issued executive orders on AI safety and security, and agencies are exploring AI applications across various government functions. When senior officials at the agency responsible for cybersecurity fail to adhere to basic data handling protocols, it provides fodder for critics of AI and complicates efforts to develop balanced policies that foster innovation while managing risks. The challenge for policymakers is to glean insights from this incident without overreacting in ways that hinder the beneficial adoption of AI technologies.

As of the latest reports, the repercussions for Gottumukkala and any broader organizational response from CISA or DHS are unclear. The handling of such incidents conveys powerful messages regarding institutional priorities and the seriousness with which security protocols are taken. A purely punitive response may discourage transparency, whereas inadequate accountability might suggest minimal repercussions for violations. Effective strategies will likely blend individual accountability with systemic improvements addressing the root causes of such occurrences, promoting a culture that empowers employees to seek guidance on appropriate tool usage in a rapidly evolving technological landscape.
