Corporate fraud is expected to surge in 2026, driven largely by the rapid evolution and misuse of artificial intelligence. A particularly alarming threat is the anticipated rise of deepfake-enabled cyberattacks, which give cybercriminals a powerful tool for sophisticated social engineering campaigns. As AI tools become more accessible and their output more realistic, malicious actors are using them to deceive organizations and circumvent traditional security measures.

According to a recent study by fraud prevention firm Nametag, titled “The 2026 Workforce Impersonation Report,” deepfake technology is projected to play a pivotal role in future cybercrime. The report underscores how generative AI platforms such as ChatGPT, when paired with advanced video generation tools like Sora 2, can produce highly convincing audio and video content. Such deepfakes can impersonate CEOs, CTOs, CIOs, and other C-suite executives with alarming accuracy.

Impersonation attacks pose a unique risk as they exploit inherent trust within corporate structures. A seemingly legitimate video call or voice message from a company executive can easily persuade employees to authorize fraudulent wire transfers, share sensitive information, or grant access to secure systems. Unlike traditional phishing emails, deepfake-based social engineering attacks are significantly more challenging to detect, as they closely mimic genuine human behavior, tone, and visual cues.

Nametag researchers caution that the coming months may bring more Deepfake-as-a-Service (DaaS) offerings to underground markets. These services would allow even novice cybercriminals to purchase ready-made deepfake tools and orchestrate complex fraud schemes with minimal technical expertise. Consequently, attacks such as CEO fraud, business email compromise, and financial manipulation could become both more common and more successful.

The financial ramifications of these attacks could be catastrophic. With realistic deepfake impersonations, hackers may siphon millions of dollars from organizations within hours. Beyond the immediate monetary losses, companies face the potential for reputational damage, legal repercussions, and a long-term erosion of trust among employees and stakeholders.

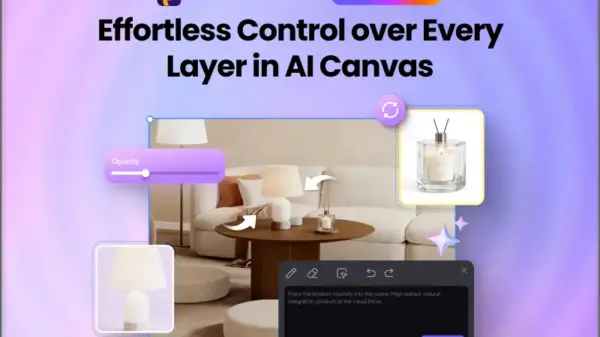

As deepfake technology continues to advance, experts are emphasizing the urgent need for organizations to bolster their identity verification processes, educate employees about emerging threats, and implement AI-based detection tools. Without proactive defenses, corporate environments risk becoming increasingly susceptible to this new era of AI-driven fraud.

Trust underpins how organizations operate, and deepfakes target it directly. Companies must stay ahead of these threats and be equipped to counter the tactics cybercriminals are likely to deploy in the coming years. Deepfake technology demands not only vigilance but also a reevaluation of corporate security protocols.
