In a significant escalation of tactics, North Korean state-linked hackers have launched a social engineering campaign that uses AI-generated video to distribute malware targeting both macOS and Windows systems. Cybersecurity researchers attribute the campaign to a threat group with ties to Pyongyang, marking a troubling evolution in how nation-state actors use generative AI tools in cyberattacks on individuals and organizations worldwide.

As detailed by cybersecurity analysts, the campaign uses convincing AI-generated video presentations, often imitating legitimate corporate communications or investment briefings, to lure targets into downloading malicious payloads. This is a noticeable shift from traditional phishing, which relies primarily on text-based emails and fraudulent documents, and it indicates that adversaries are quickly integrating the latest AI capabilities into their offensive operations.

According to a report by TechRadar, this campaign is associated with North Korean threat actors known for conducting financially motivated cyber operations to fund the regime’s weapons programs and evade international sanctions. What distinguishes this operation is its use of AI-generated video, synthetic media that appears to show real individuals delivering presentations, as a means of establishing trust with potential victims.

The attackers craft scenarios tailored to specific targets, including cryptocurrency investors, software developers, and professionals in the financial technology sector. Victims are approached via social media platforms, professional networking sites, or messaging applications and directed to view what seems to be a legitimate video briefing. The video content is generated using accessible AI tools and is polished enough to withstand casual scrutiny, creating an authenticity that traditional phishing emails often lack.

One of the campaign’s most alarming features is its cross-platform capability. The threat actors have engineered malware payloads capable of infecting both macOS and Windows machines, so a target’s choice of operating system offers little protection. This dual-platform approach reflects a broader trend among sophisticated threat groups, which recognize the growing share of Apple devices in corporate and developer environments.

For Windows users, the malware typically masquerades as a software installer or a document viewer necessary for accessing the purported video content. Meanwhile, on macOS, attackers have utilized similar deceptive strategies, packaging their payloads in ways that circumvent Apple’s Gatekeeper security features or exploit users’ willingness to override security warnings. Once activated, the malware can pilfer credentials, cryptocurrency wallet keys, browser session data, and other sensitive information, all of which can be monetized or exploited for further intrusions.
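One simple defensive check against the masquerading described above is to compare a file's claimed type with its leading "magic" bytes. The sketch below is illustrative only and is not drawn from the reported campaign: the function names and the extension list are assumptions, and it only recognizes raw Windows PE and Mach-O binaries. Payloads wrapped in archives, disk images, installer packages, or scripts would not be caught by it.

```python
from typing import Optional

# Well-known leading bytes of native executables.
EXECUTABLE_MAGIC = {
    b"MZ": "Windows PE executable",
    b"\xcf\xfa\xed\xfe": "Mach-O 64-bit binary",
    b"\xce\xfa\xed\xfe": "Mach-O 32-bit binary",
    b"\xca\xfe\xba\xbe": "Mach-O universal binary (also used by Java class files)",
}

# Hypothetical list of extensions a victim might expect for a "video briefing".
MEDIA_OR_DOC_EXTENSIONS = {".mp4", ".mov", ".pdf", ".docx", ".pptx"}


def classify_header(header: bytes) -> Optional[str]:
    """Return a description if the header looks like a native executable."""
    for magic, description in EXECUTABLE_MAGIC.items():
        if header.startswith(magic):
            return description
    return None


def looks_disguised(filename: str, header: bytes) -> bool:
    """True when a media/document extension hides an executable header."""
    name = filename.lower()
    claims_media = any(name.endswith(ext) for ext in MEDIA_OR_DOC_EXTENSIONS)
    return claims_media and classify_header(header) is not None
```

In practice the header would come from reading the first few bytes of the downloaded file. A check like this supplements, and does not replace, operating-system protections such as Gatekeeper.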

North Korea’s cyber operations have long been associated with the Lazarus Group and its various sub-clusters, which have been blamed for some of the most high-profile cyberattacks on record, such as the 2014 Sony Pictures hack and the 2017 WannaCry ransomware outbreak. The incorporation of AI-generated video content marks the latest chapter in a constantly evolving playbook that adapts to new technologies and methods.

Researchers have noted that North Korean hackers have been early adopters of social engineering tactics aimed at the cryptocurrency and Web3 sectors. Previous campaigns have involved elaborate fake job offerings and counterfeit venture capital firms. The addition of AI-generated video to this arsenal suggests the regime’s cyber units are actively investing in generative AI capabilities and exploring how to deploy them effectively.

The use of synthetic video as a social engineering tool is particularly dangerous because it exploits a basic human tendency to trust visual and auditory information more than text alone. An expertly crafted AI-generated video featuring a seemingly real person delivering a business pitch can create a powerful sense of legitimacy that overrides the skepticism people typically apply to suspicious emails or messages.

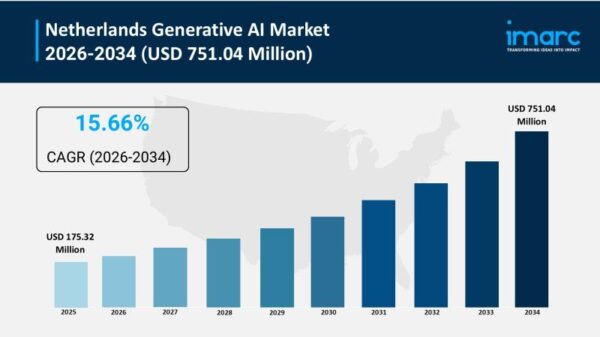

As generative AI tools have become increasingly accessible, the barrier to creating convincing synthetic media has lowered dramatically. Tools that once required significant technical expertise are now available as consumer-grade applications, enabling even modestly resourced threat actors to produce high-quality video content. For a state-sponsored group with dedicated resources, the output quality can be exceptionally high.

This campaign does not operate in isolation. Cybersecurity experts have been warning about the rising use of AI in offensive operations across the industry. Reports have documented instances of North Korean operatives using AI-generated identities, complete with fabricated LinkedIn profiles and synthetic resumes, to secure remote employment at Western technology firms. These infiltration campaigns, highlighted by FBI warnings and Department of Justice indictments, serve dual purposes: generating revenue for the regime and providing insider access to corporate networks. The deployment of AI-generated video in malware schemes is a natural progression of these tactics.

For organizations and individuals, combating this threat requires a multi-layered strategy combining technical defenses with increased vigilance. Security experts advise treating unsolicited video content with the same suspicion typically reserved for unexpected email attachments or links. It is crucial to verify, through independent channels, the identity of anyone requesting software downloads or presenting investment opportunities, rather than relying on the video’s content.

On the technical side, organizations should employ endpoint detection and response (EDR) solutions across all platforms, including macOS, which historically has received less security attention than Windows. Keeping operating systems and security tools updated, enforcing application allowlisting, and implementing robust multi-factor authentication can help mitigate the risk of credential theft, even if a user initially falls victim to a social engineering attack.
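To make the idea of application allowlisting concrete, here is a minimal sketch of a hash-based check: a file is permitted only if its SHA-256 digest appears in a list of vetted software. The allowlist contents and function names are hypothetical placeholders. Real deployments typically rely on operating-system or EDR policy enforcement, not a standalone script.

```python
import hashlib

# Hypothetical allowlist: SHA-256 digests of installers the organization has vetted.
# The entry below is a placeholder computed from sample bytes, not a real installer.
APPROVED_SHA256 = {
    hashlib.sha256(b"example vetted installer bytes").hexdigest(),
}


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large installers need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_allowlisted(path: str, approved=APPROVED_SHA256) -> bool:
    """Return True only when the file's digest is on the approved list."""
    return sha256_of_file(path) in approved
```

Under this default-deny design, a "document viewer" delivered through a chat message would be blocked simply because its digest is unknown, regardless of how convincing the accompanying video was.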

The campaign also raises pressing questions for the technology industry and policymakers about the dual-use nature of generative AI tools. While these technologies offer tremendous benefits for legitimate applications, their potential for misuse in cyberattacks and fraud is increasingly apparent. The cybersecurity community is advocating for heightened investment in deepfake detection technologies, improved platform-level safeguards against synthetic media abuse, and international cooperation to hold state-sponsored threat actors accountable.

This latest campaign serves as a stark reminder that nation-state cyber threats are not static; they evolve alongside technological advancements. North Korea’s willingness to weaponize AI-generated video for malware delivery underscores the reality that the most dangerous cyber threats often exploit human psychology rather than software vulnerabilities. As generative AI technology advances, distinguishing between authentic and synthetic content will grow more challenging, making vigilance and skepticism paramount for anyone operating in the digital landscape.

Cybersecurity firms are now urging increased caution for those in the cryptocurrency, fintech, and software development sectors, the primary targets of this campaign. The message is clear: if a video appears overly polished, an opportunity seems too good to be true, or a request to download software arrives at a suspiciously convenient moment, it may be the product of a North Korean AI lab rather than a legitimate business contact.
