Sneaking into lectures, infiltrating group chats, and impersonating professors are some of the desperate tactics employed by contract cheating companies as they seek to regain lost market share amid the rise of generative AI. Universities across Australia report that traditional contract cheating—where students outsource assessments—has diminished as misuse of AI tools has surged. This uptick has led to a notable increase in the number of students caught violating academic integrity policies.

When generative AI tools emerged in 2023, many universities initially imposed strict bans on their use. These restrictions have gradually loosened, and most institutions now take a “two-lane” approach that allows AI in certain contexts while prohibiting it in others. The model, which began at the University of Sydney, has since been adopted by several other institutions.

Professor Phillip Dawson from Deakin University’s Centre for Research in Assessment and Digital Learning noted that there is still a niche market for bespoke contract cheating, which may involve a more personal relationship with the provider of academic materials. He stated, “There are still people who outsource the entirety of the degree. In courses with a face-to-face component, you need a warm body in the room.”

Kane Murdoch, head of complaints, appeals, and misconduct at Macquarie University, emphasized that institutions must adapt to the evolving academic landscape, warning that without significant changes to assessment methods, cheating will become increasingly prevalent, leading to a decline in genuine learning experiences.

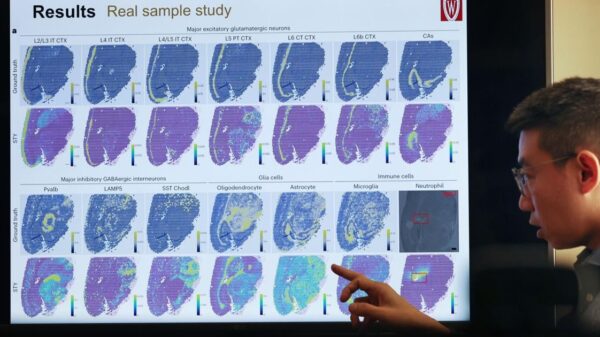

A report from the University of New South Wales (UNSW) revealed a 219 percent increase in “unauthorized use” of generative AI in 2024 compared to the previous year; the university recorded no such reports in 2022. Over the same period, verified instances of contract cheating at UNSW fell from 232 in 2023 to 132 in 2024, a drop of about 43 percent, indicating a shift from traditional cheating methods to AI misuse.

The financial performance of **Chegg**, a study help platform, mirrors these trends. After reaching a pandemic peak of $113.51 per share, Chegg’s stock has plummeted to just 69 cents. The company laid off 45 percent of its workforce late last year and has initiated legal action against **Google**, alleging that AI summaries on Google’s homepage have negatively impacted its website traffic. Professor Dawson remarked, “There’s a market signal in the share price,” pointing out that Chegg’s decline correlates both with institutions shifting from online learning back to face-to-face teaching and with the rise of AI technologies.

Murdoch bluntly declared that “Chegg is dead,” highlighting the company’s struggles in the current educational climate. Compounding its challenges, Chegg faces a lawsuit from the **Tertiary Education Quality and Standards Agency (TEQSA)** for allegedly breaching federal anti-cheating laws. Court documents filed in September detail TEQSA’s claims that Chegg and its subsidiary, Chegg India, violated laws against academic cheating services through Chegg’s “Expert Q&A service.”

TEQSA asserts that it has identified five instances of Australian university assignments in various fields—including programming, water systems, and quantum mechanics—being submitted to Chegg’s platform, with responses from its experts appearing within days. The agency’s filings suggest that Chegg’s management either knew or should have known that these submissions were likely student assignments.

In response to the allegations, Chegg representatives have denied providing any form of academic cheating service. The company argues that TEQSA’s claims are based on a limited selection of examples that do not accurately represent its commitment to academic integrity. A spokesperson stated, “The lawsuit brought by TEQSA is based on an outdated academic integrity policy, which was formulated long before the rise of AI and its profound impact on education and technology today.”

As educational institutions continue to navigate the complexities introduced by AI technologies, the landscape of academic integrity is poised for further transformation. The ongoing discourse surrounding AI’s role in education will likely shape future policies and approaches to assessment, raising critical questions about the balance between innovation and integrity in higher learning.
