Google and Google DeepMind have begun rolling out Project Genie, an AI world model that lets users create and navigate interactive environments in real time. The experimental system, previously limited to private testing, is now available to Google AI Ultra subscribers in the U.S., marking a shift from internal research to wider experimentation.

The launch underscores Google’s increasing emphasis on world models as a foundational element for advanced AI systems, with potential implications for sectors such as education and simulation-based training. By letting users step into scenarios rather than merely read about or observe them, Project Genie could change how people learn.

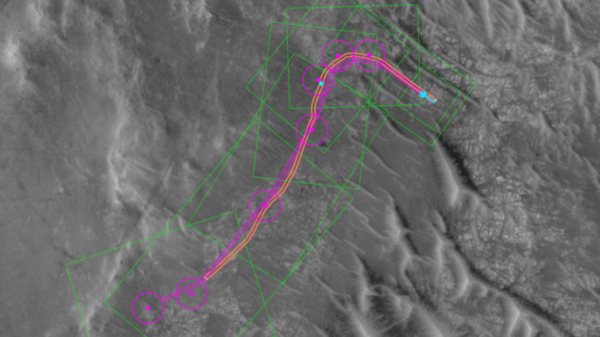

Built upon Genie 3, a general-purpose world model first introduced by Google DeepMind in August, Project Genie distinguishes itself from traditional static 3D environments by dynamically generating worlds as users explore them. Neil Hoyne, Chief Strategist at Google, elaborated on LinkedIn, stating that Project Genie creates “an interactive environment you can actually move through,” emphasizing that it is not merely a static 3D render but a real-time construction of the user’s surroundings.

Hoyne noted that users can control a character within the generated environments, allowing them to “walk, fly, drive, whatever makes sense for the world you built.” This functionality positions the tool as a means to conceptualize ideas spatially rather than abstractly, potentially enhancing creative processes across various fields.

Hoyne acknowledged that Project Genie is still at an early stage, saying its primary value lies in experimentation rather than polish. He suggested it could be particularly useful for animation, design, writing, and education, because it lets users build scenarios that can be experienced firsthand. He remarked, “People working in training or education might find this useful for creating scenarios people can experience rather than just read about.”

Hoyne was candid about the system’s shortcomings, admitting, “The physics aren’t always going to make sense,” and warning that the environment will sometimes fail to behave as users expect. He also cautioned that controlling characters can be difficult. Nevertheless, he maintained that early-stage tools can still be useful, asserting, “The most useful tools aren’t always the polished ones. Sometimes it’s the weird, experimental thing that gives you just enough to see your idea differently.”

Hoyne framed the decision to grant broader access to Project Genie as practical, urging potential users not to hesitate in exploring the tool. He advised, “Don’t overthink it. If you have access, spend an afternoon playing with it,” further stating, “Sitting on the sidelines wondering isn’t going to tell you anything in your AI pursuits.”

As Project Genie moves from the lab into the hands of users, it could open new approaches to interactive design and experiential learning. If tools like it mature, AI-generated environments may become a regular part of education and training, letting learners engage with material in ways that go beyond reading and observation.
