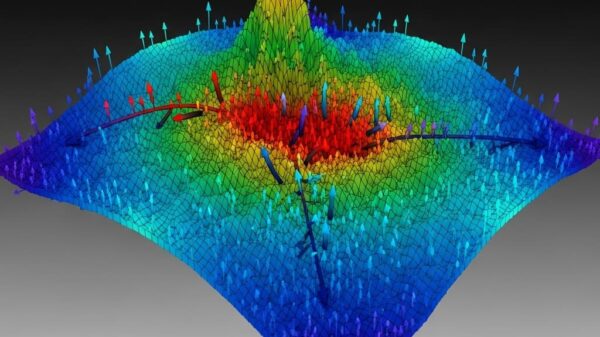

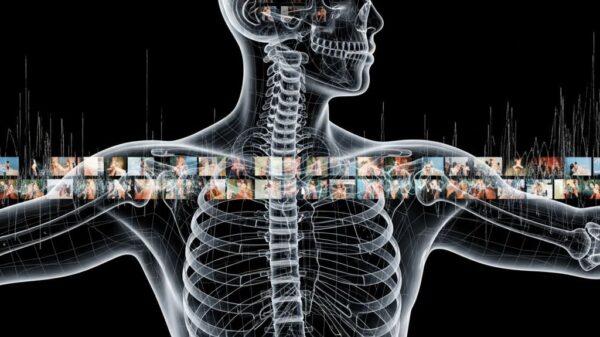

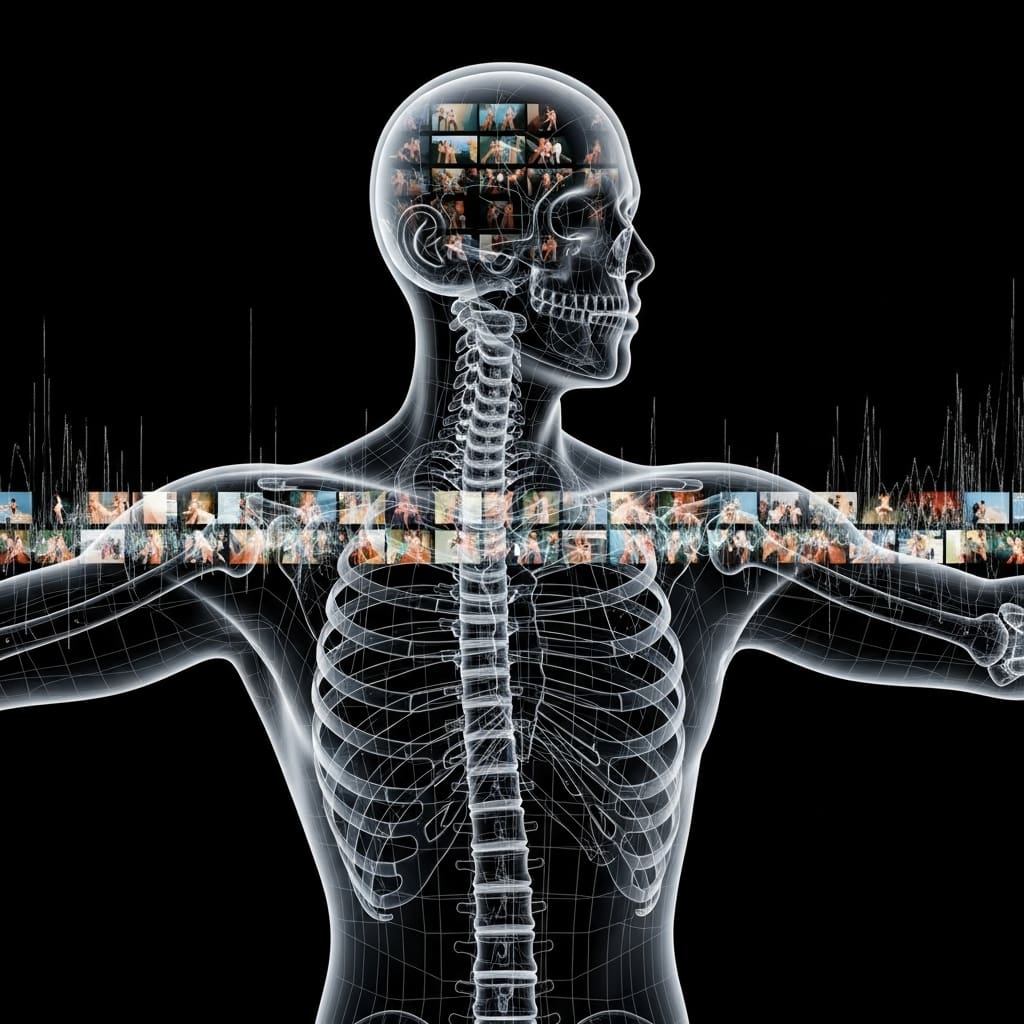

Researchers at the Hong Kong University of Science and Technology (HKUST) and South China University of Technology (SCUT) have unveiled an advance in computer vision aimed at generating realistic human motion and video. The team, led by Chengfeng Zhao and Jiazhi Shu, has developed CoMoVi, a co-generative framework that produces 3D human motions and the corresponding 2D videos together within a single, unified denoising process. The approach builds on pre-trained video diffusion models (VDMs) and introduces a new representation of 2D human motion, which the authors present as a step toward modeling complex human actions.

The work starts from the observation that 3D motion generation and 2D video generation are intrinsically linked: 3D motions act as structural priors that keep generated videos realistic and consistent, while pre-trained VDMs contribute strong generalization capabilities. CoMoVi aims to bridge these two usually isolated domains by generating 3D motion and video in sync, which the authors report improves the realism and coherence of both outputs.
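To make "a unified denoising process" concrete, here is a minimal sketch, not CoMoVi's code, of how two latents can be denoised jointly: at every step a single (here, toy) network call predicts an update for both the motion latent and the video latent, each conditioned on the other, instead of finishing the motion before starting the video as a cascaded pipeline would. The function names, the latent shapes, the flow-matching-style Euler update, and the toy velocity function are all assumptions for illustration.

```python
import numpy as np


def toy_joint_velocity(x_motion, x_video, t):
    """Hypothetical stand-in for a dual-branch denoiser.

    Each branch's prediction depends on BOTH latents: the motion latent is
    pulled toward a value derived from the video latent and vice versa.
    A real model would be a learned network; `t` is unused by this toy.
    """
    v_motion = x_motion - 0.5 * x_video.mean()
    v_video = x_video - 0.5 * x_motion.mean()
    return v_motion, v_video


def co_generate(motion_shape, video_shape, num_steps=50, seed=0):
    """Jointly integrate two latents from noise (t=1) to data (t=0)."""
    rng = np.random.default_rng(seed)
    # Both modalities start from pure Gaussian noise.
    x_motion = rng.standard_normal(motion_shape)
    x_video = rng.standard_normal(video_shape)
    timesteps = np.linspace(1.0, 0.0, num_steps + 1)
    for t, t_next in zip(timesteps[:-1], timesteps[1:]):
        # One shared call per step predicts updates for both latents,
        # computed from the same pre-update state.
        v_motion, v_video = toy_joint_velocity(x_motion, x_video, t)
        dt = t_next - t  # negative, since we integrate from t=1 down to t=0
        x_motion = x_motion + dt * v_motion
        x_video = x_video + dt * v_video
    return x_motion, x_video
```

For example, `co_generate((16, 66), (16, 4, 8, 8))` would return a 16-frame motion latent (66 = 22 joints × 3 coordinates, an assumed layout) alongside a small 16-frame video latent. The design point is only that both latents advance through the same timesteps together, so each can shape the other throughout sampling.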

A key technical contribution is a 2D human motion representation that encodes 3D motion information in pixel space, so that motion can be handled like video frames and benefit from the temporal coherence and denoising capabilities of modern video models. To implement the framework, the team built a dual-branch diffusion model by extending Wan2.2-I2V-5B. The two branches exchange information continuously through mutual feature interaction and 3D-2D cross-attention, which yields more natural 3D motions alongside realistic video.
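The article does not describe the layer internals, so the following is only a sketch of what "mutual feature interaction with 3D-2D cross-attention" can look like in general: motion-branch tokens attend to video-branch tokens and vice versa, and each result is added back residually. It assumes single-head scaled dot-product attention and randomly initialized projections; the names `cross_attention`, `mutual_interaction`, and `init_params` are hypothetical, and a production model would add multi-head attention, normalization, and learned weights.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: queries attend to context."""
    q = queries @ w_q
    k = context @ w_k
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def mutual_interaction(motion_tokens, video_tokens, params):
    """One hypothetical exchange step between the motion and video branches.

    Both directions read from the pre-exchange features, and each update is
    added residually so every branch keeps its own features.
    """
    motion_update = cross_attention(
        motion_tokens, video_tokens, *params["motion_from_video"])
    video_update = cross_attention(
        video_tokens, motion_tokens, *params["video_from_motion"])
    return motion_tokens + motion_update, video_tokens + video_update


def init_params(dim, rng):
    """Random (untrained) query/key/value projections for both directions."""
    def proj():
        return rng.standard_normal((dim, dim)) / np.sqrt(dim)
    return {
        "motion_from_video": (proj(), proj(), proj()),
        "video_from_motion": (proj(), proj(), proj()),
    }
```

As a usage example, with `dim = 32`, one motion token per frame for 16 frames (shape `(16, 32)`) and 64 video tokens per frame (shape `(1024, 32)`), `mutual_interaction` returns updated arrays of the same shapes. Running both directions in the same step is what distinguishes this from a cascade, where information would flow only from motion to video.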

Central to this research is the CoMoVi Dataset, a large-scale collection of approximately 50,000 high-resolution real-world human videos, each annotated with text descriptions and motion labels. It covers a diverse range of challenging human actions and serves as the basis for training and evaluating the framework. In experiments that also draw on the Motion-X++ dataset and the VBench benchmark, CoMoVi consistently outperformed existing state-of-the-art models on both 3D motion generation and video generation.

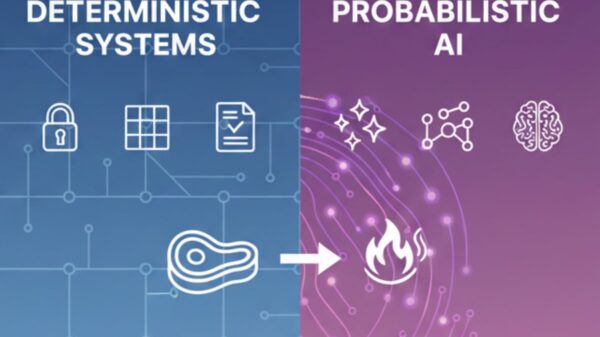

The experiments indicate that CoMoVi's co-generative approach overcomes limitations of traditional cascaded methods, which treat motion generation and video generation as separate, sequential tasks. Integrating the two processes improves generalization in motion generation and gives video generation better structural guidance. Potential applications include character animation, virtual and augmented reality, and gaming, where realistic human movement in dynamic environments is essential.

In summary, CoMoVi is a notable step for computer vision, particularly in the co-generation of 3D human motion and 2D video. By handling both tasks in a single dual-branch video diffusion model, the work opens new avenues for more realistic animated and simulated human interactions, with growing implications for industries such as entertainment and virtual experiences as applications of the technology expand.
