The Indian government has amended the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, requiring digital platforms to clearly label AI-generated content, including deepfake videos and altered visuals. The amendments, issued by the Ministry of Electronics and Information Technology (MeitY), aim to improve transparency in digital media and take effect on **February 20, 2026**.

According to a Gazette notification dated **February 10**, social media platforms must remove flagged AI-generated content within three hours of receiving a complaint from a competent authority or a court order. The amended rules also shorten the grievance resolution timeframe from **15 days to 7 days**. For urgent complaints, platforms must respond within **36 hours**, down from **72 hours**. Intermediaries must also act on specified content removal complaints within **2 hours**, down from **24 hours**.
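To make the reported timelines concrete, here is a minimal Python sketch of how a platform might compute its response deadline for each kind of complaint. The durations come from the figures above. The category names and the `compliance_deadline` and `is_overdue` helpers are hypothetical and are not taken from the notification.

```python
from datetime import datetime, timedelta, timezone

# Response windows as reported in this article.
# The category keys are hypothetical labels chosen for illustration.
RESPONSE_WINDOWS = {
    "authority_or_court_takedown": timedelta(hours=3),
    "general_grievance": timedelta(days=7),
    "urgent_complaint": timedelta(hours=36),
    "specified_content_removal": timedelta(hours=2),
}


def compliance_deadline(category: str, received_at: datetime) -> datetime:
    """Return the latest time by which the platform must act on a complaint."""
    return received_at + RESPONSE_WINDOWS[category]


def is_overdue(category: str, received_at: datetime, now: datetime) -> bool:
    """Return True if the response window for this complaint has already passed."""
    return now > compliance_deadline(category, received_at)


if __name__ == "__main__":
    received = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    # A takedown order received at 09:00 UTC must be handled by 12:00 UTC.
    print(compliance_deadline("authority_or_court_takedown", received))
```

Using timezone-aware timestamps avoids ambiguity about when a complaint was received, which matters when the window is only two or three hours long.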

The notification specifies that a “significant social media intermediary,” which enables the display, uploading, or publication of information, must require users to declare whether such information is synthetically generated before allowing its distribution. This aims to ensure that users are aware of the nature of the content they are consuming and sharing.

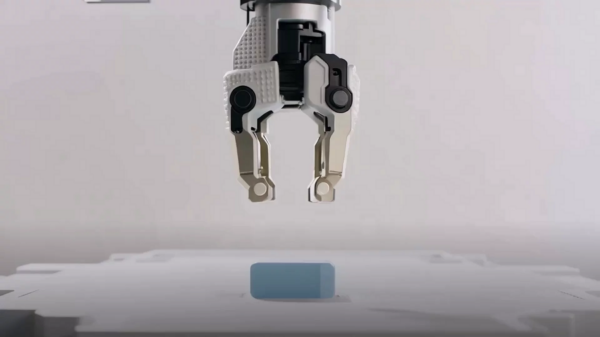

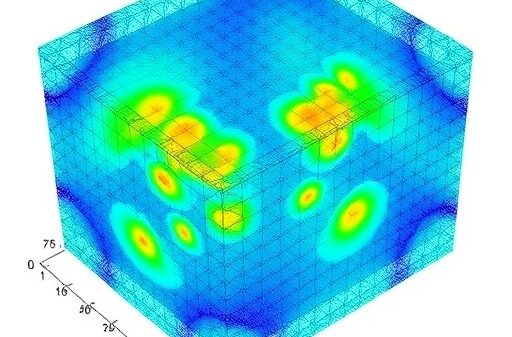

Where technically feasible, the regulations stipulate that such content must include permanent metadata or provenance tools, complete with a unique identifier, to trace the computer resources used to create or modify it. Intermediaries are strictly prohibited from allowing the removal, hiding, or alteration of these labels or metadata, reinforcing the importance of content authenticity in the digital sphere.
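As an illustration only, the following Python sketch shows one way a platform could represent such a label: a record carrying a unique identifier, a hash tying it to the content, and the user's declaration. The rules as described here do not prescribe a format, so the `SyntheticLabel` fields and the `make_label` and `label_matches` helpers are assumptions made for this example.

```python
import hashlib
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class SyntheticLabel:
    """Hypothetical provenance record; not a format defined by the rules."""
    content_id: str           # unique identifier for this piece of content
    content_sha256: str       # hash binding the label to the exact bytes
    generator: str            # computer resource used to create or modify it
    declared_synthetic: bool  # the uploader's declaration


def make_label(content: bytes, generator: str, declared_synthetic: bool) -> SyntheticLabel:
    """Build a label for the given content bytes."""
    return SyntheticLabel(
        content_id=str(uuid.uuid4()),
        content_sha256=hashlib.sha256(content).hexdigest(),
        generator=generator,
        declared_synthetic=declared_synthetic,
    )


def label_matches(content: bytes, label: SyntheticLabel) -> bool:
    """Check that the label still belongs to these exact content bytes."""
    return hashlib.sha256(content).hexdigest() == label.content_sha256


if __name__ == "__main__":
    label = make_label(b"example video bytes", "example-model", True)
    print(label_matches(b"example video bytes", label))
```

The frozen dataclass and the content hash only make tampering detectable inside one program. A production system would more likely rely on signed, standardized provenance metadata embedded in the file itself.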

The amendments define “synthetically generated information” as content that is artificially or algorithmically created, modified, or altered to appear real or authentic. This includes audio, visual, or audio-visual material that could mislead viewers into perceiving it as genuine, thereby raising ethical and legal concerns regarding misinformation.

In addition to content labeling, the Ministry’s notification requires social media companies to deploy automated tools designed to detect and prevent the circulation of illegal, sexually exploitative, or deceptive AI-generated content. This move reflects an increasing recognition of the challenges posed by rapidly evolving technologies and their potential for misuse in society.

As digital platforms navigate these new regulations, the onus will increasingly be on them to develop robust mechanisms for compliance, including investment in advanced content moderation technologies. The amendments mark a significant step in India’s regulatory landscape, as the government seeks to balance innovation with the need for public safety and ethical standards in the digital world.

Looking ahead, the implementation of these regulations may have broader implications for the global discourse on digital ethics and AI governance. As countries grapple with similar challenges, India’s proactive stance could serve as a model for other nations aiming to address the complexities of AI-generated content in the age of misinformation.
