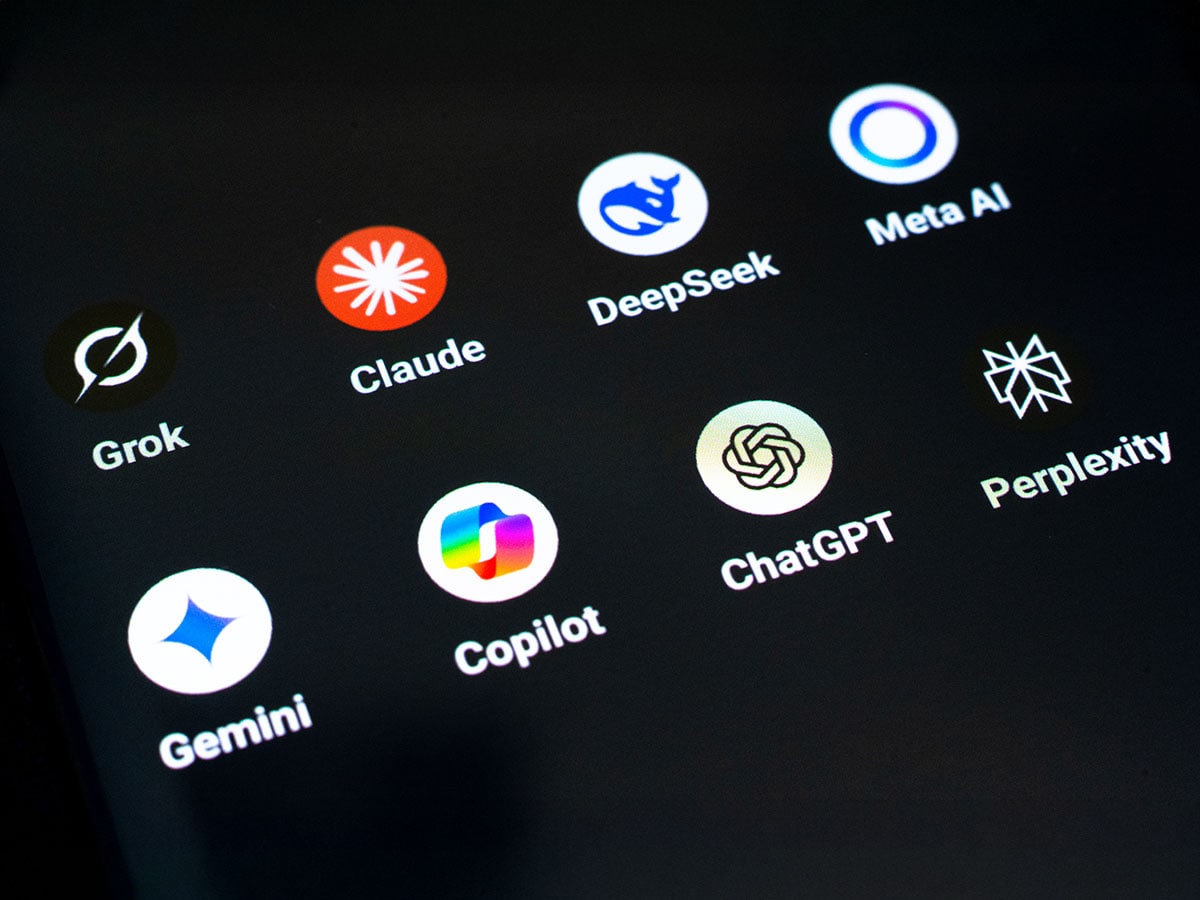

Three years after the launch of ChatGPT, competition among large language models (LLMs) has evolved from a race for user numbers into a complex contest over cost, trust, and control of the emerging AI stack. The leading players are no longer focused simply on building faster or larger models. Instead, they are integrating LLMs into full-scale platforms that span operating systems, productivity software, search engines, and developer tools. The goal is to lock users into these platforms through their everyday workflows, and the resulting market increasingly resembles an operating-system war for a new computing era.

From OpenAI’s ChatGPT to Anthropic’s Claude, the focus has shifted from capturing user attention to securing long-term engagement, turning habitual use into subscription revenue. In a newly published “constitution,” Anthropic describes the moral status of Claude as “deeply uncertain.” The company does not claim that Claude is conscious, but it acknowledges the need to design with that uncertainty in mind. This stance stands out in an industry where many organizations tend to downplay such questions.

Anthropic’s emphasis on AI safety and ethics has become a core differentiator, positioning Claude as more than a functional tool. According to Anushree Verma, a senior director analyst at Gartner, this commitment appeals strongly to risk-averse enterprise clients and policymakers. “Anthropic’s training methodology ensures that its models, like Claude, adhere to a set of ethical principles (such as being ‘helpful, honest, and harmless’), making them more reliable and resistant to generating harmful or biased output,” Verma adds. Gartner also reports that nearly 80 percent of Claude’s consumer usage occurs outside the U.S., with countries such as South Korea, Australia, and Singapore showing higher per-capita engagement.

This market segmentation shows how each provider is leveraging a specific advantage, whether reasoning, distribution, multimodality, openness, or governance, to drive user retention and paid usage. OpenAI retains a significant presence in consumer AI, with ChatGPT serving as the default reference point for millions of people worldwide. Its advantage lies in scale and the breadth of its ecosystem: paid users can move seamlessly between text, voice, image generation, and custom GPTs within a single interface.

Recent development at OpenAI has concentrated on reasoning-oriented models such as the o series, which excel at tasks involving mathematics, coding, and structured data. At the same time, the company has narrowed its platform focus, retiring plugins in favor of curated GPTs, and it remains heavily dependent on Microsoft for computing capacity and enterprise distribution.

Microsoft’s Copilot strategy stands out not for a cutting-edge model or a flashy interface, but for distribution and contextual integration. Embedded across Windows, Edge, Microsoft 365 apps, Teams, and GitHub, Copilot offers AI as an incremental extension for enterprises that already run on Microsoft software. Unlike standalone chatbots, Copilot draws on existing workplace documents, spreadsheets, and meetings, which makes context as important a differentiator as model quality. Behind the scenes, Microsoft relies on OpenAI models and also offers GPT-class systems through Azure OpenAI Service, keeping enterprise AI adoption within an established compliance framework.

Google’s Gemini initiative, by contrast, is built around native multimodality: its models understand and reason across text, images, audio, video, and code within a single architecture. This lets Gemini integrate deeply into Google’s core products, including Search, Gmail, Docs, YouTube, and Android, drawing on real-time data and user-permitted context at massive scale. Despite early setbacks, such as a widely criticized image-generation failure that prompted a public apology, Google’s distribution advantage remains unmatched, with Gemini running both in cloud data centers and, as on-device Nano models, on Pixel phones.

Meta has taken a different approach, releasing increasingly powerful open-weight Llama models, including the Llama 3.1 family, which scales up to 405 billion parameters. Unlike its competitors’ closed models, Llama can be downloaded, fine-tuned, and redeployed under Meta’s community license, which has boosted its adoption among startups, researchers, and enterprises that want more control without paying for API access. Meta reinforces this strategy by integrating Meta AI across Facebook, Instagram, and WhatsApp, gaining broad distribution while keeping its models openly available.

Perplexity, meanwhile, positions itself as a search tool more than a traditional chatbot. Rather than relying solely on training data, it searches the web in real time and returns concise answers with citations, so users can verify the information provided. For users who prioritize accuracy and transparency, this is a competitive edge. The Pro version extends these research capabilities, letting users analyze files alongside up-to-date web results. Perplexity is competing on trust rather than trying to be the most conversational AI.

As the AI landscape continues to evolve, ethical considerations and user-retention strategies will likely shape the next phase of LLM competition, marking a significant shift in how companies develop and deploy these technologies.
