The Malaysian Communications and Multimedia Commission (MCMC) is preparing to take legal action against X Corp. (formerly Twitter) and xAI LLC over alleged failures to put adequate user safety measures in place for their artificial intelligence (AI) tool, Grok. On January 13, the commission announced that it had appointed solicitors and that legal proceedings are imminent.

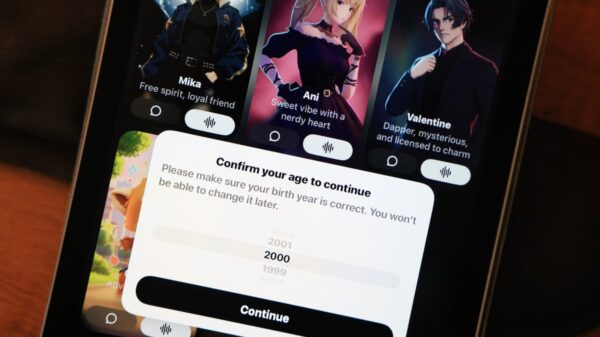

MCMC’s statement highlighted concerns that Grok has been misused to produce and distribute harmful content, in particular obscene, sexually explicit, indecent, and manipulated images of people generated without their consent. The commission emphasized that creating such content, especially content involving women and minors, raises serious legal and ethical issues. “This conduct contravenes Malaysian law and undermines the entities’ stated safety commitments,” MCMC said.

Although the content is user-generated, MCMC maintains that X Corp. and xAI LLC are responsible for it because they control Grok’s design, deployment, and risk mitigation measures. “Responsibility cannot be ignored when safety fails,” the commission stated.

MCMC had already issued warnings to both companies on January 3 and January 8, demanding the removal of the offending content. No remedial action was reported, which prompted MCMC to temporarily block Grok for Malaysian users on January 11, following a similar move by the Indonesian government.

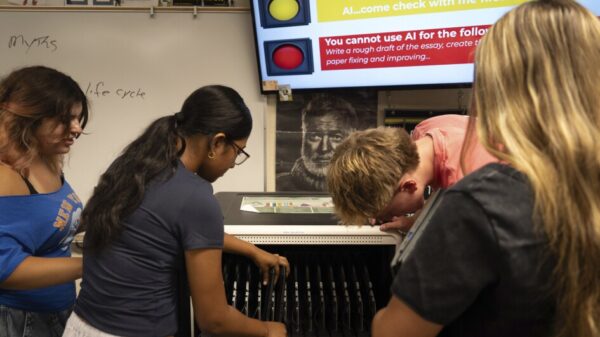

The dispute stems from the use of Grok to create sexual deepfake images. Deepfakes are AI-generated media that convincingly depict real individuals in fabricated scenarios; they are produced with deep learning techniques that swap or alter faces, manipulate expressions, or synthesize voices. Although the Grok website is currently inaccessible in Malaysia, the AI tool can still be used through X on both mobile and desktop.

Several existing Malaysian laws apply to the creation and distribution of deepfake images. Under Section 233 of the Communications and Multimedia Act 1998, creating or transmitting obscene or offensive content is prohibited, with penalties of a fine of up to RM50,000, up to one year of imprisonment, or both. More severe penalties apply under the Penal Code if such images are used for harassment or extortion: Section 292 criminalizes the distribution of obscene materials, with penalties of up to three years in prison, while Section 509, which covers insulting the modesty of a person, carries a sentence of up to five years.

The Penal Code also sets heavy penalties for extortion, including up to seven years in prison where deepfake imagery is used for blackmail. The Sexual Offences Against Children Act 2017 imposes even stiffer penalties in cases involving minors: it treats AI-generated child sexual abuse material in the same way as real images, with sentences of up to 30 years in prison along with corporal punishment.

The ongoing legal scrutiny highlights the growing responsibility technology companies bear for user safety as AI tools become more capable and more widely accessible. With concern about the misuse of AI mounting globally, the outcome of this case could set important precedents for how AI-generated content is regulated and how users are protected.
