Researchers at Peking University and the Chinese University of Hong Kong (CUHK) have developed a new benchmark for assessing the fluid intelligence of generative artificial intelligence models. The initiative, which includes contributions from researchers at the Hong Kong Polytechnic University (PolyU), introduces the Generative Fluid Intelligence Evaluation Suite (GENIUS). The suite is designed to move beyond traditional AI benchmarks, which focus primarily on knowledge recall, and instead evaluate how AI systems reason, adapt, and identify patterns in novel situations.

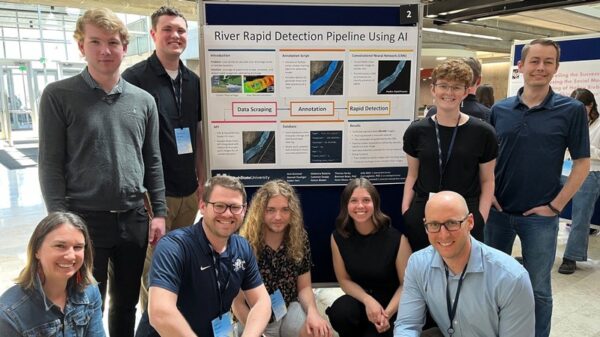

The GENIUS suite comprises 86 carefully curated samples aimed at assessing “Implicit Pattern Generation” in AI systems. The researchers, including Ruichuan An, Sihan Yang, and Ziyu Guo, emphasize that current AI capabilities fall short of true general intelligence. Their findings highlight substantial performance deficits across twelve representative models, indicating that the limitations lie not in generative capacity but rather in contextual understanding.

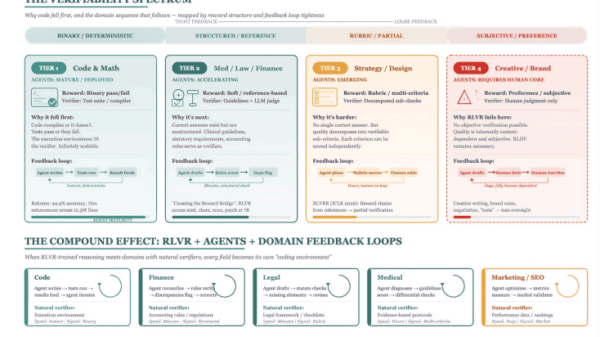

GENIUS is built around three core primitives: implicit pattern induction, ad-hoc constraint execution, and contextual knowledge adaptation. Implicit Pattern Induction tasks require models to infer unstated visual preferences from a series of images. Ad-hoc Constraint Execution tasks present abstract constraints that demand logical reasoning. Contextual Knowledge Adaptation tasks evaluate a model’s ability to modify its behavior based on contextual cues, even when those cues contradict pre-existing knowledge.
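One way to picture this three-part structure is as a tagged set of results that is summarized per primitive. The sketch below is illustrative only: the `Primitive` enum, the `SampleResult` record, and the 0-to-1 `score` field are assumptions made for this example, not the data format or scoring scheme the researchers use.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Primitive(Enum):
    # The three primitives named in the article.
    IMPLICIT_PATTERN_INDUCTION = "implicit_pattern_induction"
    ADHOC_CONSTRAINT_EXECUTION = "adhoc_constraint_execution"
    CONTEXTUAL_KNOWLEDGE_ADAPTATION = "contextual_knowledge_adaptation"


@dataclass
class SampleResult:
    sample_id: str        # hypothetical identifier
    primitive: Primitive  # which primitive the sample targets
    score: float          # hypothetical per-sample score in [0, 1]


def mean_score_by_primitive(results):
    """Average the (hypothetical) scores within each primitive."""
    buckets = defaultdict(list)
    for r in results:
        buckets[r.primitive].append(r.score)
    return {p: sum(s) / len(s) for p, s in buckets.items()}


# Made-up results, purely to show the aggregation.
results = [
    SampleResult("s1", Primitive.IMPLICIT_PATTERN_INDUCTION, 0.5),
    SampleResult("s2", Primitive.IMPLICIT_PATTERN_INDUCTION, 1.0),
    SampleResult("s3", Primitive.ADHOC_CONSTRAINT_EXECUTION, 0.25),
]
summary = mean_score_by_primitive(results)
```

Reporting one number per primitive, rather than a single overall score, is what lets a benchmark of this kind show which type of reasoning a model is weakest at.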

Each sample within GENIUS is designed to challenge AI systems in ways that reflect real-world complexity. Samples combine multimodal inputs, so models must draw on information across images, text, and symbols. The aim is to simulate the unpredictable environments in which AI is expected to operate, thereby pushing the boundaries of machine reasoning and adaptability.

The researchers conducted a systematic evaluation using the GENIUS suite and found significant gaps in the ability of existing models to reason and adjust dynamically. Diagnostic analysis traced the core issue to a limited comprehension of contextual relationships. This suggests that many models struggle to interpret and apply the information supplied with a task, a critical shortfall in their fluid intelligence.

To address these limitations, the research team introduced a training-free attention intervention strategy designed to improve context comprehension. Though this was not the primary focus of the work, it offers a promising avenue for enhancing the adaptability of multimodal models. The implications of the research extend beyond higher benchmark scores; they point to the need for AI systems capable of genuine understanding and reasoning.
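The article does not describe how the intervention works. As a generic illustration of what "training-free" can mean, the sketch below shifts attention toward context tokens at inference time by adding a bias to their attention logits before the softmax, without changing any model weights. The function names, the `context_mask`, and the `bias` value are all assumptions for this example, and this should not be read as the researchers' actual method.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def boost_context_attention(logits, context_mask, bias=1.0):
    """Add a constant bias to the logits of context tokens, then renormalize.

    Hypothetical illustration: no parameters are trained or updated.
    """
    adjusted = [x + bias if is_ctx else x
                for x, is_ctx in zip(logits, context_mask)]
    return softmax(adjusted)


# Four tokens with equal raw attention; the first two are "context" tokens.
logits = [0.0, 0.0, 0.0, 0.0]
context_mask = [True, True, False, False]

before = softmax(logits)
after = boost_context_attention(logits, context_mask, bias=1.0)
```

After the adjustment the weights still sum to one, but the context tokens receive a larger share than the others. The appeal of this kind of approach is that it can be applied to an existing model without retraining.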

As AI technology continues to evolve, the significance of this work lies in its diagnostic approach, isolating specific cognitive weaknesses in generative models. This research provides a clear roadmap for future developments, aiming to enhance AI’s ability to think and respond dynamically rather than simply mimic thought processes. The findings suggest that while scaling datasets and model sizes has propelled AI advancements, true intelligence requires more than just sophisticated pattern recognition; it necessitates the capability to generate novel solutions and apply underlying principles flexibly.

The potential applications of these insights are vast, spanning areas such as robotics, autonomous systems, and creative problem-solving. As industries increasingly integrate AI into unpredictable real-world scenarios, understanding and enhancing fluid intelligence may prove crucial. The introduction of GENIUS marks a significant step toward refining AI capabilities, ensuring that future systems are not just efficient but genuinely intelligent.
