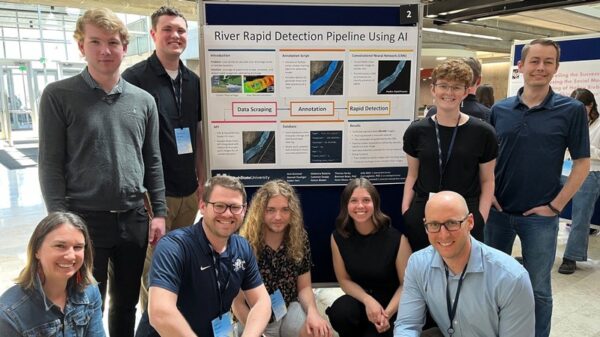

Researchers from the University of Massachusetts Amherst have introduced Diffence, a new defense against membership inference attacks (MIAs). MIAs pose a significant privacy risk for deep learning models. The framework was presented in Session 12C at the Network and Distributed System Security Symposium (NDSS) 2025. In an MIA, an adversary tries to determine whether a specific data point was included in a model’s training set, which can expose sensitive information.

The paper, by Yuefeng Peng, Ali Naseh, and Amir Houmansadr, emphasizes protecting privacy without compromising model utility. Existing defenses modify either the training process or the model’s outputs after inference, and according to the authors they fail to strike an adequate balance between privacy and performance. Diffence instead operates before inference: it modifies input samples before they reach the model and leaves the model itself unchanged.
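To make the pre-inference idea concrete, here is a minimal Python sketch of the general pattern. It is not Diffence’s actual implementation. A wrapper passes every query through a transformation before the unmodified model sees it. The names `make_pre_inference_defense`, `toy_model`, and `toy_regenerate` are hypothetical. The toy regenerator, which simply rounds features, is only a placeholder for Diffence’s learned re-generation step.

```python
import math
from typing import Callable, List

Vector = List[float]
Model = Callable[[Vector], Vector]


def make_pre_inference_defense(model: Model, regenerate: Callable[[Vector], Vector]) -> Model:
    """Return a callable with the same interface as `model` that transforms
    every input with `regenerate` before the (unchanged) model sees it."""
    def defended(x: Vector) -> Vector:
        return model(regenerate(x))
    return defended


def toy_model(x: Vector) -> Vector:
    """Hypothetical two-class classifier: softmax over the scores [sum(x), -sum(x)]."""
    s = sum(x)
    exp_pos, exp_neg = math.exp(s), math.exp(-s)
    total = exp_pos + exp_neg
    return [exp_pos / total, exp_neg / total]


def toy_regenerate(x: Vector) -> Vector:
    """Hypothetical placeholder for a learned re-generation step:
    here it just rounds each feature to one decimal place."""
    return [round(v, 1) for v in x]


if __name__ == "__main__":
    defended = make_pre_inference_defense(toy_model, toy_regenerate)
    print(toy_model([0.3, 0.44]))  # undefended prediction on the raw input
    print(defended([0.3, 0.44]))   # prediction on the transformed input
```

The wrapped `defended` callable has the same signature as `model`. It can therefore sit in front of any classifier, including one trained with a training-time defense. This is the kind of combination the researchers evaluated with SELENA.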

The approach aims to reduce the differences between member and non-member inputs, which are exactly what MIAs exploit. By re-generating each input sample before it is classified, Diffence improves membership privacy while preserving the accuracy and confidence of the model’s predictions. In extensive experiments across three datasets, Diffence reduced MIA attack accuracy against an undefended model by an average of 15.8% and attack area under the curve (AUC) by 14.0%.
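Attack accuracy and AUC are standard ways to score an MIA. The sketch below illustrates both with a simple confidence-threshold attack. This attack is a common baseline and is not necessarily one of the attacks used in the paper. The attacker guesses "member" whenever the model’s confidence on a sample is at or above a threshold. Accuracy is reported at the best threshold. AUC is the probability that a randomly chosen member receives a higher score than a randomly chosen non-member. The scores are made-up toy values, not results from the paper. With equal-sized member and non-member sets (an assumption here), 0.5 is chance level for both metrics. A defense aims to push both metrics toward 0.5 by making the two score distributions harder to tell apart.

```python
from typing import Sequence


def attack_accuracy(members: Sequence[float], nonmembers: Sequence[float], threshold: float) -> float:
    """Accuracy of guessing 'member' whenever score >= threshold."""
    correct = sum(s >= threshold for s in members) + sum(s < threshold for s in nonmembers)
    return correct / (len(members) + len(nonmembers))


def best_threshold_accuracy(members: Sequence[float], nonmembers: Sequence[float]) -> float:
    """Highest accuracy over every threshold that can change the attack's guesses."""
    candidates = sorted(set(members) | set(nonmembers)) + [float("inf")]
    return max(attack_accuracy(members, nonmembers, t) for t in candidates)


def attack_auc(members: Sequence[float], nonmembers: Sequence[float]) -> float:
    """ROC AUC via pairwise comparison: P(member score > non-member score), ties count half."""
    wins = 0.0
    for m in members:
        for n in nonmembers:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(members) * len(nonmembers))


# Toy confidence scores (illustrative only, not data from the paper).
member_conf = [0.99, 0.95, 0.90, 0.70]
nonmember_conf = [0.80, 0.60, 0.55, 0.40]

print(best_threshold_accuracy(member_conf, nonmember_conf))  # 0.875
print(attack_auc(member_conf, nonmember_conf))               # 0.9375
```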

Diffence is also compatible with existing defense mechanisms. When the researchers combined it with SELENA, a state-of-the-art defense, it reduced MIA attack accuracy by a further 9.3% and AUC by 10.0%. This result suggests that Diffence can serve as a plug-and-play addition to other defenses for improving data privacy in machine learning applications.

Diffence also adds little computational overhead, averaging only 57 milliseconds of extra inference time per sample. That efficiency makes it a viable option for real-world deployments, where both latency and privacy matter.

The NDSS Symposium serves as a platform for the exchange of ideas among researchers and practitioners focused on the practical aspects of network and distributed system security. By fostering collaboration within the community, the symposium aims to advance the deployment of cutting-edge security technologies.

Diffence could represent a significant step forward in addressing privacy in AI. As deep learning models become more capable, the need grows for effective protection against leaks of the sensitive data they are trained on. Continued research in this area is likely to prompt further innovation and discussion on how to safeguard sensitive information while still realizing the full potential of artificial intelligence.
