Governance, risk, and compliance (GRC) teams are under growing pressure to manage complex audits, resolve supplier issues, maintain complete training records, and meet evolving customer and regulatory requirements. This workload has pushed teams toward repeatable, sustainable practices in risk and compliance work, laying the groundwork for more effective governance.

Artificial intelligence (AI) is emerging as a potential ally for these overburdened teams. Implemented well, AI can substantially reduce the time spent drafting, summarizing, organizing records, and gathering evidence from disparate systems. Used poorly, it can create new problems, such as steering teams toward the wrong corrective actions or weakening documentation that must withstand audit scrutiny. Using AI successfully requires clear guidelines and review steps built into existing workflows.

Quality teams in manufacturing and industrial sectors often bear the brunt of this pressure, serving as the “system of record” for various operational components beyond mere quality management. Their responsibilities encompass tracking internal controls, compliance obligations, supplier performance, customer specifications, incident investigations, and training evidence. Consequently, they face two significant hurdles: the documentation-heavy nature of their work and the fragmentation of data across the organization.

Documentation consumes considerable time, diverting attention from the judgment and follow-through that add value. At the same time, critical information is scattered across audit workpapers, corrective and preventive action (CAPA) narratives, supplier questionnaires, incident logs, emails, PDFs, and spreadsheets. This fragmentation slows responses and raises the risk of missing trends that matter to the organization.

AI is well suited to these challenges, particularly where work involves large volumes of text, repetitive administrative tasks, and pattern detection across records. It strengthens GRC operations most when it supports established workflows rather than imposing new ones. For example, AI can speed up documentation without sacrificing consistency, letting quality teams quickly produce first drafts of control descriptions, test procedures, and policy updates. Human oversight remains essential: a final review confirms that generated content meets organizational standards.

Furthermore, AI can streamline the intake process for lengthy documents such as supplier security assessments and quality manuals by extracting key information and structuring it into manageable fields. This efficiency reduces the re-keying of data and accelerates cycle times. AI can also assist in cleaning records and minimizing duplicates, flagging overlaps that could lead to wasted efforts on redundant reporting. By linking various program elements—risks, controls, incidents, and audits—AI can help maintain updated mappings and reduce the manual effort required to manage them.
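As an illustration of duplicate flagging, the sketch below compares record descriptions pairwise and flags near-matches for a person to review. It is a minimal sketch under stated assumptions: it uses plain text similarity from Python's standard-library `difflib` rather than an AI model, and the `Record` fields, the 0.85 threshold, and the function names are hypothetical. The point it shows is the control, not the matching technique: overlaps are flagged, never merged automatically.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations


@dataclass
class Record:
    """Hypothetical GRC record, e.g. a CAPA or incident entry."""
    record_id: str
    source: str        # system or channel the record came from
    description: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't hide duplicates."""
    return " ".join(text.lower().split())


def flag_possible_duplicates(
    records: list[Record], threshold: float = 0.85
) -> list[tuple[str, str, float]]:
    """Return (id_a, id_b, similarity) for description pairs at or above the threshold.

    The result is a review queue for a human; nothing is merged or deleted here.
    """
    flagged = []
    for a, b in combinations(records, 2):
        ratio = SequenceMatcher(
            None, normalize(a.description), normalize(b.description)
        ).ratio()
        if ratio >= threshold:
            flagged.append((a.record_id, b.record_id, round(ratio, 2)))
    return flagged


# Example: the same supplier issue logged once in the QMS and once from an email.
records = [
    Record("CAPA-101", "QMS", "Supplier shipped parts with missing certificate of conformance"),
    Record("INC-207", "Email", "supplier shipped parts with missing  certificate of conformance."),
    Record("CAPA-102", "QMS", "Calibration overdue on torque wrench in line 3"),
]
flagged = flag_possible_duplicates(records)
```

Pairwise comparison grows quadratically with the number of records, so this approach only suits small batches; larger record sets would need some form of blocking (for example, comparing only records tied to the same supplier) before similarity scoring.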

Quality leaders need coherent narratives, not just data. AI can help generate summaries, highlight emerging trends, and surface critical exceptions, so teams can focus on acting on insights rather than compiling reports. Trust and explainability, however, remain essential to responsible adoption in quality and compliance operations. Because team members often sign off on the results, they must be confident that AI outputs are controlled and defensible.

A practical approach to AI adoption does not require an expansive new governance program, only a small set of non-negotiable principles. Leaders should define which sensitive information must never be entered into AI systems and when anonymization is required. Accountability for outcomes must rest with human reviewers, who validate AI-generated summaries and proposed mappings for accuracy. The workflow should also maintain an audit trail recording what data was used, who reviewed the output, and what changes were made.
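An audit trail of this kind can be as simple as one structured entry per AI-assisted work product. The sketch below is hypothetical: the `AIAuditEntry` class and its field names are illustrative assumptions, not a standard schema or any vendor's API. It records the inputs used, whether they were anonymized, the AI draft, and the reviewer's name, final text, and changes, and it treats the output as unapproved until a named person signs off.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIAuditEntry:
    """Hypothetical audit-trail entry for one AI-assisted work product."""
    artifact_id: str          # e.g. a control description or CAPA summary ID
    inputs_used: list[str]    # documents or records supplied to the AI tool
    anonymized: bool          # whether sensitive fields were masked before use
    ai_draft: str             # text exactly as the AI tool generated it
    reviewer: str = ""        # person accountable for the final version
    final_text: str = ""      # text after human review
    changes_made: str = ""    # reviewer's note on what was corrected
    reviewed_at: str = ""     # UTC timestamp of the sign-off

    def approve(self, reviewer: str, final_text: str, changes_made: str) -> None:
        """Record the human review; the AI draft is not final until this runs."""
        self.reviewer = reviewer
        self.final_text = final_text
        self.changes_made = changes_made
        self.reviewed_at = datetime.now(timezone.utc).isoformat()

    @property
    def is_approved(self) -> bool:
        """True only once a named reviewer has supplied a final text."""
        return bool(self.reviewer and self.final_text)

    def to_json(self) -> str:
        """Serialize the entry so it can be stored alongside the record it documents."""
        return json.dumps(asdict(self), indent=2)


# Example: an AI-drafted control description reviewed and corrected by a person.
entry = AIAuditEntry(
    artifact_id="CTRL-014",
    inputs_used=["supplier_questionnaire.pdf", "CAPA-101"],
    anonymized=True,
    ai_draft="Draft control description generated by the AI tool.",
)
entry.approve(
    reviewer="Quality reviewer",
    final_text="Control description as corrected and approved.",
    changes_made="Corrected the control owner and test frequency.",
)
record_json = entry.to_json()
```

Keeping the original AI draft next to the approved text is a deliberate choice in this sketch: it lets an auditor see exactly what the reviewer changed, rather than only the final version.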

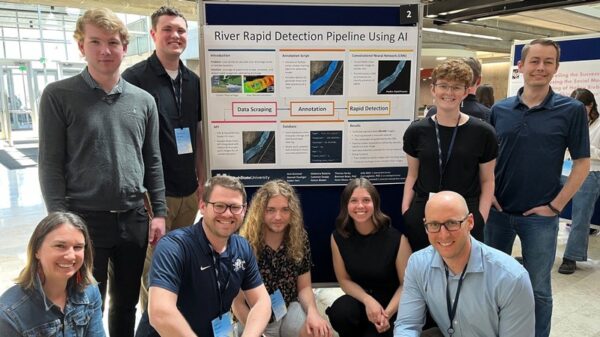

Piloting AI in low-risk areas lets teams test its effectiveness before scaling up to critical applications where errors could affect compliance or safety. Input quality also matters: AI adds value only when it works from consistent, complete records. Standardizing terminology, record structures, and record ownership is therefore an essential step toward a responsible rollout.

AI can also help preserve institutional knowledge and support long-tenured team members in codifying practices, reducing risks associated with turnover and ensuring continuity in operations. Quality programs already function within a governance, risk, and compliance framework, emphasizing the need for documented requirements, controlled workflows, and sign-offs. AI’s role is to enhance this structure by streamlining documentation, connecting related records, and improving visibility without adding unnecessary complexity to reporting cycles.

Ultimately, the effective adoption of AI in quality operations should focus on selective implementation to maximize benefits while maintaining control. By doing so, AI can serve as a valuable partner for quality leaders, facilitating faster documentation, cleaner records, shorter cycle times, improved visibility, and enhanced audit readiness.
