This is a three-part series about AI governance in banking QA and software testing. It examines why modern AI systems are colliding with regulatory expectations, how supervisors are responding, and why QA teams inside banks and financial services firms are increasingly being asked to carry responsibility for AI risk, control and evidence. Click here for Part I and Part II.

By the time AI governance reaches the QA function, it is no longer a local or isolated issue. It is a global one. International banking groups operate across jurisdictions with different regulatory philosophies, uneven enforcement timelines, and diverging expectations around AI transparency and control. Yet the underlying technologies they deploy—machine-learning models, generative AI systems, synthetic data pipelines, and agentic tools—behave in broadly similar ways everywhere.

This creates a structural challenge. A system that passes governance scrutiny in one market may fail it in another. QA teams are increasingly responsible for reconciling those differences through testing, evidence, and control frameworks that can withstand scrutiny across borders. Last month's World Economic Forum (WEF) was explicit that AI governance in finance is no longer a future concern. In its most recent assessment, the WEF warned that regulators are increasingly focused on whether financial institutions can demonstrate that AI systems are reliable, explainable, and resilient throughout their lifecycle.

For QA teams, the implication is clear. Testing is no longer a downstream activity; it is becoming the mechanism through which trust is built and maintained. The WEF’s analysis highlights that gaps between AI ambition and operational readiness often surface in testing. When firms struggle to evidence control, oversight, or accountability, those gaps become testing failures rather than abstract governance issues. This framing aligns closely with Jennifer J.K.’s critique from Part I. If regulators expect evidence chains that AI architectures cannot always provide, QA teams become the first place where that mismatch is exposed.

The WEF also emphasized that AI risks evolve over time. Unlike deterministic systems, AI models can change behavior as data shifts or as systems interact with other models. Static validation is therefore insufficient. Continuous testing and monitoring become essential governance tools.

Global control and industry convergence

Some large financial institutions are already responding by embedding governance directly into engineering and testing practices. As one of the world’s largest insurers, Allianz offers a useful reference point. Operating across more than 70 countries, the group has little choice but to treat AI governance as a global discipline rather than a local compliance exercise. Philipp Kroetz, CEO of Allianz Direct, has described the organization’s approach as one rooted in outcome discipline, saying that “the most impactful decision was to be stubborn about the outcome, and to never waver on what good looks like.” That insistence on clarity has shaped how data quality, testing, and AI governance are embedded across the business.

For QA teams, Allianz’s emphasis on data lineage, consistency, and traceability is particularly relevant. The firm has invested heavily in shared data models, business glossaries, and data catalogs that allow testing teams to understand where data comes from, how it is transformed, and how it is used in models. This infrastructure supports AI governance not through abstract principles but through testable artifacts. Models can only be validated if their inputs are trusted. Test data can only support resilience testing if its provenance is understood.

Christoph Weber, Chief Transformation Officer at Allianz Direct, has linked this directly to execution, saying success depended on “the combination of technical excellence, sophisticated IT infrastructure, and advanced digital marketing capabilities, along with robust execution and global delivery in a compliant way.” For QA organizations inside banks, Allianz’s approach illustrates a broader lesson: governance scales only when it is engineered.

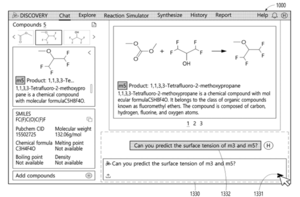

The governance challenge is not unique to finance. Highly regulated sectors are converging on similar conclusions. At AstraZeneca, Director of Testing Strategy Vishali Khiroya has described how AI governance is being integrated into testing practices rather than treated as a separate control layer. Discussing the company’s internal AI tooling, she emphasized that “whatever tech you might be talking about, it is the testing of those that we internally do as well.” That continuity matters. Khiroya noted that while AI introduces new risks, mature testing principles still apply.

“We adapt and we follow pretty much the same regulatory principles,” she explained in a recent QA Financial podcast, pointing to standard operating procedures, documentation, and controlled use of test data. Khiroya drew a clear distinction between adopting AI within testing and testing AI itself, observing that “AI can be seen in two lights within testing,” both of which must sit within governance boundaries. Her insistence on human oversight echoes regulatory expectations across financial services: “Always having that human in the loop,” she said, “I think that will never change.”

Across regulators, industry bodies, vendors, and lawmakers, one pattern stands out: AI governance fails without testability. Jennifer J.K.'s critique provides the conceptual frame: if AI systems cannot meet traditional explainability demands, governance must adapt. But until regulatory frameworks fully reflect that reality, QA teams will continue to act as translators between law and technology. Testing artifacts are becoming governance artifacts. Test coverage becomes evidence. Monitoring dashboards become assurance. Stress tests become regulatory signals.

This is why AI governance has become a global QA concern—not because QA teams asked for it, but because they are the only function positioned to make AI risk visible, measurable, and defensible across borders. As banks continue to scale AI, the defining question will not be how much AI they deploy, but how convincingly they can demonstrate control. Increasingly, that answer will be written in test results, not policy statements.

This was the final installment of our three-part series about AI governance in banking QA and software testing. Click here for Part I and Part II.
