In a significant shift from its historical role, the White House Office of Science and Technology Policy (OSTP) has requested public input regarding regulations that may hinder the adoption of artificial intelligence (AI) in the United States. This request, made during a Senate Commerce Subcommittee hearing titled “AI’ve Got a Plan: America’s AI Action Plan” on September 10, 2025, has drawn criticism amid rising concerns about the unregulated deployment of AI technologies.

The public response has been immediate and critical. The American Council on Education emphasized the need for human oversight in administrative processes and strong privacy protections. The Association for Computing Machinery warned that AI could exacerbate online scams, enable deepfake pornography, and violate personal privacy, and it proposed an enforceable, tiered governance approach along with a regulatory framework for AI. Individual commenters called for clear legal frameworks to ensure that AI policy respects the dignity, safety, and legal rights of all Americans. Civil rights groups cautioned that AI systems used in housing and lending must ensure fairness, transparency, and accountability to prevent “algorithmic redlining and digital discrimination.”

Critics argue that the OSTP’s request for information is at odds with the core mission established for it in 1976: to advise the president on the effects of science and technology on domestic and international affairs. The OSTP is tasked with providing accurate, relevant, and timely scientific and technical advice, a responsibility that requires rigorous evidence gathering and expert consultation, not the promotion of predetermined deregulatory conclusions.

Historically, the OSTP has addressed critical societal challenges while fostering scientific innovation. Its past accomplishments include the first national technology policy under former President George H.W. Bush, initiatives on electric vehicles and nanotechnology during the Clinton administration, and the launch of the “science of science policy” effort during George W. Bush’s presidency. The Obama administration notably released a landmark 2016 report, “Preparing for the Future of Artificial Intelligence,” which surveyed the state of AI and its opportunities and challenges, and called for public engagement and attention to AI’s impact on the workforce.

Building on these efforts, the first Trump administration rolled out the “American AI Initiative,” which expanded research investments and established national AI research institutes. It also issued regulatory guidance governing private-sector AI development and federal AI use, including prohibitions on unsafe systems. The Biden administration continued this trajectory, emphasizing public involvement in shaping how AI is used and advocating for an AI Bill of Rights to ensure necessary safeguards.

Despite these advances, many in the scientific community have voiced concern over the current administration’s perceived retreat from funding basic research and innovation across many fields, including computing research. The OSTP’s recent call for public input has alarmed experts because it appears to prioritize deregulation over the rigorous standards needed for safe AI deployment. The “Gold Standard Science” framework promoted by the Trump OSTP offers critical guardrails for evaluating AI systems, yet many commercial AI products, especially large language models, may not meet these standards because they lack transparency and reproducibility.

Public sentiment reflects a growing demand for AI regulation. Recent surveys indicate that 80% of U.S. adults believe the government should enforce rules for AI safety and data security, even if doing so slows AI development. A Pew poll found that 55% of U.S. adults and 57% of AI experts want more control over how AI is used in their lives, citing concerns about potential misuse, privacy violations, and threats from emerging technologies such as deepfake pornography.

Distinguished AI researchers, including Nobel laureate Geoffrey Hinton and Turing Award winner Yoshua Bengio, have underscored the urgent need for stronger safeguards. Nobel laureate Daron Acemoglu has pointed out that regulation is crucial for ensuring that the benefits of AI innovation are distributed equitably. However, the OSTP’s recent request frames regulation as an obstacle rather than a necessity, undermining the consensus among the public and experts that AI requires more oversight.

Looking ahead, experts believe that the OSTP should reconsider its approach. It is vital that the OSTP withdraw the current request for information and initiate a new one focused on updating U.S. laws and regulations to address AI challenges. Gathering evidence from leading scientific experts and working with Congress to craft a modern regulatory framework that aligns with the mission of the OSTP could help fortify American leadership in AI. As the technology evolves, ensuring that AI serves the interests of the American people should be the priority, addressing both innovation and the safeguards necessary to protect individuals.

See also SAI360 Acquires Plural Policy to Enhance AI-Driven Regulatory Compliance Solutions

SAI360 Acquires Plural Policy to Enhance AI-Driven Regulatory Compliance Solutions Federal Government Announces National AI Plan, Relies on Existing Laws to Manage Risks

Federal Government Announces National AI Plan, Relies on Existing Laws to Manage Risks Trump Administration Considers Executive Order to Ban State AI Regulations Amid Bipartisan Backlash

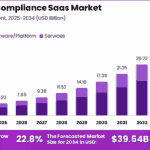

Trump Administration Considers Executive Order to Ban State AI Regulations Amid Bipartisan Backlash AI Compliance SaaS Market Reaches $5.07B, Projected to Grow 22.8% Annually Through 2034

AI Compliance SaaS Market Reaches $5.07B, Projected to Grow 22.8% Annually Through 2034 Houston County Adopts New Policy Manual, Signs $43K UKG HR Contract, and Backs Road Grants

Houston County Adopts New Policy Manual, Signs $43K UKG HR Contract, and Backs Road Grants