A new dispute has emerged between the United States Department of Defense and leading AI company Anthropic, raising fresh questions about how powerful artificial intelligence should be controlled. Reports indicate that the disagreement centers on how Anthropic manages the safety testing and monitoring of its AI systems, with the Pentagon expressing concerns over potential risks associated with advanced AI technology.

The Defense Department has reportedly warned Anthropic that it could lose government cooperation if it fails to fully comply with specific safety requirements. The warning underscores growing urgency among US officials about regulating AI companies, particularly because even minor errors in advanced systems used in areas tied to national security could have serious consequences.

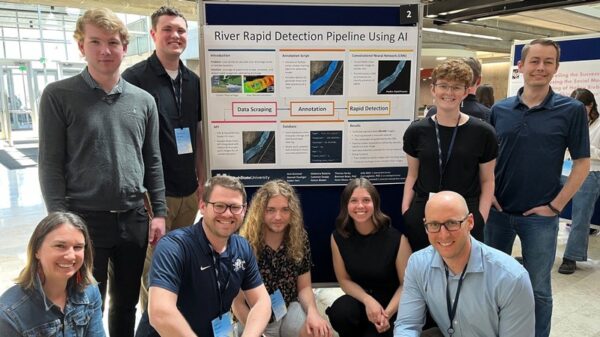

Anthropic, recognized for developing sophisticated AI systems capable of understanding and generating human-like text, is at the forefront of technology that is increasingly integrated into various sectors, including business, education, research, and defense. As these tools gain influence, government officials argue that stringent safeguards are essential to prevent misuse or unintended harm.

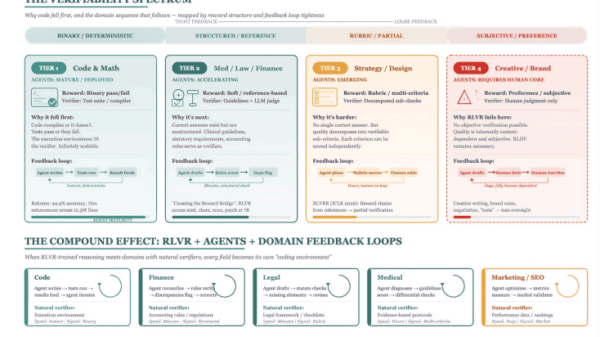

The Pentagon’s demands highlight a broader initiative to establish clear rules, stronger protections, and enhanced transparency in the development of AI technologies. Defense officials maintain that the stakes are exceptionally high, as the application of advanced AI could lead to serious repercussions if not properly supervised. They emphasize the need for AI companies to collaborate with authorities to ensure responsible use of these powerful systems.

In response, Anthropic has asserted its commitment to safety, claiming that it has already implemented robust protective measures within its AI models. However, the company appears wary of adhering to regulations that might hinder innovation or slow the pace of research. Like many tech firms, Anthropic seeks to balance the necessity for regulatory compliance with the desire to maintain a degree of autonomy in its operations.

This dispute reflects a much larger, ongoing conversation about the balance between rapid AI advancement and the imperative for safety and responsibility. While artificial intelligence has the potential to deliver substantial benefits, from advances in healthcare to higher productivity, experts caution that the risks could escalate in tandem with technological progress if adequate safeguards are not put in place.

Discussions between the Pentagon and Anthropic are ongoing, and their outcome could significantly influence how AI companies engage with government entities. The resolution may also serve as a precedent for other nations grappling with the challenge of managing powerful AI systems safely and effectively.

As AI continues to develop rapidly, safety, transparency, and accountability are becoming as crucial as innovation itself. Regulators and developers will need to consider carefully how to guide AI advances so that the technology's potential is harnessed while its risks are mitigated.
