As the integration of artificial intelligence (AI) into mental health services accelerates, the establishment of comprehensive policies and regulations is becoming increasingly urgent. Policymakers must grapple with a myriad of issues that arise from the use of AI in providing mental health guidance, especially as many states rush to enact laws that often provide only fragmented protections.

Current Regulatory Landscape

Recent developments point to a patchwork of state-level regulations that can fairly be described as "hit-or-miss." Many of these laws overlook significant aspects of AI's role in mental health, leaving regulatory gaps and confusion among stakeholders. This inconsistency undermines the intent behind the regulations and leaves both AI developers and users uncertain about what practices are permitted.

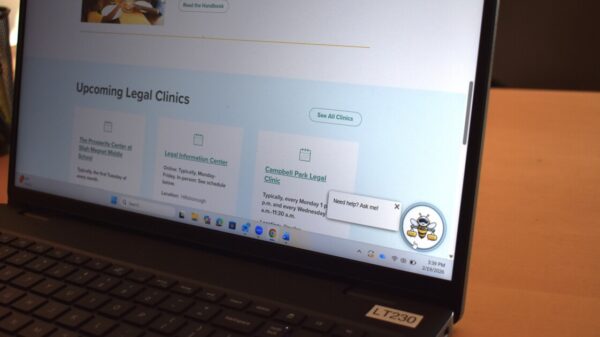

In light of this, a comprehensive framework for AI policy in mental health has been proposed. It is designed to help lawmakers, policymakers, and researchers across fields such as governance, ethics, and the behavioral sciences navigate the complexities of AI in mental health.

The Role of AI in Mental Health

AI technologies, particularly generative AI and large language models (LLMs), have rapidly gained traction as sources of mental health advice. ChatGPT alone reports over 800 million weekly active users, a significant portion of whom use the platform for mental health-related inquiries. Because these AI systems are often available at low or no cost and at any hour, users can seek support whenever they want, in sharp contrast to traditional therapy, which is typically scheduled in advance and can be costly.

There are two main types of AI applications in this field: generic AI, which is used for many tasks including casual mental health guidance, and customized AI designed specifically for therapeutic purposes. Therapists are increasingly incorporating AI into their practices, either by encouraging clients to use generic AI or by employing tailored systems. However, this integration raises ethical concerns about the potential erosion of the therapist-client relationship.

Concerns and Legal Challenges

Despite the promise of AI in mental health, significant concerns persist. For instance, a lawsuit filed against OpenAI in August highlighted the risks of inadequate AI safeguards, particularly when AI dispenses cognitive or mental health advice. Critics warn that poorly managed AI can contribute to harmful outcomes, such as reinforcing delusions or encouraging self-harm.

States such as Illinois, Nevada, and Utah have enacted laws, but many of these lack comprehensiveness, producing a confusing landscape of rules that vary widely from state to state. The absence of federal legislation further complicates matters and risks a chaotic legal environment as each state crafts its own standards. A unified federal law could reduce these inconsistencies, but efforts to develop such legislation remain stalled.

A Comprehensive Policy Framework

To address these challenges, a structured policy framework encompassing twelve categories has been proposed:

- Scope of Regulated Activities: Clearly define what constitutes AI in mental health to prevent loopholes.

- Licensing and Accountability: Specify who is responsible for the actions of AI, particularly in delivering mental health advice.

- Safety and Efficacy: Establish risk levels for AI applications, with corresponding safety measures and efficacy requirements.

- Data Privacy: Ensure that user data is protected and confidentiality is maintained.

- Transparency: Mandate clear disclosure of AI limitations and risks to users.

- Crisis Response: Define protocols for handling emergencies, including self-harm situations.

- Prohibitions: Clearly outline what AI is not permitted to do, such as diagnosing without human intervention.

- Consumer Protection: Protect users from misleading claims and ensure accurate marketing practices.

- Equity and Bias: Implement measures to mitigate algorithmic biases affecting marginalized groups.

- Intellectual Property: Address ownership rights concerning data and AI models.

- Cross-State Practice: Clarify jurisdictional issues related to AI usage across state lines.

- Enforcement: Develop mechanisms for compliance and penalties for violations.

This framework aims to provide a holistic approach to AI regulation in mental health so that no major facet is overlooked. Lawmakers must remain vigilant in crafting these policies to avoid the gaps that weakened earlier laws and to ensure that the evolving landscape of AI in mental health is navigated responsibly.

As J. William Fulbright once said, "Law is the essential foundation of stability and order." With the rapid rise of AI in mental health services, the need for sound regulatory frameworks has never been more urgent.
