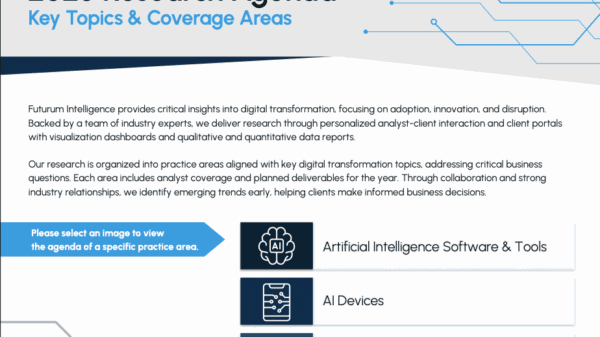

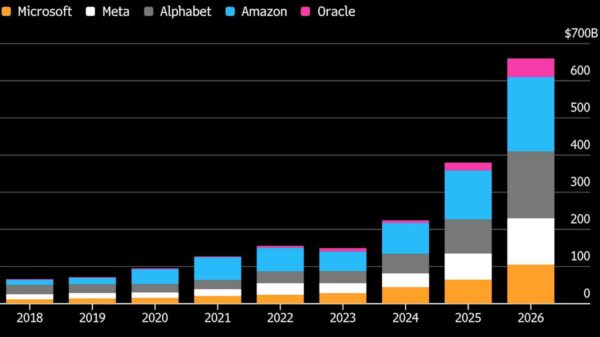

The Software Lifecycle Engineering market is entering a transformative phase, shifting from AI experimentation to AI accountability across the Software Development Life Cycle (SDLC). By 2026, enterprises will need to demonstrate not only AI-driven business value and operational impact but also measurable risk reduction in their development processes. Vendors that cannot connect their AI investments to sustainable outcomes may face intensified scrutiny from customers, buyers, and boards.

At the same time, the industry is working to industrialize AI systems to meet demanding enterprise expectations. Vendors are assembling a new software stack dedicated to AI, spanning agents, workflows, management, and infrastructure. However, many of these frameworks remain incomplete. Prompts, large language models (LLMs), and agent builders alone do not yield production-ready systems. The harder task now is designing AI-native lifecycle platforms that incorporate agent identity, control planes, behavioral governance, security safeguards, testing protocols, operational management, observability, and end-to-end lifecycle control. Decisions made in this area will either enable wide-scale agent adoption or impose constraints that stifle growth.

Such platform choices are not merely technical decisions; they are foundational commitments that influence how vendors build trust, scale deployments, and maintain relevance as buyers gravitate toward fewer AI-native lifecycle platforms.

Key Issues for 2026

The upcoming 6 to 12 months will be pivotal in determining whether the software development landscape transitions from traditional code-centric execution to intent-directed systems centered around AI and agents. Vendors face critical decisions regarding whether to uphold familiar developer patterns and workflows or to introduce new AI-centered primitives for planning, delegation, and execution that will allow AI to handle end-to-end collaborative tasks effectively.

The vendors that succeed will be those that support human-AI collaboration across code, specifications, security, and runtime behavior while giving customers a clear strategy and roadmap for moving to these AI-centric development models and architectures. Failing to make this transition path explicit could trap customers in architectures that cannot scale agent-centered systems, capping the real impact of AI and eroding long-term value.

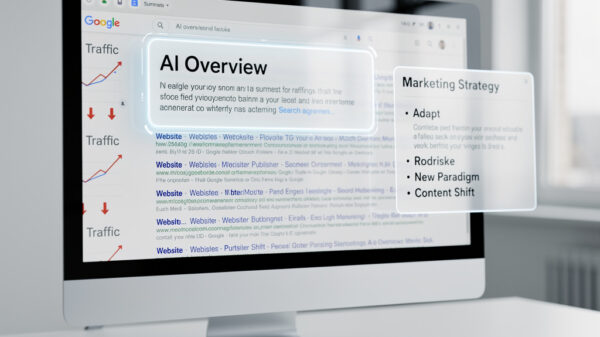

Another significant shift is the movement of AI from being a feature to becoming a core capability integrated throughout the SDLC. Vendors engaged in application development, testing, security, operations, and platform engineering face a crucial structural decision: whether to layer AI into existing tools or design solutions that position AI as a first-class execution capability within their respective areas of the lifecycle.

In the coming year, the competitive edge will come from shared context, persistent AI state, and cross-stage decision-making that let AI systems plan, act, and adapt across development, platform engineering, site reliability engineering, and operations. Platforms that fail to embed AI into workflows, policy enforcement, and operations will likely lose relevance as buyers consolidate around fewer AI-native lifecycle platforms. Once buyers have standardized on these platforms, late-stage rearchitecture becomes far more difficult and costly, limiting a vendor's market impact.

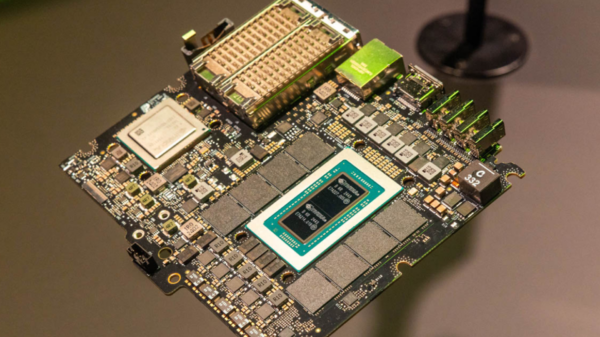

By 2026, AI-centered development is expected to solidify into a well-defined software stack that supports both software engineering and AI applications, establishing protocols for how intent is captured, how work is delegated to agents, and how execution is governed across the SDLC, infrastructure, DevOps, platform engineering, and operations. The industry is set to see the rise of agent-native infrastructure, playing a role similar to the one Kubernetes played in the microservices era, built around agent control planes, orchestration, and scalable operations. At the core of this stack will be an agent control plane that manages identity, permissions, memory, lifecycle, policy, and observability. Orchestration layers will coordinate specialized agents across the lifecycle phases of planning, building, testing, security, deployment, and operations, enabling parallel, asynchronous work under human oversight.
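The article describes this orchestration layer only at the level of architecture. As an illustration, here is a minimal Python sketch, under our own assumptions, of specialized agents working through the six lifecycle phases named above, with agents in the same phase running concurrently and a human approval gate before deployment. The `Orchestrator` class, the `make_stub_agent` helper, the `approve` callback, and the phase ordering are all hypothetical; the agents are stubs standing in for real model or tool calls and do not represent any vendor's API.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Awaitable, Callable

# Lifecycle phases as listed in the text; the ordering is an assumption.
PHASES = ["planning", "building", "testing", "security", "deployment", "operations"]

# Hypothetical agent type: an async function from a task description to a result.
Agent = Callable[[str], Awaitable[str]]

@dataclass
class PhaseResult:
    phase: str
    agent: str
    output: str

def make_stub_agent(name: str) -> Agent:
    """Stand-in for a real agent; a production agent would call a model or tools here."""
    async def run(task: str) -> str:
        await asyncio.sleep(0)  # yield to the event loop, as real I/O would
        return f"{name} handled: {task}"
    return run

@dataclass
class Orchestrator:
    agents: dict[str, list[tuple[str, Agent]]]  # phase -> [(agent name, agent)]
    approve: Callable[[str, list[PhaseResult]], bool]  # hypothetical human-in-the-loop gate
    gated_phases: set[str] = field(default_factory=lambda: {"deployment"})

    async def run(self, intent: str) -> list[PhaseResult]:
        history: list[PhaseResult] = []
        for phase in PHASES:
            # Human oversight: a reviewer sees prior results and can halt before a gated phase.
            if phase in self.gated_phases and not self.approve(phase, history):
                break
            specialists = self.agents.get(phase, [])
            # Agents within one phase run concurrently; phases run in order.
            outputs = await asyncio.gather(*(agent(f"{phase}: {intent}") for _, agent in specialists))
            history.extend(
                PhaseResult(phase, name, out) for (name, _), out in zip(specialists, outputs)
            )
        return history

agents = {p: [(f"{p}-agent", make_stub_agent(f"{p}-agent"))] for p in PHASES}
agents["testing"].append(("fuzz-agent", make_stub_agent("fuzz-agent")))

# A reviewer who declines the deployment gate stops the run after the security phase.
held = asyncio.run(Orchestrator(agents, approve=lambda phase, history: False).run("add rate limiting"))
print([r.phase for r in held])  # ['planning', 'building', 'testing', 'testing', 'security']
```

Gating a whole phase is only one possible design; a real control plane might instead attach approval policies to individual actions, which is closer to the scoped-authority model discussed next.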

The next 6 to 12 months are critical for establishing how agent environments will be controlled, observed, and trusted in production. Vendors are racing to define control planes that provide agent identity, scoped authority, behavioral constraints, and real-time observability, integrated directly into development and operational platforms. Success in this area will cement the viability of agent-based software at enterprise scale, while vendors that treat governance, security, and observability as secondary concerns risk being excluded from production deployments. The governance models adopted during this period will strongly influence whether agents are perceived as manageable systems or ungovernable risks.
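To make the four control-plane properties above concrete, the following is a minimal Python sketch under our own assumptions: each agent has a registered identity with an accountable owner, its authority is limited to an explicit set of actions, behavioral constraints can veto otherwise permitted actions, and every decision is appended to an audit log and pushed to subscribers for real-time observability. `ControlPlane`, `AgentIdentity`, `AuditEvent`, and the example agent and resource names are hypothetical and do not correspond to any specific product.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str  # accountable human or team for this agent

@dataclass(frozen=True)
class AuditEvent:
    timestamp: float
    agent_id: str
    action: str
    resource: str
    allowed: bool
    reason: str

# A behavioral constraint inspects (action, resource) and returns a denial reason, or None to allow.
Constraint = Callable[[str, str], Optional[str]]

class ControlPlane:
    """Hypothetical agent control plane: identity, scoped authority, constraints, audit events."""

    def __init__(self) -> None:
        self._identities: dict[str, AgentIdentity] = {}
        self._scopes: dict[str, frozenset[str]] = {}  # agent_id -> permitted actions
        self._constraints: list[Constraint] = []
        self._subscribers: list[Callable[[AuditEvent], None]] = []
        self.audit_log: list[AuditEvent] = []

    def register(self, identity: AgentIdentity, scopes: Iterable[str]) -> None:
        self._identities[identity.agent_id] = identity
        self._scopes[identity.agent_id] = frozenset(scopes)

    def add_constraint(self, constraint: Constraint) -> None:
        self._constraints.append(constraint)

    def subscribe(self, callback: Callable[[AuditEvent], None]) -> None:
        # Real-time observability: callbacks receive every decision as it happens.
        self._subscribers.append(callback)

    def authorize(self, agent_id: str, action: str, resource: str) -> bool:
        if agent_id not in self._identities:
            allowed, reason = False, "unknown agent identity"
        elif action not in self._scopes[agent_id]:
            allowed, reason = False, f"action '{action}' is outside granted scope"
        else:
            allowed, reason = True, "ok"
            for constraint in self._constraints:
                denial = constraint(action, resource)
                if denial is not None:
                    allowed, reason = False, denial
                    break
        event = AuditEvent(time.time(), agent_id, action, resource, allowed, reason)
        self.audit_log.append(event)
        for callback in self._subscribers:
            callback(event)
        return allowed

plane = ControlPlane()
plane.register(AgentIdentity("deploy-bot", owner="platform-team"), scopes={"read", "deploy"})
# Hypothetical behavioral constraint: production deploys are always escalated to a human.
plane.add_constraint(
    lambda action, resource: "production deploy requires human approval"
    if action == "deploy" and resource.startswith("prod/") else None
)
seen: list[AuditEvent] = []
plane.subscribe(seen.append)

decisions = [
    plane.authorize("deploy-bot", "deploy", "staging/api"),  # allowed
    plane.authorize("deploy-bot", "deploy", "prod/api"),     # denied by constraint
    plane.authorize("deploy-bot", "delete", "staging/api"),  # denied: outside scope
    plane.authorize("rogue-bot", "read", "staging/api"),     # denied: unknown identity
]
print(decisions)  # [True, False, False, False]
```

Keeping denials in the same audit stream as approvals is a deliberate choice here: it lets reviewers see attempted out-of-scope behavior, not just what succeeded.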

Over the next year, the future of agent-based ecosystems will hinge on whether they fragment or converge. While existing open standards continue to evolve, new open standards and open-source initiatives are expected to address interoperability gaps across agent harnesses, control planes, and policy enforcement. Vendors must strike a careful balance between leading new efforts, contributing to existing standards, and aligning with the broader ecosystem. Misjudging this balance could result in either ecosystem isolation or a loss of strategic control over core platform value.
