Anthropic has launched Claude Cowork, a new desktop agent that uses Claude Code to simplify agentic AI workflows for non-developers. The tool, currently in research preview for macOS, lets users queue tasks for Claude without waiting for it to finish previous requests, making it easier to fit the AI into everyday computer-based knowledge work. Users can also install a Chrome plugin that lets Claude navigate websites, and can connect third-party applications like Canva through Anthropic’s Connectors framework. A £20 subscription grants access to Claude Cowork, which was built in just over a week, showcasing how quickly products can be developed with Claude Code.

In another development, Anthropic has introduced Labs, aimed at fostering experimental products based on Claude’s capabilities. The initiative highlights the company’s commitment to building an AI ecosystem that goes beyond advanced models by standardizing practices like Model Context Protocol (MCP) and Claude Skills. The goal is to strengthen Anthropic’s position in products built around that ecosystem, a strategy that has proven successful thus far.

Meanwhile, Z.AI unveiled GLM-Image, the first open auto-regressive image generation model, which combines an auto-regressive module with a diffusion decoder. The model reportedly excels at text rendering and precise instruction-following, with benchmark results on par with leading competitors. GLM-Image is available on Hugging Face and through an API for developers, signaling a significant advancement in open-source image generation technology.

On the software front, Google has rolled out Personal Intelligence for its Gemini AI, a feature allowing users to connect their Gmail, Photos, YouTube, and Search accounts for improved contextual responses. Initially available to Google AI Pro and Ultra subscribers, the feature promises strict privacy measures, assuring users that their data will not directly influence model training. Additionally, Google’s Flow tool, based on Veo 3.1, is now available for Business, Enterprise, and Education Workspace plans, enhancing video generation capabilities through prompts.

Meituan has also introduced LongCat-Flash-Thinking-2601, an open-source agentic reasoning model optimized for complex workflows. The 560 billion parameter model includes a “Heavy Thinking” mode that has achieved a perfect score on the AIME-25 benchmark, marking a significant leap in advanced reasoning capabilities.

OpenAI has expanded access to its GPT-5.2 Codex model via API; it was previously limited to the Codex product. The model has achieved state-of-the-art results on various benchmarks and introduces native context compaction to support longer, agentic tasks. Tools such as Cursor and GitHub Copilot have integrated GPT-5.2 Codex, and the model has been used in projects like FastRenderer, a web browser built from scratch in Rust, demonstrating its potential in rapid software development.

In further advancements, Google introduced TranslateGemma, a suite of open-source translation models fine-tuned for multilingual applications across 55 languages. The translation models, available in various parameter sizes, promise efficient performance, with the 4B variant capable of running entirely on-device, enhancing on-the-go translation capabilities.

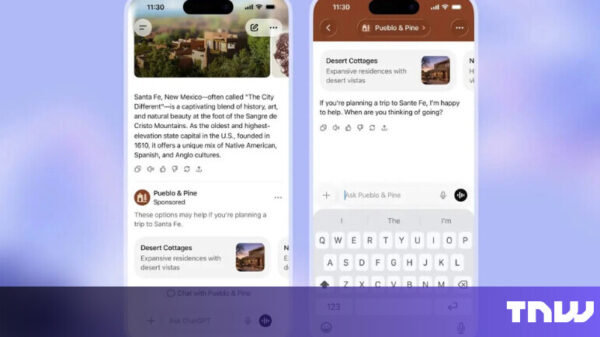

OpenAI has made ChatGPT Go, its $8/month subscription tier, available globally, offering increased access to newer models. The company has also introduced plans for ads on lower-tier subscriptions, a shift that aims to enhance monetization without compromising user trust.

In the medical AI domain, Google Research has released updates to its models for medical diagnostics, with MedGemma 1.5 introducing native 3D imaging support and MedASR outperforming existing models in clinical dictation accuracy. Separately, Baichuan AI has announced Baichuan-M3, a new 235 billion parameter medical large language model designed to support clinical decision-making and outperform previous iterations in accuracy.

In local computing, Raspberry Pi has unveiled an 8GB add-on board designed to run AI models more efficiently, catering to hobbyists who want to run capable AI models on compact devices. Meanwhile, DeepSeek’s latest research highlights a novel approach to memory management in large language models, potentially setting the stage for improved performance in knowledge-intensive tasks.

Microsoft Research has introduced OptiMind, a 20 billion parameter model tailored for business optimization. The model can translate natural-language business problems into mathematical formulations, promising efficiency comparable to larger systems while running on local machines.

In a broader industry context, analysts predict a substantial increase in U.S. corporate bond issuance driven by AI hyperscalers as they seek capital for data center expansions. This trend may mark a significant shift in how companies fund their AI initiatives.

Notably, OpenAI’s recent $10 billion compute deal with Cerebras underscores the company’s pursuit of specialized hardware essential for deploying advanced AI models. The agreement may also bolster Cerebras’ prospects for an initial public offering.

These developments reflect a dynamic landscape in AI, where companies are not only competing on technological fronts but also navigating regulatory reviews and market demands for innovation, privacy, and performance.
