Researchers have reported a new method for analyzing single-cell multiomic data with deep contrastive learning. The study, by Cheng et al., addresses cellular heterogeneity and the functional integration of distinct biological modalities, and it could substantially improve how complex biological systems are studied. The findings were published in the journal *Genome Medicine*.

The approach centers on aligned cross-modal integration of several omics layers, including genomic, transcriptomic, and epigenomic data measured at single-cell resolution. Integration matters because conventional analytical methods often struggle with the complexity of such high-dimensional datasets. The new method aims to close this gap, giving a fuller picture of the cellular dynamics and regulatory mechanisms that govern cell function and identity.

At the core of the work is contrastive learning, a machine learning technique that trains a model to place similar instances (for example, two measurements of the same cell) close together in a shared representation space while pushing dissimilar instances apart. Cheng and colleagues adapted this principle to multiomic data, producing robust representations that capture both the features shared across omics layers and those unique to each. These representations support finer-grained analysis of cell behavior and of the interactions among molecular layers.
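To make the contrastive idea concrete, here is a minimal sketch of one widely used contrastive objective, a symmetric InfoNCE loss, applied to paired cell embeddings from two modalities. The article does not specify the loss, encoders, or hyperparameters Cheng et al. use, so the modality labels (RNA and ATAC), the function names, the `temperature=0.1` default, and the toy vectors are all illustrative assumptions rather than the authors' implementation.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero
    return [x / norm for x in vec]


def _mean_cross_entropy(logits):
    """Average of -log softmax(row)[i]: how poorly row i picks out its own partner i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def info_nce(rna_emb, atac_emb, temperature=0.1):
    """Symmetric InfoNCE loss for paired cross-modal cell embeddings.

    Hypothetical sketch, not the loss used by Cheng et al.: row i of rna_emb
    and row i of atac_emb are assumed to describe the same cell (the positive
    pair), and every other row in the batch acts as a negative.
    """
    a = [l2_normalize(v) for v in rna_emb]
    b = [l2_normalize(v) for v in atac_emb]
    # logits[i][j] = cosine similarity of RNA cell i and ATAC cell j, divided by temperature
    logits = [[sum(x * y for x, y in zip(ai, bj)) / temperature for bj in b] for ai in a]
    # Transpose to score the ATAC -> RNA direction as well
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (_mean_cross_entropy(logits) + _mean_cross_entropy(logits_t))


if __name__ == "__main__":
    rna = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    atac = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]  # roughly matches rna row by row
    shuffled = [atac[1], atac[2], atac[0]]         # breaks the cell-to-cell pairing
    print(info_nce(rna, atac) < info_nce(rna, shuffled))  # True
```

Minimizing this loss pulls the two views of each cell together and pushes views of different cells apart; in a real pipeline the embeddings would come from trainable neural encoders rather than fixed lists.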

By combining deep contrastive learning with single-cell multiomics, the authors establish a systematic framework for analyzing heterogeneous cell populations. The framework is designed to help researchers address key biological questions, such as identifying cell types and characterizing how regulatory networks function. According to the authors, the method improves clustering and classification performance and also sheds light on the implications of cellular diversity.

The study also stresses the importance of interactions among molecular layers. By aligning the omics modalities through deep contrastive representations, it shows how cross-modal relationships help determine cellular identity and function. This integrated view can improve the interpretation of complex cell behavior in both health and disease, which could in turn inform more effective therapeutic strategies.
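One common way to check whether two modalities are well aligned in a shared space is the FOSCTTM score (fraction of samples closer than the true match), which asks, for each cell, how many cells from the other modality lie nearer than its own partner. The article does not say whether Cheng et al. report this metric; the pure-Python sketch below is an illustration with hypothetical function names and toy coordinates.

```python
import math


def euclidean(u, v):
    """Straight-line distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def foscttm(x_emb, y_emb):
    """Fraction Of Samples Closer Than the True Match, averaged over both directions.

    Illustrative sketch, not taken from Cheng et al.: x_emb[i] and y_emb[i] are
    assumed to be the same cell embedded from two modalities. 0.0 means every
    cell's true partner is its nearest cross-modal neighbor.
    """
    n = len(x_emb)
    if n < 2:
        raise ValueError("need at least two paired cells")
    scores = []
    for i in range(n):
        true_d = euclidean(x_emb[i], y_emb[i])
        # x -> y: other cells from modality y that lie closer to x_i than its partner
        closer_xy = sum(1 for j in range(n) if j != i and euclidean(x_emb[i], y_emb[j]) < true_d)
        # y -> x: the same question asked from the other modality's side
        closer_yx = sum(1 for j in range(n) if j != i and euclidean(y_emb[i], x_emb[j]) < true_d)
        scores.extend([closer_xy / (n - 1), closer_yx / (n - 1)])
    return sum(scores) / len(scores)


if __name__ == "__main__":
    x = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    print(foscttm(x, x))                   # 0.0: perfectly aligned
    print(foscttm(x, [x[1], x[2], x[0]]))  # larger: pairing scrambled
```

Lower scores indicate tighter alignment. Because the metric only uses distances, it can be applied to any shared embedding, whichever model produced it.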

The potential clinical implications of this research are particularly noteworthy. By clarifying cell-specific regulatory mechanisms, the approach could inform targeted interventions for diseases marked by cellular dysregulation, such as cancer and autoimmune disorders. A clearer picture of the cellular landscape could also advance precision medicine by supporting treatments tailored to individual patient profiles.

Beyond its biological insights, the study highlights the computational efficiency of the approach. Traditional pipelines often require extensive preprocessing and manual data integration, steps that can introduce bias and slow analysis. The authors report that their deep learning-based framework substantially reduces this burden, streamlining analysis while improving accuracy.

The authors support these findings with case studies showing that the method can uncover biologically relevant signals and regulatory pathways. The examples illustrate how aligned cross-modal integration can reveal novel cell types and states that were previously obscured by noise in high-dimensional data.

As the scientific community continues to embrace personalized medicine, methods like the one proposed by Cheng and colleagues are likely to become increasingly important. Integrated analysis of single-cell multiomics promises to deepen understanding of the genetic and epigenetic mechanisms underlying complex diseases and, ultimately, to guide the development of more effective treatments.

In conclusion, the work of Cheng, Su, Fan, and their colleagues marks a notable step forward in multiomic data analysis. By harnessing deep contrastive learning, it offers a new lens for exploring the intricate structure of single-cell data. As artificial intelligence and machine learning methods continue to mature, studies like this are likely to play an important role in shaping genomic research and its applications in healthcare.

More broadly, the research shows how new analytical techniques can transform biological inquiry. The tools and insights emerging from studies like this one should help researchers unravel the molecular complexity of living cells and, with it, deepen our understanding of biology as a whole.

Subject of Research: Integrated analysis of single-cell multiomic data using deep contrastive learning.

Article Title: Aligned cross-modal integration and regulatory heterogeneity characterization of single-cell multiomic data with deep contrastive learning.

Article References: Cheng, Y., Su, Y., Fan, Y. et al. Aligned cross-modal integration and regulatory heterogeneity characterization of single-cell multiomic data with deep contrastive learning. Genome Med 18, 10 (2026). https://doi.org/10.1186/s13073-025-01586-7

Keywords: single-cell multiomics, deep contrastive learning, cross-modal integration, genomic data, machine learning, cellular heterogeneity, regulatory networks, precision medicine.

See also AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media

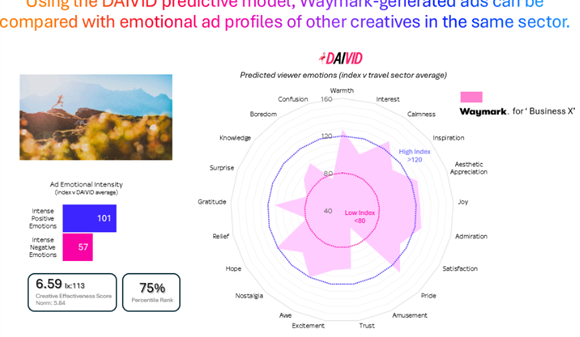

AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics

Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains

Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership

Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions

Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions