Alphabet is emerging as a formidable player in the competitive landscape of artificial intelligence (AI) infrastructure. Although recent investor enthusiasm for the company’s long-term potential in AI may appear sudden, Alphabet has been strategically building its capabilities for over a decade. The tech giant is beginning to see the fruits of its labor in AI and cloud computing, and analysts predict that its competitive edge will only expand, positioning its stock as a vital investment for the next phase of AI infrastructure development.

Alphabet’s journey into AI began in 2011 with the establishment of the Google Brain research lab, which went on to develop the TensorFlow deep learning framework. TensorFlow is now widely used for training machine learning models, including large language models (LLMs), and for running inference within Google Cloud. The company’s 2014 acquisition of British AI lab DeepMind has also proven instrumental: Google Brain and DeepMind merged in 2023, and the combined unit strengthened the foundations of Alphabet’s Gemini LLM.

In November 2015, Alphabet released its TensorFlow machine learning library, and it introduced its tensor processing units (TPUs) the following year. These custom application-specific integrated circuits (ASICs) are tailored for machine learning and AI workloads and optimized for the TensorFlow framework. Alphabet initially used TPUs for internal operations, then began renting them to customers in 2018 as part of its infrastructure-as-a-service offering.

Over the past decade, Alphabet has continually refined its chip designs; its TPUs are now in their seventh generation. While many competitors are only beginning to explore the benefits of ASICs for AI workloads, Alphabet’s TPUs have been battle-tested in demanding environments such as search and YouTube, as well as in training its advanced Gemini models. This extensive experience gives Alphabet an advantage in the AI space that rivals will find hard to match.

By pairing state-of-the-art custom AI chips with one of the premier foundational AI models, Alphabet holds a significant structural advantage for the future of AI. No other competitor has this combination, which gives Alphabet a substantial cost advantage and creates a virtuous cycle. By using TPUs to train Gemini, Alphabet achieves better returns on its capital expenditures than rivals, which often depend on Nvidia’s pricier graphics processing units (GPUs) to train their models. This higher return on investment allows Alphabet to invest further in improving its TPUs and AI models, which in turn drives demand from customers.

Moreover, Alphabet’s TPUs help reduce inference costs, benefiting both the company and its customers. The overall operating model is more cost-effective than those of competitors, establishing an ecosystem that is difficult for others to replicate. Unlike cloud computing rivals such as Amazon and Microsoft, which rely more heavily on third-party LLMs, Alphabet has its own world-class AI model, which enables it to capture the complete AI revenue stream.

Alphabet’s ongoing efforts to enhance its ecosystem, exemplified by its impending acquisition of cloud security company Wiz, further solidify its competitive edge. The next phase of AI infrastructure is likely to reward not merely chipmakers or cloud companies but those with a vertically integrated model capable of optimizing the entire AI model training and inference process. Alphabet is poised to excel in this regard, thanks to its significant head start in custom AI chips and vertical integration.

As Alphabet continues to deepen its foothold in AI infrastructure, the stock is viewed as a compelling long-term investment. Even after a strong performance this year, its unique position suggests a promising trajectory ahead, capturing both the growing demand for AI solutions and the evolving landscape of cloud computing.

See also Ukraine Develops National AI Using Google’s Gemma Framework for Military and Civilian Use

Ukraine Develops National AI Using Google’s Gemma Framework for Military and Civilian Use AI Researchers Unveil Expert System for Hydroponic Strawberry Cultivation, Enhancing Sustainability and Yield

AI Researchers Unveil Expert System for Hydroponic Strawberry Cultivation, Enhancing Sustainability and Yield Nvidia Invests $2B in Synopsys to Revolutionize AI Chip Design and Engineering Workflows

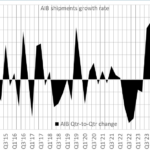

Nvidia Invests $2B in Synopsys to Revolutionize AI Chip Design and Engineering Workflows Jon Peddie Research Reveals Q3’25 AIB Market at $8.8B with -0.7% CAGR Forecast

Jon Peddie Research Reveals Q3’25 AIB Market at $8.8B with -0.7% CAGR Forecast VCI Global Announces Strategic Shift to Lead AI Infrastructure and Stablecoin Payments in ASEAN, MENA

VCI Global Announces Strategic Shift to Lead AI Infrastructure and Stablecoin Payments in ASEAN, MENA